Google recaps how its LLMs could change in-game interactions

At this year's Game Developers Conference, Google introduced new AI models and development tools aimed at game studios.

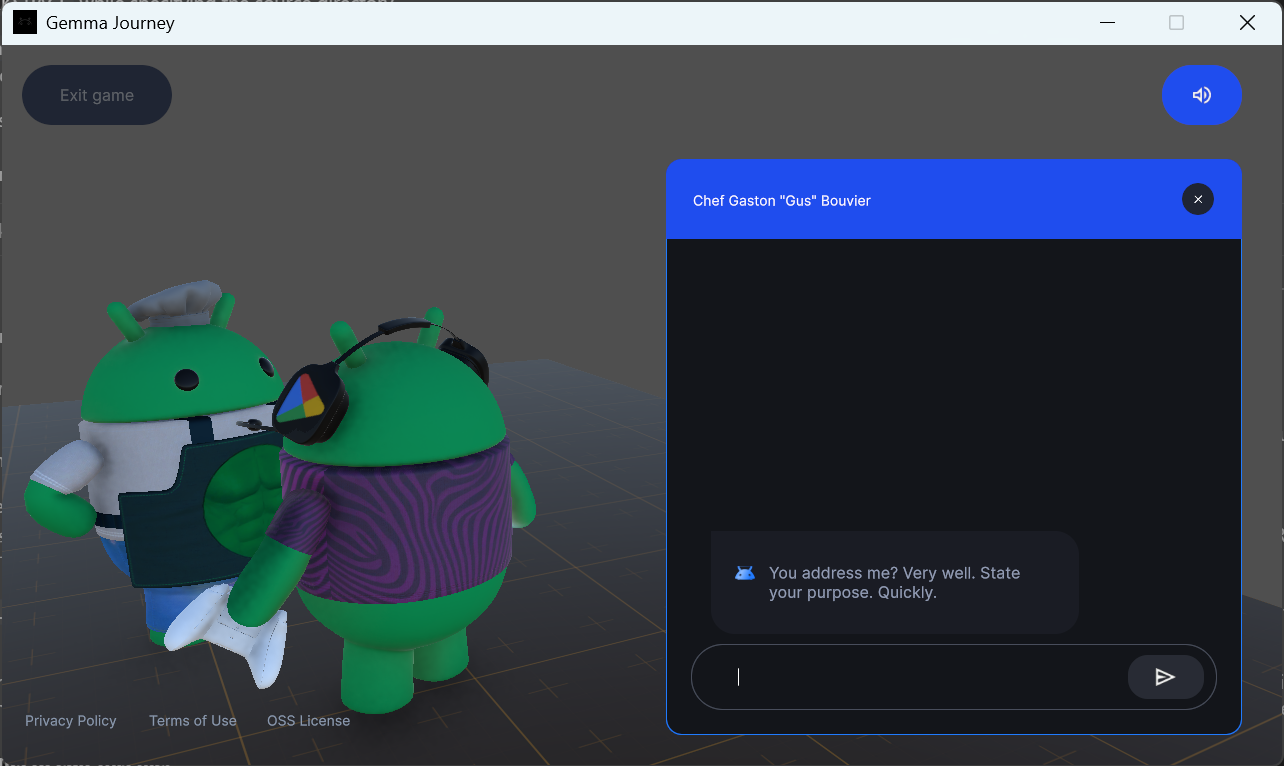

In a recent blog post, the company summarized its announcements and showcased "Gemma Journey," a playable demo built to exhibit how large language models (LLMs) can be used in games.

Gemma 3 is Google's latest open language model family, designed to run locally on devices like smartphones, laptops, and workstations. The models handle multimodal input—including text, images, and video—and use an extended context window to process longer conversations or complex game sequences.

With function calling, developers can use Gemma 3 to build AI systems that interact directly with game mechanics, such as triggering actions or adapting to player behavior. The models range from 1 to 27 billion parameters and support over 140 languages.
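As a rough illustration of how function calling might connect a model to game mechanics, the sketch below assumes the model emits a JSON object with a `name` and `arguments` field. The action names (`give_item`, `set_mood`) and the JSON shape are hypothetical and not taken from Google's tooling; the point is only that the game validates and dispatches the call instead of trusting raw model text.

```python
import json

# Hypothetical game actions; names and signatures are illustrative only.
def give_item(player: str, item: str) -> str:
    return f"{player} receives {item}"

def set_mood(npc: str, mood: str) -> str:
    return f"{npc} is now {mood}"

# Whitelist of actions the model is allowed to trigger.
ACTIONS = {"give_item": give_item, "set_mood": set_mood}

def dispatch(model_output: str) -> str:
    """Parse a JSON function call from the model and run the matching action."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return "ignored: not a function call"
    if not isinstance(call, dict):
        return "ignored: not a function call"
    name = call.get("name")
    if not isinstance(name, str) or name not in ACTIONS:
        return "ignored: unknown action"
    try:
        # Keyword arguments must match the handler's parameters exactly.
        return ACTIONS[name](**call.get("arguments", {}))
    except TypeError:
        return "ignored: bad arguments"
```

Anything the model produces that is not a well-formed, whitelisted call is dropped, so plain dialogue text or a malformed call cannot change game state.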

Unity integration with Gemma.cpp

To integrate Gemma into games, Google released a Unity plugin based on Gemma.cpp, a C++ inference engine optimized for CPU performance. This approach leaves GPU resources available for graphics. The open-source plugin is intended to make it easier to add Gemma 3 to Unity projects.

The demo game "Gemma Journey" lets players interact with android NPCs whose personalities and behavior are controlled entirely by prompts. One example is "Chef Gus," an impulsive cook who reacts sharply to criticism and challenges players with riddles.

Gus's backstory, tone, and vocabulary are all set in the prompt, with the model generating dynamic, multilingual dialogue in real time—no manual scripting involved. According to Google, the demo shows that targeted prompts can be used to create complex character behaviors.
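A prompt-defined character of this kind could be assembled along the following lines. This is a minimal sketch, not the demo's actual prompt: the `Persona` fields, the wording of the prompt, and the sample vocabulary are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Persona:
    # Hypothetical structure; the demo's real prompt format is not public here.
    name: str
    backstory: str
    tone: str
    vocabulary: list[str] = field(default_factory=list)

def build_system_prompt(p: Persona, language: str = "English") -> str:
    """Turn a persona description into a system prompt for the model."""
    lines = [
        f"You are {p.name}, a character in a game.",
        f"Backstory: {p.backstory}",
        f"Tone: {p.tone}",
    ]
    if p.vocabulary:
        lines.append("Favor these words: " + ", ".join(p.vocabulary))
    lines.append(f"Stay in character and reply in {language}.")
    return "\n".join(lines)

# Example persona loosely based on the article's description of Chef Gus;
# the vocabulary list is invented for this sketch.
gus = Persona(
    name="Chef Gus",
    backstory="An impulsive cook who challenges visitors with riddles.",
    tone="Hot-tempered, reacts sharply to criticism",
    vocabulary=["simmer", "garnish"],
)
```

Calling `build_system_prompt(gus, "German")` would yield a prompt that keeps the same character but asks for replies in German, which is one way the multilingual behavior described above could be driven from data instead of scripted dialogue.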

Google is collaborating with Indian game developer Nazara Technologies to bring Gemma.cpp to existing titles like "Animal Jam." The aim is to make in-game characters respond to players in a more dynamic and contextual way. The project is intended to explore how generative AI can be added to established games.

Cloud AI for large-scale games with Gemini 2.0

For larger games that require cloud infrastructure, Google is using Gemini 2.0. In the "Home Run: Gemini Coach Edition" demo app, an AI agent built with Gemini 2.0 Flash acts as a virtual coach in a mobile baseball game, analyzing gameplay in real time and providing advice. It runs on Google Cloud and demonstrates how generative AI can handle interactive coaching and assistance.

Technically, Google uses Vertex AI for model execution, Agones for Kubernetes-based game server hosting, and Google Kubernetes Engine (GKE) to run game environments and AI systems in parallel. The setup is designed to scale and support live service and multiplayer games.
