Google's open standard lets AI agents build user interfaces on the fly

Key Points

- Google has introduced A2UI, an open standard that allows AI agents to create graphical user interfaces like forms or buttons directly.

- A2UI works by transmitting structured data instead of code, which enhances security and allows the design to adapt to each app.

- The standard is platform-independent, already in production use, and supported by several partners.

Google's new A2UI standard gives AI agents the ability to create graphical interfaces on the fly. Instead of just sending text, AIs can now generate forms, buttons, and other UI elements that blend right into any app.

The open-source project A2UI (Agent-to-User Interface) aims to standardize how AI agents create visual responses. Released under the Apache 2.0 license, the standard bridges the gap between generative AI and graphical user interfaces.

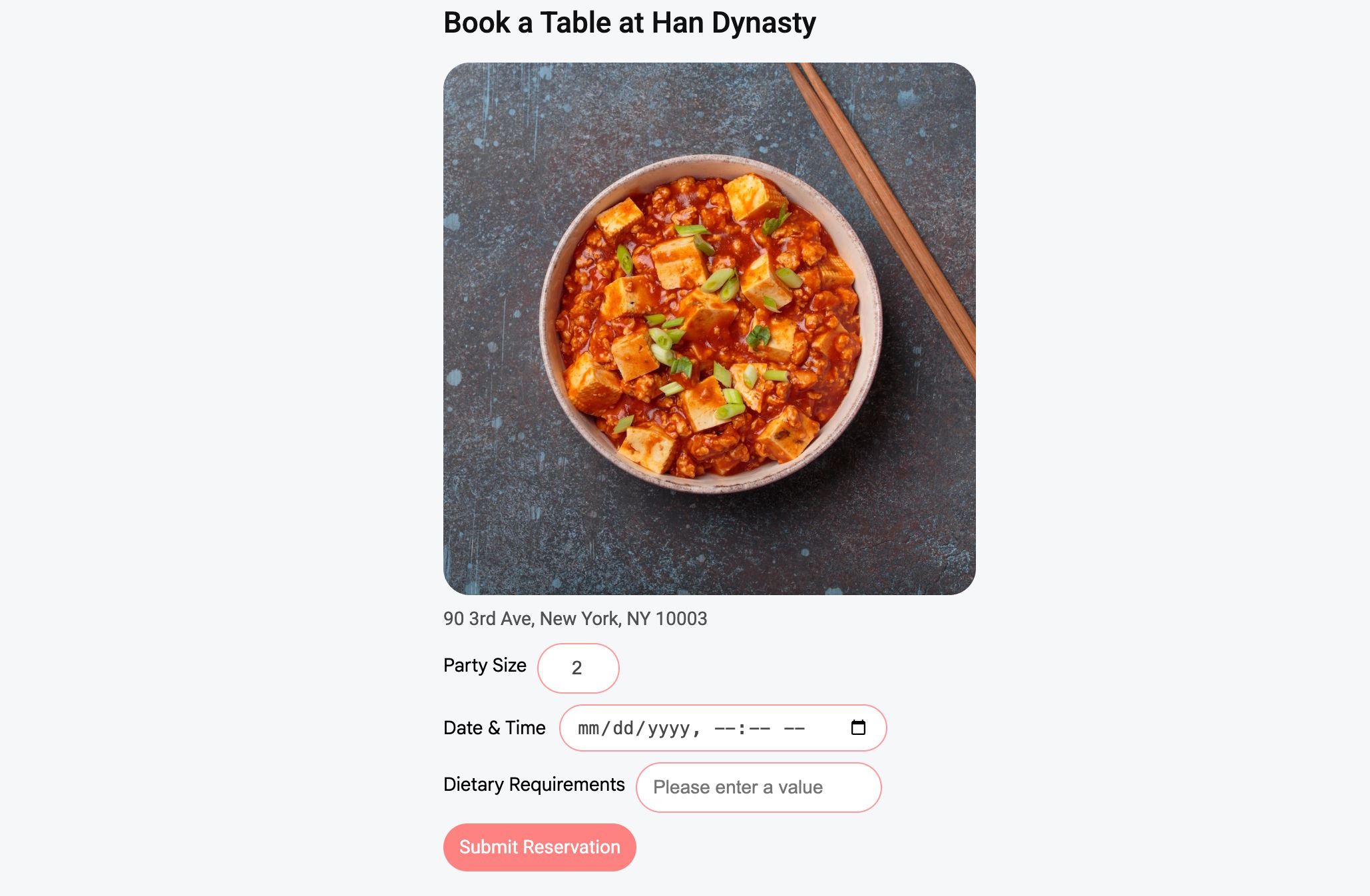

The thinking behind A2UI is simple: plain text or code output often doesn't cut it for complex tasks. Google points to restaurant reservations as an example; a text-only conversation can get tedious fast.

With A2UI, an agent can spin up a complete form with date pickers and available time slots right away. The goal is a "context-aware interface" that adapts as the conversation unfolds.

A2UI ditches iframes and executable code

A2UI breaks with the common practice of having agents generate HTML or JavaScript that runs in sandboxes or iframes. According to the research team, this approach poses security risks and often looks janky since the design rarely matches the host app.

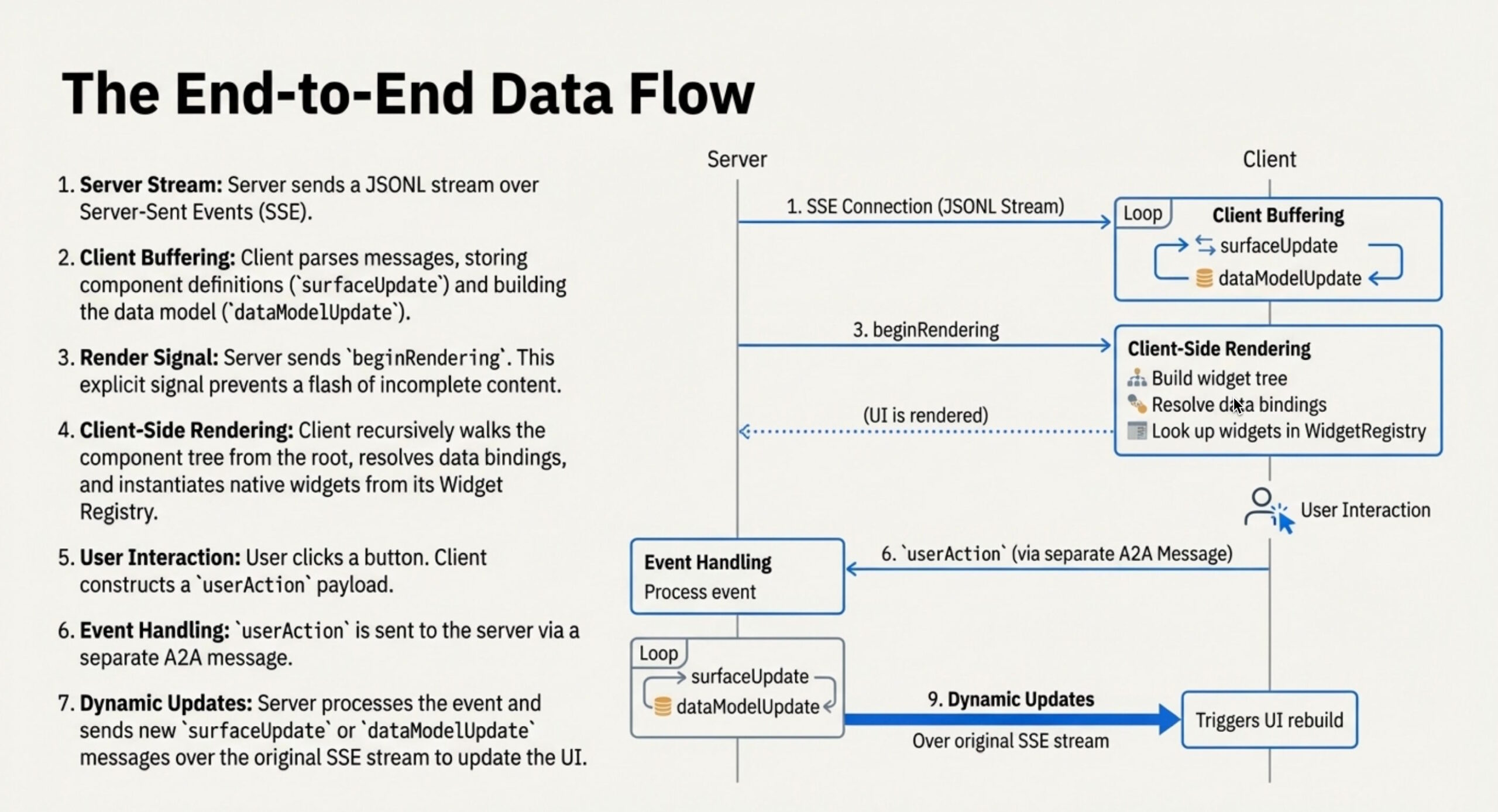

A2UI takes a different route: it sends data, not code. The agent doesn't push executable logic; it sends a JSON structure describing the interface it wants.

The receiving app interprets this data and renders it with its own native UI components. This security model limits agents to a predefined set of components like buttons and text fields.

The approach cuts down on code injection risks. And since the agent only specifies structure, not pixel-perfect design, the client app keeps full control over how things look.
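To make the "data, not code" idea concrete, here is a minimal sketch of a client that renders only components from its own catalog. The payload shape, the component names (`Text`, `TextField`, `Button`), and the `render` function are all hypothetical and chosen for illustration; they are not taken from the A2UI specification. Strings stand in for native widgets.

```python
import json

# Hypothetical renderers: in a real client these would build native widgets.
# The component names are illustrative, not taken from the A2UI spec.
def render_text(props):
    return f"[Text] {props.get('text', '')}"

def render_text_field(props):
    return f"[TextField] {props.get('label', '')}: ____"

def render_button(props):
    return f"[Button] {props.get('label', '')}"

# The client-side catalog: the only component types this app agrees to draw.
CATALOG = {
    "Text": render_text,
    "TextField": render_text_field,
    "Button": render_button,
}

def render(payload_json):
    """Parse an agent's UI description and render only catalog components."""
    payload = json.loads(payload_json)
    lines = []
    for component in payload["components"]:
        renderer = CATALOG.get(component.get("type"))
        if renderer is None:
            # Anything outside the catalog is skipped, never executed.
            lines.append(f"[skipped unsupported component: {component.get('type')!r}]")
            continue
        lines.append(renderer(component.get("props", {})))
    return lines

# An assumed agent message: plain JSON describing structure, with one
# component type the client does not know about.
AGENT_MESSAGE = json.dumps({
    "components": [
        {"type": "Text", "props": {"text": "Book a table"}},
        {"type": "TextField", "props": {"label": "Party size"}},
        {"type": "Script", "props": {"src": "https://example.invalid/x.js"}},
        {"type": "Button", "props": {"label": "Reserve"}},
    ]
})

if __name__ == "__main__":
    for line in render(AGENT_MESSAGE):
        print(line)
```

Because the agent only names component types and properties, the look of each element is decided entirely by the client's renderers, and an unknown type such as the `Script` entry above simply has nothing to map to.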

How A2UI stacks up against Anthropic's MCP and OpenAI's ChatKit

Google is carving out space in an increasingly crowded market for agentic UI. The team draws clear lines between A2UI and other standards. According to Google, Anthropic's Model Context Protocol (MCP) treats UI as a resource, typically prefab HTML loaded in a sandbox. A2UI goes native-first, aiming for deeper integration with host apps.

Google also sets A2UI apart from OpenAI's ChatKit. ChatKit works best inside OpenAI's ecosystem, while A2UI is built to be platform-agnostic. It's designed for complex multi-agent setups where one agent orchestrates others and securely displays UI suggestions from remote sub-agents.
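One way to picture the multi-agent case is an orchestrator that checks each UI suggestion from a remote sub-agent before passing it on for display. The sketch below is an assumption about how such a check could look, not Google's implementation; the allowlist, the nested `children` field, and the agent names are hypothetical.

```python
# Hypothetical allowlist of component types the host app can render.
ALLOWED_TYPES = {"Column", "Text", "TextField", "Button", "DatePicker"}

def is_safe(component):
    """Return True if the component and all nested children use allowed types."""
    if not isinstance(component, dict):
        return False
    if component.get("type") not in ALLOWED_TYPES:
        return False
    return all(is_safe(child) for child in component.get("children", []))

def orchestrate(sub_agent_replies):
    """Keep only UI suggestions in which every component passes the allowlist."""
    accepted = []
    for agent_name, ui in sub_agent_replies.items():
        if is_safe(ui):
            accepted.append({"source": agent_name, "ui": ui})
    return accepted

# Assumed replies from two remote sub-agents (names are made up).
REPLIES = {
    "booking-agent": {
        "type": "Column",
        "children": [
            {"type": "DatePicker"},
            {"type": "Button"},
        ],
    },
    "untrusted-agent": {
        "type": "Column",
        "children": [
            {"type": "Text"},
            {"type": "RawHtml"},  # not in the allowlist, so the whole tree is rejected
        ],
    },
}

if __name__ == "__main__":
    for item in orchestrate(REPLIES):
        print(item["source"])
```

Rejecting the whole suggestion when any nested component fails is a deliberately strict design choice in this sketch; a real client might instead drop only the offending component.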

Just weeks ago, Google showed off how AI models can create interactive tools and simulations in real time with the introduction of "Generative UI" in the Gemini app and search. Called "Dynamic View," the feature generates tailored graphical interfaces to make complex topics easier to grasp.

The standard sits at version 0.8 and is already in production, according to Google. The GenUI SDK for Flutter uses A2UI to handle communication between server-side agents and mobile apps. Google's internal mini-app platform Opal and Gemini Enterprise run on the protocol too.

Google has lined up partnerships with external frameworks. The teams behind AG-UI and CopilotKit supported the protocol from day one. The project ships client libraries for Flutter, Web Components, and Angular, and the team is inviting developers to contribute more renderers and integrations. Given how fast standards like MCP have spread, A2UI looks well-positioned for broad adoption.
