The large language model GPT-JT was fine-tuned in a decentralized manner. It is available as open source and can compete with GPT-3 in some disciplines.

Stable Diffusion, with its open-source approach, is a serious alternative to DALL-E 2 and Midjourney when it comes to generative AI for images. The decentrally trained GPT-JT could do the same for large language models by approaching the performance of GPT-3.

GPT-JT was developed by the Together community, which includes researchers from ETH Zurich and Stanford University.

A fork of GPT-J-6B

The language model builds on EleutherAI's six-billion-parameter GPT-J-6B and was fine-tuned on 3.5 billion tokens. Instead of linking all machines over the fast interconnects of a data center, Together had only relatively slow connections of up to one gigabit/s available.

With classical learning algorithms, each machine would generate 633 TB of data for communication, according to the researchers. Thanks to an optimizer and a strategy based on local training that randomly skips global communications, the GPT-JT team was able to reduce that demand to 12.7 TB.
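To put those two figures in perspective, here is a rough back-of-the-envelope calculation of how long each volume would occupy a single one gigabit/s link. It is only a sketch: it assumes decimal terabytes and a link that sustains its full nominal rate with no protocol overhead, neither of which comes from the researchers. The helper name `transfer_days` is made up for this illustration.

```python
# Back-of-the-envelope transfer times for the per-machine traffic quoted above.
# Assumptions (not from the article): decimal terabytes (1 TB = 1e12 bytes)
# and a link that sustains its full 1 Gbit/s with no protocol overhead.

LINK_BITS_PER_SECOND = 1e9  # "up to one gigabit/s"
BYTES_PER_TB = 1e12
SECONDS_PER_DAY = 86_400


def transfer_days(terabytes: float, link_bps: float = LINK_BITS_PER_SECOND) -> float:
    """Days needed to move `terabytes` of data over a link of `link_bps` bits/s."""
    bits = terabytes * BYTES_PER_TB * 8
    return bits / link_bps / SECONDS_PER_DAY


classical_tb = 633.0  # classical learning algorithms, per machine
reduced_tb = 12.7     # with the optimizer and the local-training strategy

reduction_factor = classical_tb / reduced_tb
classical_days = transfer_days(classical_tb)
reduced_days = transfer_days(reduced_tb)

print(f"reduction factor: {reduction_factor:.1f}x")
print(f"classical: {classical_days:.1f} days on the wire")
print(f"reduced:   {reduced_days:.1f} days on the wire")
```

Under these assumptions the reduction is roughly a factor of 50: about 59 days of pure transfer time per machine shrink to a little over one day. Real-world throughput would be lower, so both durations are best read as lower bounds.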

"Notably, and somewhat more importantly than the model itself, which represents a first step, we want to highlight the strength of open-source AI, where community projects can be improved incrementally and contributed back into open-source, resulting in public goods, and a value chain that everyone can benefit from."

Together.xyz

GPT-JT can catch up with GPT-3 in classification

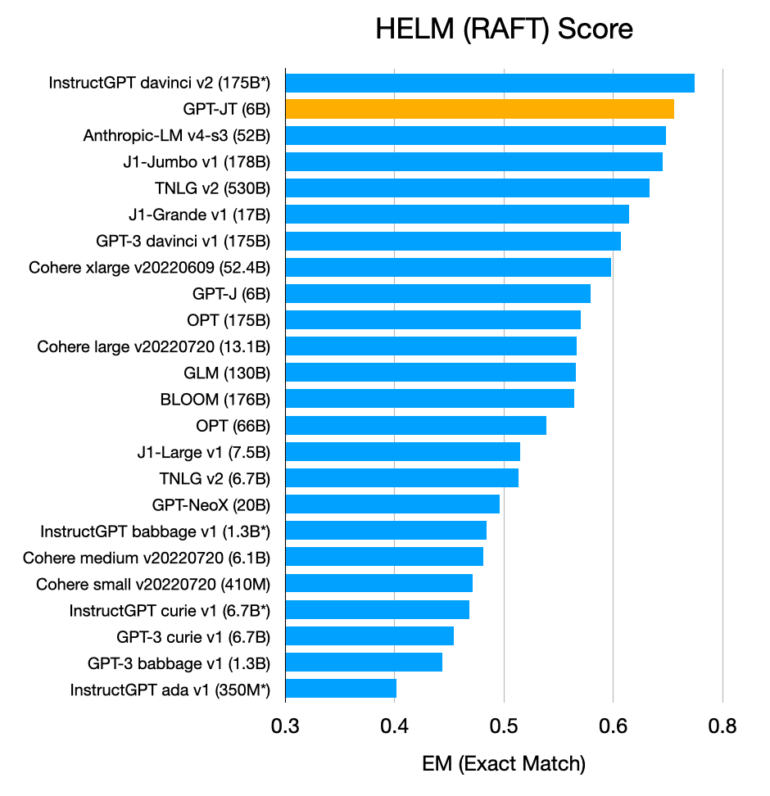

GPT-JT can keep up with other language models despite its training limitations. When it comes to classifying text, the open-source model ranks second on RAFT (Real-world Annotated Few-shot Tasks), a benchmark for few-shot text classification.

This result puts GPT-JT just behind OpenAI's InstructGPT "davinci V2", which, at 175 billion parameters, is almost 30 times larger. Similarly large open-source models like BLOOM only appear in the second half of the ranking.

"Attack on the political economy of AI"

Jack Clark, author of the Import AI newsletter, calls GPT-JT an "attack on the political economy of AI." Until now, much of AI development has been driven by a few groups with access to large, centralized computer networks.

"GPT-JT suggests a radically different future – distributed collectives can instead pool computers over crappy internet links and train models together," Clark concludes.

Open-source model now available

You can try out a GPT-JT demo for free on Hugging Face with sample scenarios such as sentiment analysis, topic classification, summarization, or question answering. The code is available there.