Climate coalition sees two fundamental climate risks from AI

Key Points

- Climate Action Against Disinformation, a coalition of more than 50 climate and anti-disinformation organizations, warns of two major climate risks posed by AI: dramatic increases in energy and water consumption, and the accelerated spread of climate disinformation.

- The International Energy Agency predicts that energy consumption by AI data centers could double in the next two years, and data centers consume large amounts of freshwater, which could exacerbate local water shortages.

- The organization calls for immediate and comprehensive regulation of AI to fully understand its climate impact and protect against it, and stresses the importance of transparency, safety, and accountability in AI development.

According to Climate Action Against Disinformation, the enormous consumption of resources and the spread of misinformation through AI are serious threats to climate protection.

An alliance of over 50 climate and anti-disinformation organizations, Climate Action Against Disinformation, warns of two major climate risks posed by AI: a drastic increase in energy and water consumption, and the accelerated spread of climate disinformation.

As examples, the report notes that AI-powered search queries require five to ten times more computing power than a conventional internet search, and that generating a single image with a powerful AI model consumes as much energy as fully charging a smartphone. Training large language models such as GPT-3 and GPT-4 is also extremely energy-intensive.

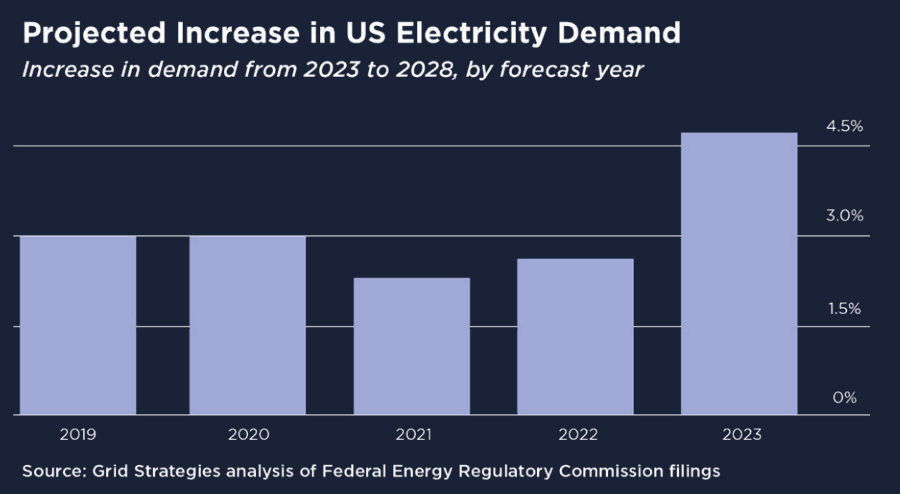

The International Energy Agency predicts that the energy consumption of AI data centers could double in the next two years, reaching roughly the annual electricity consumption of Japan.

The organization also sees the strain on local freshwater resources as problematic. Data centers in the US used 1.7 billion gallons of water per day in 2014.

At least one-fifth of data centers are located in areas of moderate to severe water scarcity. Increasing demand for computing power could exacerbate local water scarcity and drought risks.

OpenAI CEO Sam Altman recently said that AI will consume far more energy than previously thought.

AI could promote climate disinformation

The report points out that AI could accelerate the spread of climate misinformation. Climate deniers could use AI to produce more convincing false content faster and cheaper, and spread it through social media, targeted advertising, and search engines.

AI is already being used in political disinformation campaigns, as in the 2023 Slovak parliamentary election and the fake Biden robocalls ahead of the 2024 New Hampshire primary.

AI models could enable professional climate disinformation actors to build on decades of existing campaigns and produce a greater volume of increasingly personalized content.

The report calls for immediate and comprehensive regulation of AI to fully understand its climate impacts and protect against them. Better AI development, it argues, should rest on three principles: transparency, safety, and accountability.

According to Climate Action Against Disinformation, regulators must require companies to publicly report their energy use and associated emissions.

In addition, AI companies should demonstrate that their products are safe for people and the environment, and show how they guard against discrimination, bias, and disinformation.

In late February, researcher Kate Crawford of USC Annenberg and Microsoft Research criticized the "hidden environmental costs" of generative AI.
