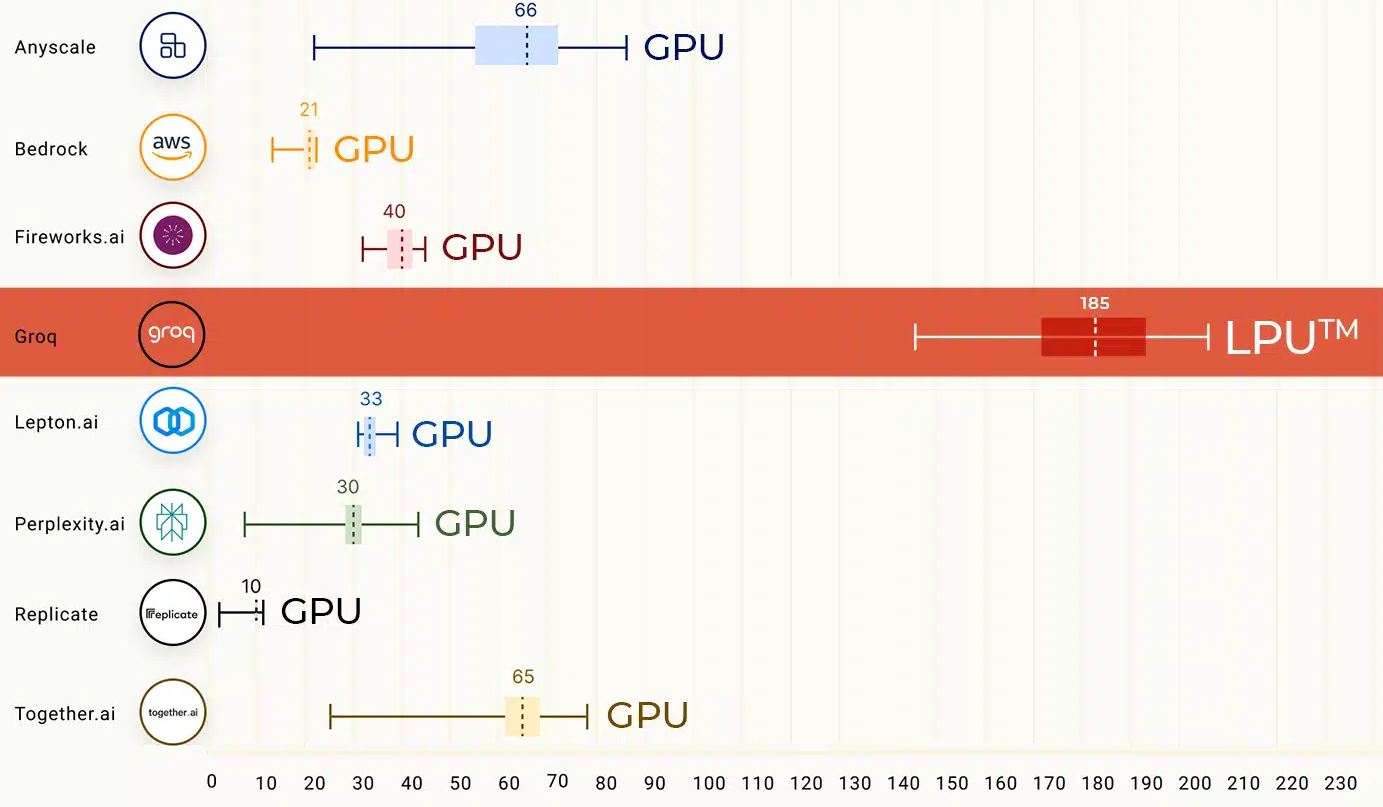

Compared to humans, language models can generate text incredibly fast. But for some, like the startup Groq, that is not fast enough.

To achieve even faster performance, Groq has developed specialized hardware: LPUs (Language Processing Units).

These LPUs are specifically designed to run language models and offer speeds of up to 500 tokens per second. In comparison, the relatively fast LLMs Gemini Pro and GPT-3.5 manage between 30 and 50 tokens per second, depending on load, prompt, context, and delivery.
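A quick back-of-the-envelope calculation shows what these throughput figures mean for the wait time on a single answer. The sketch below is illustrative only. It assumes a constant decode rate and ignores time-to-first-token, network latency, and queueing. The helper name `generation_time_seconds` and the 500-token answer length are hypothetical choices, not anything Groq publishes.

```python
# Hypothetical helper: time to stream a response at a constant decode rate.
# Assumption: the rate stays constant; time-to-first-token and network
# latency are ignored, so real-world timings will be somewhat longer.

def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Return how long it takes to emit num_tokens at a constant rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Throughput figures quoted in the article (tokens per second).
RATES = {
    "Groq LPU (up to)": 500.0,
    "Gemini Pro / GPT-3.5 (upper end)": 50.0,
    "Gemini Pro / GPT-3.5 (lower end)": 30.0,
}

if __name__ == "__main__":
    answer_length = 500  # assumed length of a long answer, in tokens
    for label, rate in RATES.items():
        seconds = generation_time_seconds(answer_length, rate)
        print(f"{label}: {seconds:.1f} s for {answer_length} tokens")
```

At the quoted rates, a 500-token answer takes about 1 second on Groq's hardware, compared with roughly 10 to 17 seconds for Gemini Pro or GPT-3.5.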

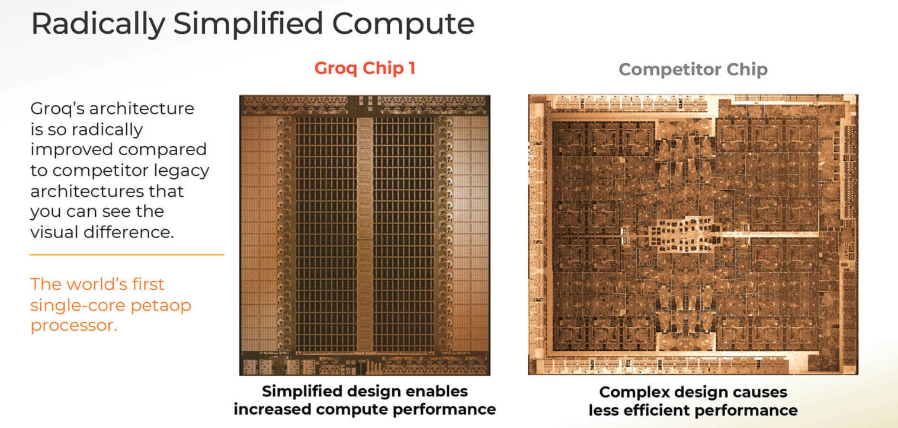

The "GroqChip," the first chip in Groq's LPU system category, uses a "tensor streaming architecture" that Groq says is designed for performance, efficiency, speed, and accuracy.

According to the startup, unlike traditional graphics processing units (GPUs), the chip offers a simplified architecture that enables constant latency and throughput. This can be an advantage for real-time AI applications, such as gaming.

LPUs are also more energy efficient, Groq says. They reduce the effort required to manage multiple threads and avoid underutilization of cores, allowing more computations to be performed per watt.

Groq's chip design allows multiple TSPs (Tensor Streaming Processors) to be connected without the traditional bottlenecks associated with GPU clusters. According to Groq, this makes the system scalable and simplifies hardware requirements for large AI models.

Groq's systems support common machine learning frameworks, which should ease integration into existing AI projects. Groq sells hardware and also offers a cloud API with open-source models such as Mixtral. Groq's speed can be tested with Mixtral and Llama on the company's website.

LPUs could improve the deployment of AI applications and provide an alternative to Nvidia's A100 and H100 chips, which are widely used today and in short supply.

But for now, LPUs only work for inference, a fancy word for running AI models. To train the models, companies still need Nvidia GPUs or similar chips.

Groq was founded in 2016 by Jonathan Ross, who previously worked on TPU chips at Google.