Algorithmic prompting helps large language models like GPT-3 to solve math problems more reliably.

Large language models like GPT-3 are bad at math, even though arithmetic was among the results that attracted a lot of attention when OpenAI presented the model: some were surprised that GPT-3 could add numbers at all.

Since then, researchers have continued to develop new methods to improve the mathematical capabilities of large language models, for example with various forms of prompt engineering or access to an external Python interpreter.

Algorithmic reasoning via prompt engineering?

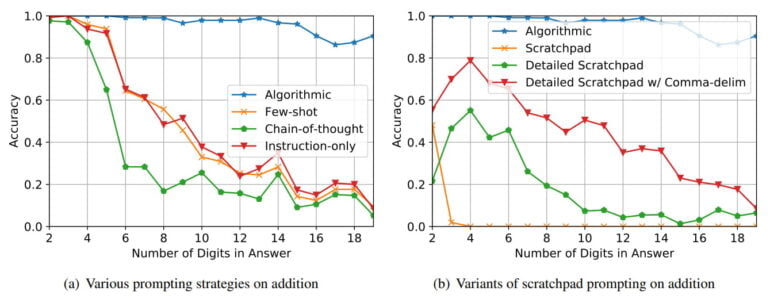

In prompt engineering, researchers experiment with different input patterns and measure their effect on the output of language models. Chain-of-thought prompting, for example, shows that prompting for a step-by-step approach produces significantly better results in some tasks.
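The difference between a standard few-shot prompt and a chain-of-thought prompt lies only in how the worked example is answered. The minimal Python sketch below builds both kinds of prompt as strings. The exemplar question, its worked answer and the function names are invented for illustration, and no model is called.

```python
# Hypothetical exemplar shared by both prompts; invented for illustration.
EXEMPLAR_Q = "A shop has 23 apples, sells 9 and then receives 14 more. How many apples does it have?"


def standard_prompt(question: str) -> str:
    """Few-shot prompt whose exemplar shows only the final answer."""
    return (
        f"Q: {EXEMPLAR_Q}\n"
        "A: The answer is 28.\n\n"
        f"Q: {question}\n"
        "A:"
    )


def chain_of_thought_prompt(question: str) -> str:
    """Few-shot prompt whose exemplar also spells out the intermediate steps."""
    return (
        f"Q: {EXEMPLAR_Q}\n"
        "A: The shop starts with 23 apples. After selling 9 it has 23 - 9 = 14. "
        "After receiving 14 more it has 14 + 14 = 28. The answer is 28.\n\n"
        f"Q: {question}\n"
        "A:"
    )


if __name__ == "__main__":
    print(chain_of_thought_prompt("Tom has 5 marbles and finds 7 more. How many does he have?"))
```

Both prompts end with an open "A:", so the model continues from there. With the second prompt it is nudged to imitate the step-by-step style before giving its answer.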

Despite these advances, however, language models still struggle with even simple algorithmic tasks. A prompt engineering method developed by researchers at the Université de Montréal and Google Research significantly improves the models' performance on mathematical tasks.

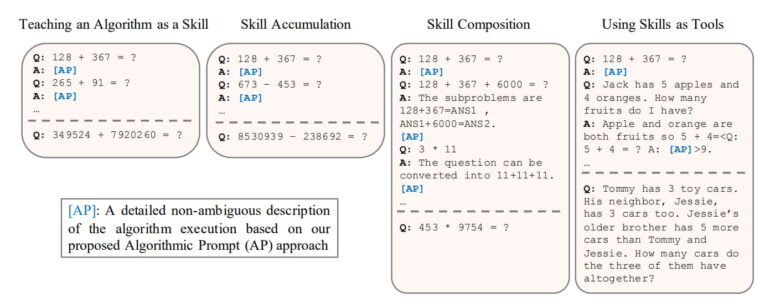

The team writes detailed prompts that describe, step by step, algorithms for solving math problems such as addition. The language model can then use such an algorithm as a tool to solve similar problems. The researchers evaluate their approach on a series of arithmetic and quantitative reasoning tasks.
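The Python sketch below illustrates the idea for addition. One function writes out every digit, partial sum and carry of a + b, the kind of fully explicit explanation an algorithmic prompt contains. A second helper stacks such worked examples in front of a new, unsolved problem. The trace format and the names `addition_trace` and `algorithmic_prompt` are assumptions made for illustration. The paper's actual prompt wording differs.

```python
def addition_trace(a: int, b: int) -> str:
    """Spell out a + b digit by digit, including every carry.

    Illustrative format only; the paper's prompts use their own wording.
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")
    fn = [int(d) for d in str(a)]  # digits of the first number
    sn = [int(d) for d in str(b)]  # digits of the second number
    lines = [f"Problem: {a}+{b}=", f"FN={fn}. SN={sn}."]
    answer: list[int] = []  # result digits, most significant first
    carry = 0
    while fn or sn:
        # Take the last (least significant) remaining digit of each number.
        x = fn.pop() if fn else 0
        y = sn.pop() if sn else 0
        total = x + y + carry
        digit, next_carry = total % 10, total // 10
        answer.insert(0, digit)
        lines.append(
            f"Take {x} from FN and {y} from SN with carry {carry}: "
            f"{x}+{y}+{carry}={total}, write {digit}, carry {next_carry}. A={answer}."
        )
        carry = next_carry
    if carry:
        answer.insert(0, carry)
        lines.append(f"No digits left; the final carry {carry} is the leading digit. A={answer}.")
    lines.append(f"The answer is {int(''.join(map(str, answer)))}.")
    return "\n".join(lines)


def algorithmic_prompt(examples: list[tuple[int, int]], a: int, b: int) -> str:
    """Put fully worked traces in front of a new, unsolved problem."""
    demos = "\n\n".join(addition_trace(x, y) for x, y in examples)
    return f"{demos}\n\nProblem: {a}+{b}=\n"


print(addition_trace(128, 367))
```

A model shown one or more traces like this is expected to continue the final, unsolved problem in exactly the same format. Nothing is left implicit, not even a carry of 0.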

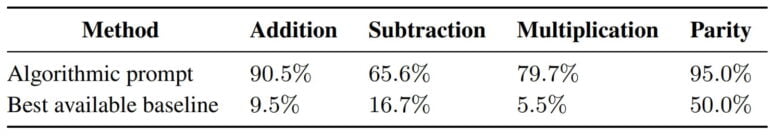

With their "algorithmic prompting" approach, the language models perform significantly better than with other prompting strategies. On long parity, addition, multiplication, and subtraction in particular, the method reduces errors by up to 10x and solves problems with considerably longer inputs than other prompts can handle.
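Parity shows why spelling out every step helps. The task is to decide whether a list of 0s and 1s contains an odd or even number of 1s. This only requires carrying a single running bit through the list, but a model has to do it without losing track on long inputs. The Python sketch below generates such an explicit trace. The wording and the name `parity_trace` are illustrative assumptions, not the prompt text used in the paper.

```python
def parity_trace(bits: list[int]) -> str:
    """Return an explicit, one-step-per-element trace of the parity of `bits`.

    Illustrative format only; not the exact wording of the paper's prompts.
    """
    lines = [f"Input: {bits}", "Start with parity 0 (even)."]
    parity = 0
    for position, bit in enumerate(bits, start=1):
        if bit not in (0, 1):
            raise ValueError("expected a list of 0s and 1s")
        new_parity = parity ^ bit  # the running parity flips on every 1
        lines.append(f"Element {position} is {bit}. {parity} XOR {bit} = {new_parity}.")
        parity = new_parity
    label = "odd" if parity else "even"
    lines.append(f"The parity is {parity} ({label}).")
    return "\n".join(lines)


print(parity_trace([1, 0, 1, 1]))
```

Each element gets its own line, so the trace for a longer input is simply longer. The rule applied at every step stays the same.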

The researchers also show that the language models can acquire multiple skills cumulatively, such as addition and subtraction. They can also combine different skills and use the learned skills as tools in more complex tasks.
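The Python sketch below illustrates skill composition. An addition routine stands in for an already learned addition skill. A long-multiplication routine then solves the harder task by delegating every sum it needs to that routine and logging the intermediate steps. This decomposition and the function names are assumptions for illustration. They show the general idea, not the paper's multiplication prompt. Inputs are assumed to be non-negative decimal strings without leading zeros.

```python
def add_digits(a: str, b: str) -> str:
    """Schoolbook addition on decimal strings; plays the role of the addition skill."""
    result = []
    carry = 0
    i, j = len(a) - 1, len(b) - 1
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += int(a[i])
            i -= 1
        if j >= 0:
            total += int(b[j])
            j -= 1
        result.append(str(total % 10))
        carry = total // 10
    return "".join(reversed(result)) or "0"


def multiply_with_addition_skill(a: str, b: str) -> tuple[str, list[str]]:
    """Long multiplication that calls add_digits for every sum it needs.

    Returns the product and a log of the intermediate steps.
    """
    steps: list[str] = []
    total = "0"
    for shift, digit in enumerate(reversed(b)):
        # Partial product a * digit, built by repeated use of the addition skill.
        partial = "0"
        for _ in range(int(digit)):
            partial = add_digits(partial, a)
        if partial != "0":
            partial += "0" * shift  # multiply by 10**shift
        steps.append(f"{a} x {digit} x 10^{shift} = {partial}")
        new_total = add_digits(total, partial)
        steps.append(f"{total} + {partial} = {new_total}")
        total = new_total
    return total, steps


product, log = multiply_with_addition_skill("128", "37")
print("\n".join(log))
print(product)
```

The multiplication routine never adds numbers itself. It relies entirely on the addition routine, which mirrors the idea of using one learned skill as a tool inside another.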

Algorithmic Prompting in the Age of ChatGPT

Using addition as an example, the team shows that large language models can apply an algorithm demonstrated on numbers with only five digits to numbers with as many as 19 digits. According to the researchers, this is an example of out-of-distribution generalization and a direct effect of algorithmic prompting. The method should also work with longer numbers, but for now it is limited by the context length of OpenAI's code-davinci-002 model (8,000 tokens).
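A back-of-the-envelope calculation shows why context length caps the number of digits. Suppose the worked examples take up a fixed share of the window, and the model's explanation needs roughly the same number of tokens for each digit position. Then the remaining budget divided by that per-digit cost bounds the operand length. The Python sketch below encodes this. The linear cost model, the function name and all token counts in it are made-up assumptions, not measurements from the paper or of code-davinci-002.

```python
def max_digits_that_fit(context_tokens: int, prompt_tokens: int,
                        tokens_per_digit: int, fixed_tokens: int) -> int:
    """Largest operand length whose step-by-step answer still fits in the window.

    Assumed cost model (hypothetical): the generated explanation needs
    `fixed_tokens` plus `tokens_per_digit` for every digit position.
    """
    if tokens_per_digit <= 0:
        raise ValueError("tokens_per_digit must be positive")
    remaining = context_tokens - prompt_tokens - fixed_tokens
    return max(remaining // tokens_per_digit, 0)


# Made-up numbers, for illustration only.
print(max_digits_that_fit(8_000, 2_500, 200, 100))   # 27
print(max_digits_that_fit(16_000, 2_500, 200, 100))  # 67
```

Under this simple model, the digit limit grows linearly with the context left over after the prompt. A longer window would therefore allow longer numbers without changing the algorithm.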

OpenAI's latest ChatGPT spits out correct answers to math problems without much prompt engineering. OpenAI presumably uses an external interpreter for this. So why explore more methods of prompt engineering for math?

One area with significant room for improvement is the ability of LLMs to perform complex reasoning tasks. In this realm, mathematical reasoning provides a unique challenge as a domain. It requires the ability to parse, to logically deconstruct a problem into sub-problems and recombine them, and to apply knowledge of rules, transformations, processes, and axioms.

From the paper

Methods such as algorithmic prompting could thus improve the reasoning abilities of models. Models that learn to execute an algorithm can produce consistent results and reduce hallucinations. Algorithms also generalize: "since they are input independent by nature, they are immune to OOD performance degradation when executed properly," the researchers write.

The team sees the role of context length as a key finding: it may be possible to turn a longer context into better reasoning performance by providing more detailed solution examples. "This highlights the ability to leverage long contexts (either through increasing context length or other means such as implementing recurrence or an external memory) and generate more informative rationales as promising research directions."