Can mathematical proofs and formal verification help ensure the safety and controllability of advanced AI systems?

In their new paper, Max Tegmark and Steve Omohundro outline a possible way to ensure the safe development of advanced artificial intelligence, including general artificial intelligence (AGI).

Tegmark is a physicist, cosmologist and AI researcher, and as president of the Future of Life Institute, he was one of the initiators of the open letter that in March called for at least a six-month pause in AI development. Omohundro is also a physicist and AI researcher, as well as founder and CEO of Beneficial AI Research, a startup that aims to develop useful and safe AI.

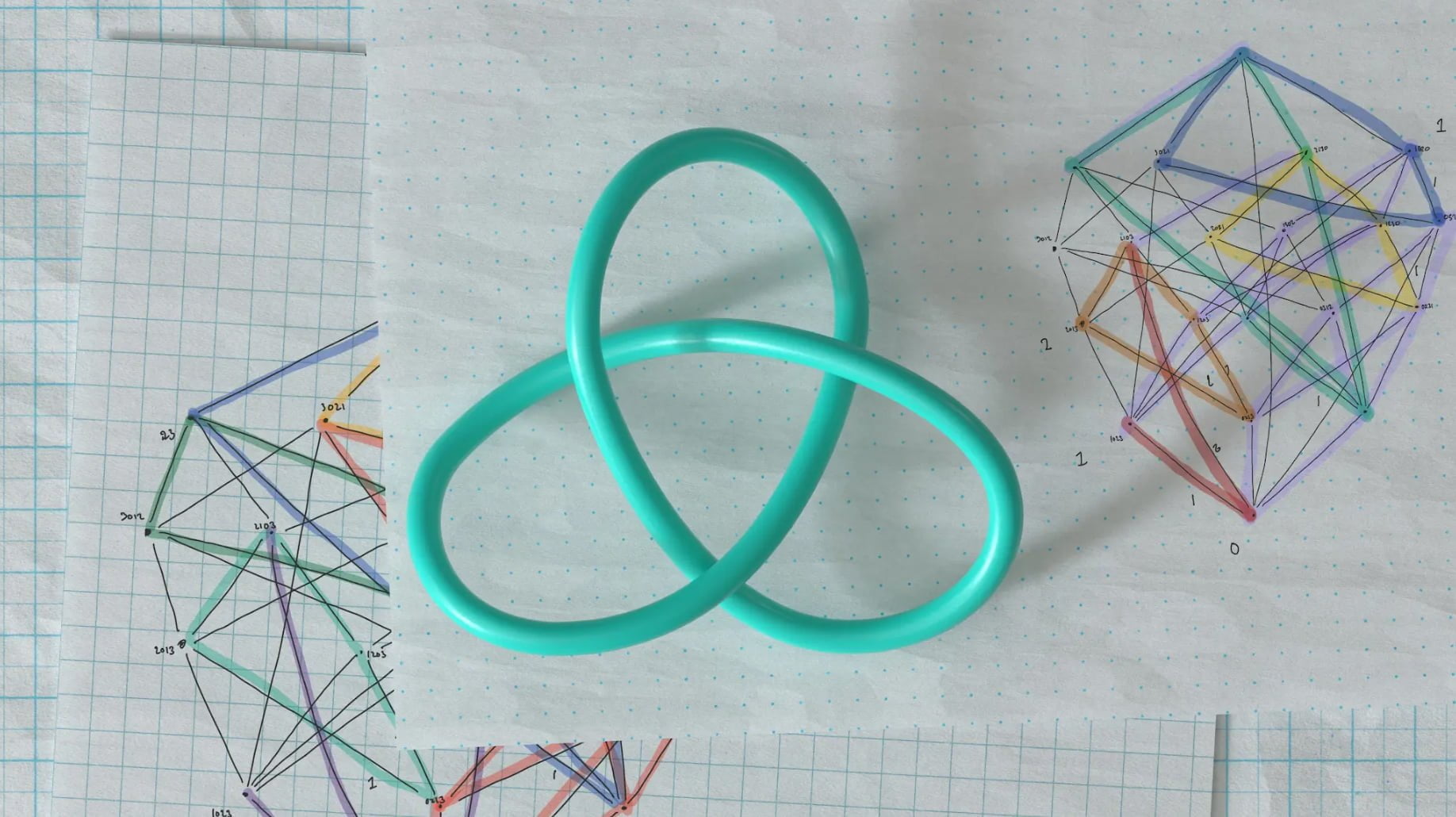

The central idea of the paper is to design AI systems so that critical behaviors are provably compliant with formal mathematical specifications that encode human values and preferences. This would force AI systems to perform only those actions that are mathematically provable safe and beneficial to humans - because at each step they would have to provide a mathematical proof of safety that could then be verified before allowing an action.

The authors argue that current approaches that focus on aligning AI goals with human values do not, by themselves, provide protection against misused AI. Instead, they say, a security mindset is needed that builds safety "both into AGIs and also into the physical, digital, and social infrastructure that they interact with."

Software and hardware verification as a safety net

The proposal has several components:

- Provably compliant system (PCS): System provably meeting certain formal specifications.,

- Provably compliant hardware (PCH): Hardware provably meeting certain formal specifications.

- Proof-carrying code (PCC): Software that on demand outputs proof that it meets certain formal specifications.

- Provable contract (PC): Secure hardware that controls action by checking compliance with formal specification.

- Provable meta-contract (PMC): Secure hardware that controls the creation and updating of other provable contracts.

Together, they would make it impossible for AI systems to violate key safety properties. The proofs would guarantee compliance even for superintelligent AI, the researchers say. Rather than individual AI models that hopefully meet our requirements, the method relies on a sequential safety net in which proofs must be provided at each step.

Existential risks should be stopped at each step

The authors use a specific bioterrorism scenario from a research paper on the four categories of catastrophic AI risks to illustrate how their approach might be applied:

A terrorist group wants to use AI to release a deadly virus over a densely populated area. It uses AI to design the DNA and shell of a pathogen, as well as the steps to produce it. He hires a chemical lab to synthesize the DNA and incorporate it into the protein shell. It uses AI-controlled drones to spread the virus, and AI on social media to spread its message after the attack.

With the proposed approach to provably secure systems, such an attack could be prevented at every stage: Biochemical design AIs would not synthesize dangerous designs, GPUs would not run insecure AI programs, chip factories would not sell GPUs without security verification, DNA synthesis machines would not operate without security verification, drone control systems would not allow drone flights without security verification, and social bots would not manipulate media.

Researchers see technical obstacles but expect progress

The authors acknowledge that to fully realize this vision, significant technical obstacles must be overcome. Machine learning would likely be required to automate the discovery of compliant algorithms and the corresponding proofs. Recent advances in machine learning for automated theorem proving give reason for optimism about rapid progress, the researchers say.

But even apart from considerations such as how to formally specify "don't let humanity go extinct," there are still a number of unsolved but simple and very well-specified challenges, the solution of which would already bring major benefits to cybersecurity, blockchain, privacy, and critical infrastructure in the medium term, they said.