Japanese startup Sakana AI explores time-based thinking with brain-inspired AI model

Sakana AI, a Tokyo-based startup, has introduced a new kind of AI system designed to mimic how the brain processes time.

The company's new model, called the Continuous Thought Machine (CTM), takes a different approach from conventional language models by focusing on how synthetic neurons synchronize over time, rather than treating input as a single static snapshot.

Instead of traditional activation functions, CTM uses what Sakana calls neuron-level models (NLMs), which track a rolling history of past activations. These histories shape how neurons behave over time, with synchronization between them forming the model's core internal representation, a design inspired by patterns found in the biological brain.
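As a rough illustration of the idea, the sketch below gives a single neuron its own weights over a fixed-length window of its recent inputs. The class name, the window length, the single linear layer, and the tanh squashing are all assumptions made for illustration; the article does not describe Sakana's NLMs at this level of detail.

```python
import math
from collections import deque


class NeuronLevelModel:
    """Hypothetical per-neuron model: private weights over the neuron's own history."""

    def __init__(self, weights, bias=0.0):
        self.weights = list(weights)
        self.bias = bias
        # Rolling window, zero-filled at the start; the oldest entry
        # drops out automatically once a new one is appended.
        self.history = deque([0.0] * len(self.weights), maxlen=len(self.weights))

    def step(self, pre_activation):
        """Record the newest input and compute the neuron's next state."""
        self.history.append(pre_activation)
        total = self.bias + sum(w * h for w, h in zip(self.weights, self.history))
        return math.tanh(total)


# Usage: a neuron that weights its oldest remembered input most heavily.
neuron = NeuronLevelModel(weights=[0.8, 0.1, 0.1])
states = [neuron.step(x) for x in (1.0, -1.0, 0.5, 0.5)]
```

The point of the sketch is that the output depends on the whole window, so two neurons fed the same current input can still respond differently if their histories differ.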

When Sakana launched in 2023, the company said it aimed to build AI systems inspired by nature. CTM appears to be one of the first major results. Co-founder Llion Jones was one of the original authors of the Transformer architecture, which today underpins nearly every major generative AI model.

While earlier systems used techniques like budget forcing, which intervenes during decoding to lengthen or cut short a model's reasoning, CTM proposes an entirely new architecture. It's part of a growing class of reasoning models that rely on heavier computation during inference compared to standard LLMs.

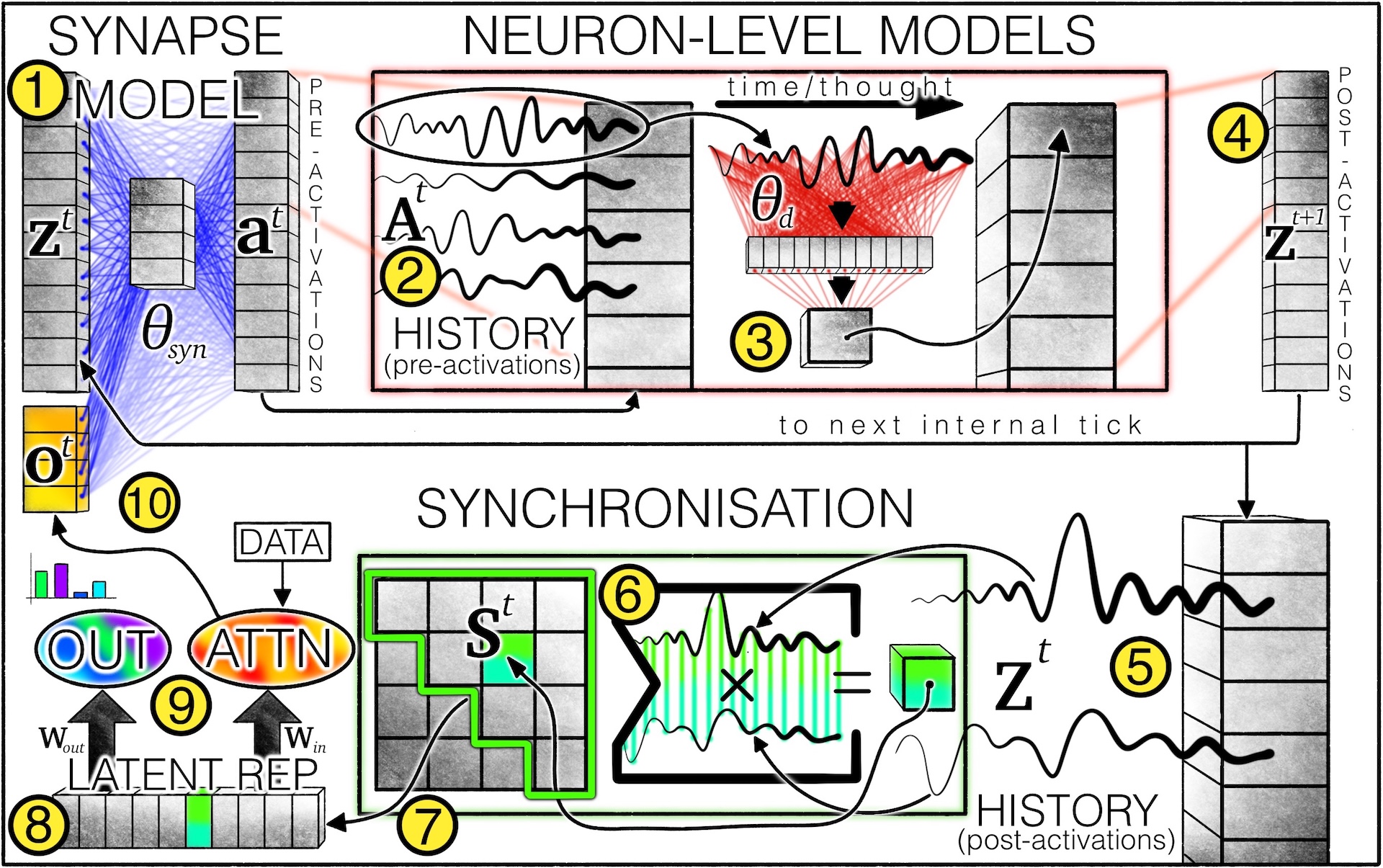

A step-by-step thinking loop

CTM introduces an internal concept of time—what the researchers call "internal ticks"—decoupled from external input. This lets the model take multiple internal steps when solving a problem, rather than jumping straight to a final answer in a single pass.

Each step begins with a "synapse model" that processes current neuron states along with external input to generate pre-activations. Every neuron keeps a running history of these pre-activations, which are then used to compute updated states, or post-activations, in the next phase.
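The flow of a single internal tick might be sketched as follows. This is a toy version under stated assumptions: the synapse model is reduced to one linear layer, and the per-neuron update is a placeholder (tanh of the mean of the history) standing in for whatever the real neuron-level models compute. All function names are hypothetical.

```python
import math
from collections import deque


def make_histories(num_neurons, length):
    """One rolling pre-activation history per neuron (hypothetical helper)."""
    return [deque(maxlen=length) for _ in range(num_neurons)]


def synapse_model(states, external_input, weights):
    """Toy synapse model: a linear layer over neuron states plus external input."""
    combined = list(states) + list(external_input)
    return [sum(w * x for w, x in zip(row, combined)) for row in weights]


def run_tick(states, external_input, weights, histories):
    """One internal tick: pre-activations -> histories -> post-activations."""
    pre_activations = synapse_model(states, external_input, weights)
    post_activations = []
    for history, pre in zip(histories, pre_activations):
        history.append(pre)  # each neuron keeps its own running history
        # Placeholder for a neuron-level model: squash the mean of the history.
        post_activations.append(math.tanh(sum(history) / len(history)))
    return post_activations


# Usage: two neurons, one external input feature, three internal ticks.
weights = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
histories = make_histories(num_neurons=2, length=3)
states = [0.5, -0.5]
for _ in range(3):
    states = run_tick(states, [2.0], weights, histories)
```

Note that the external input stays fixed across the three ticks in the usage example; only the internal state changes, which is what decoupling internal time from the input amounts to.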

These neuron states accumulate over time and are analyzed for synchronization. This time-based synchronization becomes the model's key internal signal for driving attention and generating predictions.
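One simple way to quantify synchronization, assumed here purely for illustration, is the dot product between two neurons' state traces over the ticks so far. The article does not give Sakana's exact measure, so the function name and the choice of an unnormalized dot product are hypothetical.

```python
def synchronization_matrix(traces):
    """Pairwise dot products between neurons' state traces.

    traces[i] holds neuron i's states across internal ticks. A large
    positive entry means two neurons rose and fell together; a large
    negative entry means they moved in opposition.
    """
    n = len(traces)
    return [
        [sum(a * b for a, b in zip(traces[i], traces[j])) for j in range(n)]
        for i in range(n)
    ]


# Usage: neurons 0 and 1 move together, neuron 2 moves against them.
traces = [
    [1.0, -1.0, 1.0],
    [1.0, -1.0, 1.0],
    [-1.0, 1.0, -1.0],
]
sync = synchronization_matrix(traces)
```

Because the measure is computed over the whole trace, it changes gradually as ticks accumulate rather than reflecting only the latest state.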

CTM also includes an attention mechanism to focus on the most relevant parts of the input. The updated neuron states and selected input features feed back into the loop to trigger the next internal tick.
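The attention step could look something like standard scaled dot-product attention, with the query derived from the model's internal state. That choice is an assumption; the article only says an attention mechanism selects relevant input features. The function name and the toy vectors below are hypothetical.

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention for a single query (pure Python sketch)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    # Numerically stable softmax over the scores.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return output, weights


# Usage: the query matches the first input feature far better than the second.
query = [1.0, 0.0]
keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
selected, attention_weights = attend(query, keys, values)
```

In a loop like the one described above, `selected` would be the input features passed into the next internal tick.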

In initial tests, CTM was applied to image classification on ImageNet 1K. The model analyzed different regions of each image across multiple steps and achieved 72.47% top-1 accuracy and 89.89% top-5—not cutting-edge, but respectable. Sakana says performance was not the primary focus.

CTM also adapts its processing depth dynamically. For simple tasks, it can stop early; for harder ones, it continues reasoning longer. This behavior emerges naturally from the architecture, with no special loss functions or stopping criteria required.
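To make "stopping early" concrete, the sketch below assumes the model emits a class distribution at every internal tick and that a caller measures certainty as one minus normalized entropy. Both the certainty formula and the threshold are hypothetical choices for observing the behavior from outside, not a stopping rule the article attributes to the architecture.

```python
import math


def certainty(probs):
    """1 minus normalized entropy: 0 for a uniform guess, 1 for a one-hot one."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 1.0 - entropy / math.log(len(probs))


def first_confident_tick(per_tick_probs, threshold=0.8):
    """Index of the first tick whose prediction clears the threshold.

    Falls back to the final tick if no prediction is certain enough.
    """
    for tick, probs in enumerate(per_tick_probs):
        if certainty(probs) >= threshold:
            return tick
    return len(per_tick_probs) - 1


# Usage: predictions sharpen over three ticks on a four-class problem.
per_tick_probs = [
    [0.25, 0.25, 0.25, 0.25],
    [0.70, 0.10, 0.10, 0.10],
    [0.97, 0.01, 0.01, 0.01],
]
stop_at = first_confident_tick(per_tick_probs)
```

An easy input would clear the threshold on an early tick, while a hard one would run to the end of the tick budget.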

Synchronization as memory

The model's ability to reason over time hinges on synchronization between neurons. These patterns, derived from the neurons' activation history, guide both attention and prediction. The result is a system capable of integrating information over multiple timescales, including short-term reactions to new input and longer-term pattern recognition.

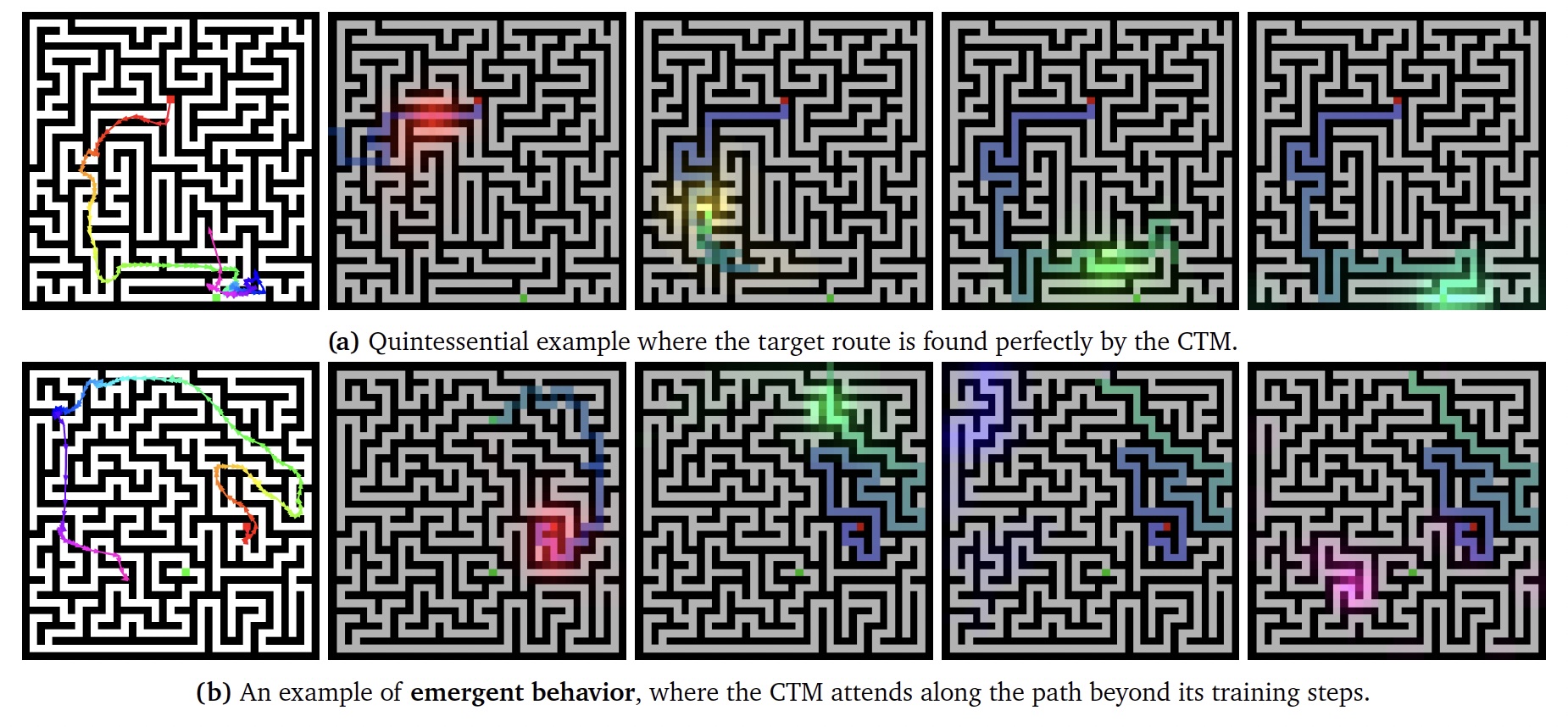

In one experiment, CTM was tested on maze navigation. The model appeared to plan its path step-by-step, even managing to partially solve larger and more complex mazes it hadn't seen during training. Sakana released an interactive demo that shows the model navigating 39×39 mazes in up to 150 steps.

The researchers say this behavior wasn't hand-coded; it emerged from the model's architecture and training process.

How it stacks up

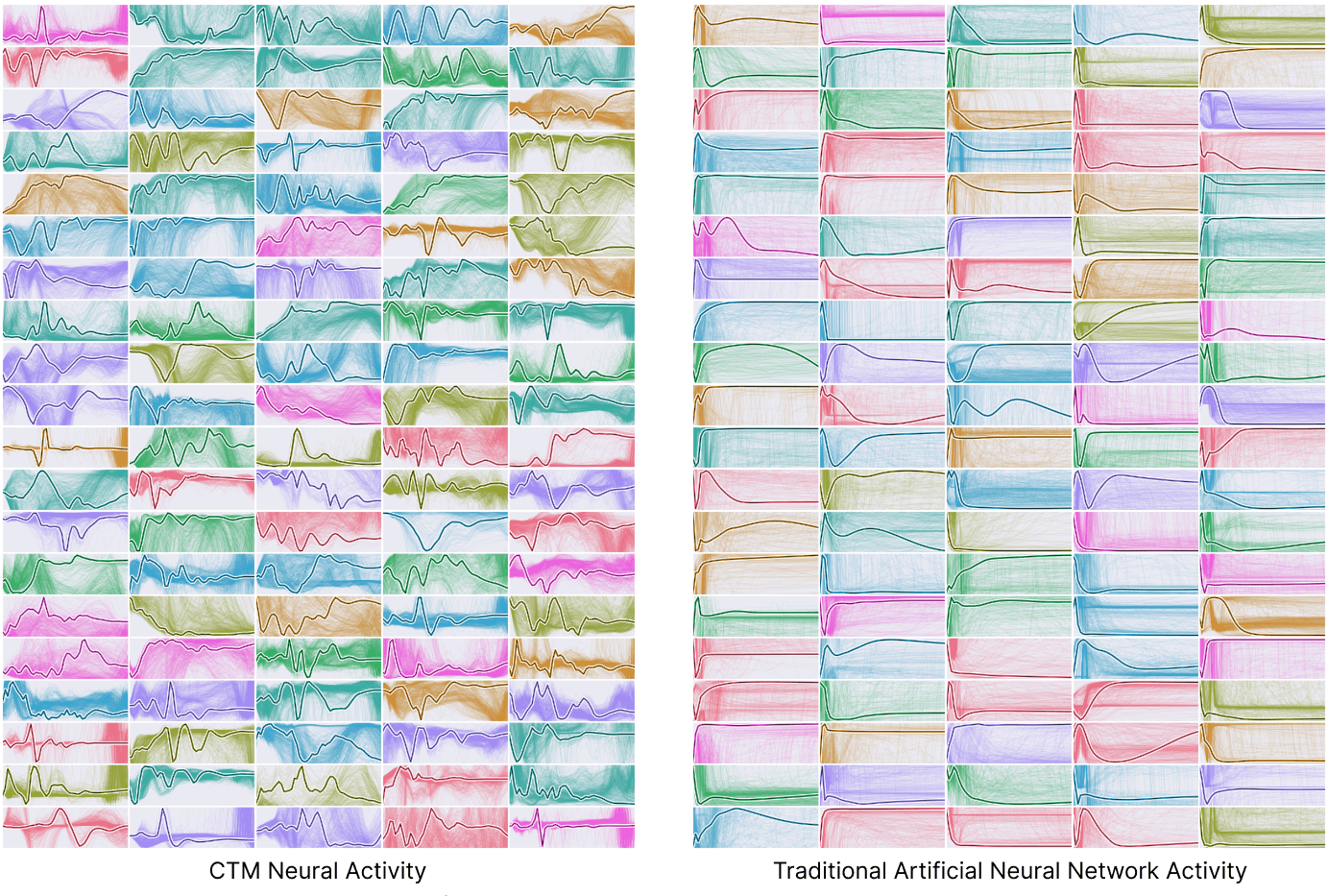

To benchmark CTM, the team ran tests against Long Short-Term Memory networks (LSTMs), which are widely used for sequence processing, and simple feedforward networks, which handle input in a single pass.

In tasks like sorting number sequences and computing parities, CTM learned faster and more reliably than both baselines. Its neuron activity was also noticeably more complex and varied. Whether that complexity leads to better performance in practical applications remains an open question.
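For readers unfamiliar with parity tasks, the target can be stated in a few lines. The article does not specify the exact task format, so this sketch assumes a sequence of 0/1 bits and a target equal to the parity of each prefix; the function name is hypothetical.

```python
from itertools import accumulate
from operator import xor


def prefix_parities(bits):
    """Parity (XOR) of every prefix of a 0/1 sequence."""
    return list(accumulate(bits, xor))


# Usage: the last element is the parity of the whole sequence.
targets = prefix_parities([1, 0, 1, 1])
```

The task is a common probe for sequential reasoning because flipping any single bit flips the final answer, so the whole sequence has to be tracked.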

On the CIFAR-10 dataset—60,000 images across ten categories—CTM slightly outperformed the other models. Its predictions also aligned more closely with how humans tend to classify images. The team ran similar tests on CIFAR-100, which has 100 categories, and found that while larger models produced more diverse neuron patterns, increasing the number of neurons didn't always improve accuracy.

Inspired by biology, constrained by hardware

CTM isn't meant to perfectly replicate the brain, but it borrows ideas from neuroscience, particularly the concept of time-based synchronization. While real neurons don't have access to their own activation history, the researchers say the model is more about functional inspiration than strict biological realism.

Still, there are trade-offs. Because CTM runs its internal ticks sequentially, with each tick depending on the one before, training can't be easily parallelized, which slows things down. It also requires significantly more parameters than traditional models, making it more computationally demanding. Whether the added complexity is worth it remains to be seen.

Sakana has open-sourced the code and model checkpoints, and hopes CTM will serve as a foundation for future research into biologically inspired AI systems.
