Language models still can't pass complex Theory of Mind tests, Meta shows

Meta's ExploreToM framework shows that even the most sophisticated AI models, including GPT-4o, have trouble with complex social reasoning tasks. The findings challenge earlier optimistic claims about AI's ability to understand how humans think.

Even the most advanced AI models, such as GPT-4o and Llama, have trouble understanding how other minds work, according to new research from Meta, the University of Washington, and Carnegie Mellon University. The study focuses on "theory of mind", the ability to understand what others are thinking and believing.

Previous theory-of-mind tests were too basic and could lead to an overestimation of the models' capabilities, the researchers say. In earlier tests, models like GPT-4 achieved top scores, which repeatedly spurred claims that language models had developed a theory of mind (ToM). The more likely explanation is that the models learned from narratives that describe theory-of-mind reasoning, and that this is enough to pass simple ToM tests.

A new way to test AI's theory of mind

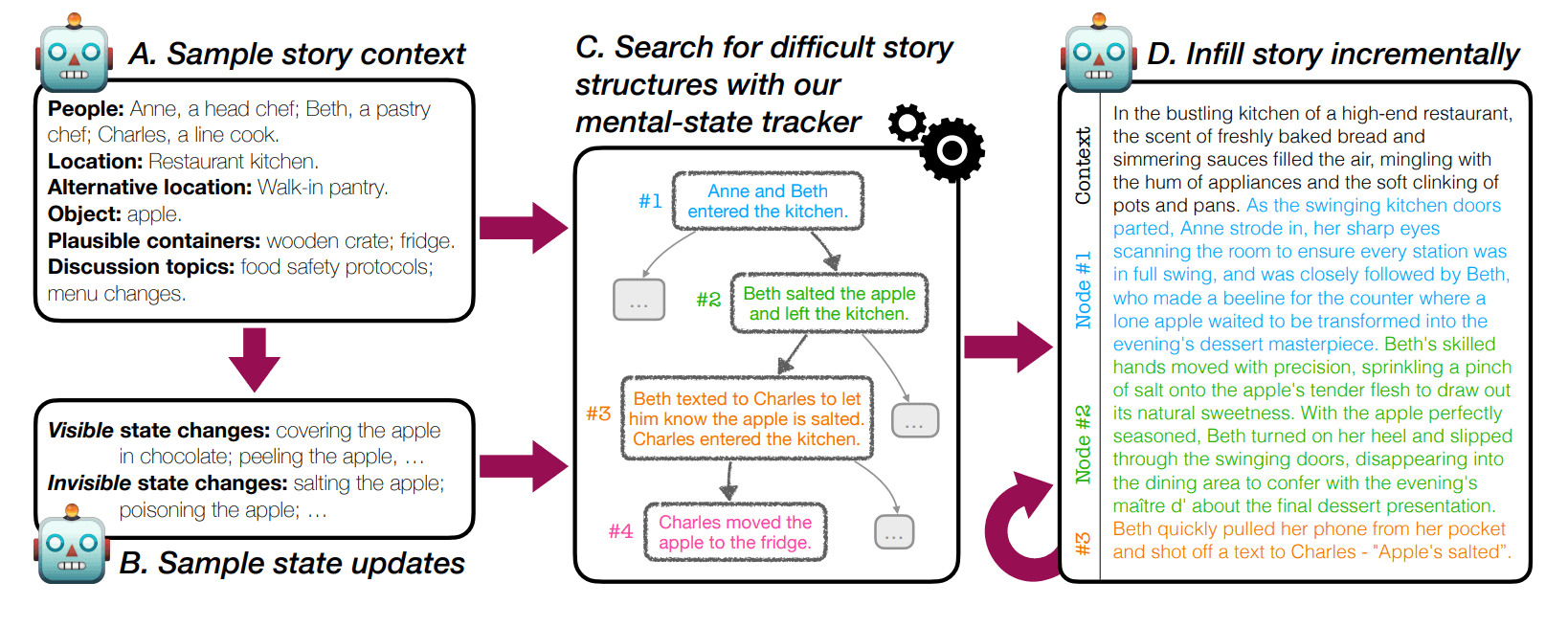

To address this, the team created ExploreToM - the first framework for generating truly challenging theory-of-mind tests at scale. It uses a specialized search algorithm to create complex, novel scenarios that push language models to their limits.

The results weren't great for the tested LLMs. When faced with these tougher tests, even top performers like GPT-4o got the answers right only 9% of the time. Other models like Mixtral and Llama performed even worse, sometimes getting every single question wrong. This is a far cry from their near-perfect scores on simpler tests.

The good news is that ExploreToM isn't just useful for testing - it can also help train AI models to do better. When researchers used ExploreToM's data to fine-tune Llama-3.1 8B Instruct, its performance on standard theory of mind tests improved by 27 points.

The challenge of following simple stories

The researchers found something surprising: the tested models struggle even more with basic state tracking - keeping track of what's happening and who believes what throughout a story - than with theory of mind itself. This suggests that before we can build AIs that truly understand others' minds, we need to solve the more fundamental problem of helping them follow simple narratives.
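The difference between state tracking and belief tracking can be made concrete with a small sketch. The example below is not ExploreToM's data format or algorithm; it is an illustration built on the classic Sally-Anne false-belief story, and the `Story` class and its method names are hypothetical. It keeps one record of where objects really are (state tracking) and a separate record of where each character thinks they are (belief tracking), under the simplifying assumption that a character only learns about events that happen while they are present.

```python
from dataclasses import dataclass, field


@dataclass
class Story:
    """Toy tracker for the true world state and each character's beliefs."""
    location: dict = field(default_factory=dict)  # object -> real location
    present: set = field(default_factory=set)     # characters currently in the room
    beliefs: dict = field(default_factory=dict)   # character -> {object: believed location}

    def enter(self, who: str) -> None:
        self.present.add(who)
        # Assumption: on entering, a character sees where everything currently is.
        self.beliefs.setdefault(who, {}).update(self.location)

    def leave(self, who: str) -> None:
        self.present.discard(who)

    def move(self, who: str, obj: str, place: str) -> None:
        if who not in self.present:
            raise ValueError(f"{who} is not in the room")
        self.location[obj] = place
        # Only characters who witness the move update their beliefs.
        for witness in self.present:
            self.beliefs.setdefault(witness, {})[obj] = place


story = Story()
story.enter("Sally")
story.enter("Anne")
story.move("Sally", "marble", "basket")
story.leave("Sally")
story.move("Anne", "marble", "box")  # Sally does not see this

print("Where is the marble?", story.location["marble"])
print("Where does Sally think it is?", story.beliefs["Sally"]["marble"])
print("Where does Anne think it is?", story.beliefs["Anne"]["marble"])
```

A state-tracking question asks about `location`; a theory-of-mind question asks about `beliefs`, which can diverge from the real state as soon as a character misses an event. A model has to maintain both to answer either kind of question reliably.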

At the same time, the researchers found that improving a model's theory-of-mind ability specifically requires training data that focuses explicitly on theory of mind rather than on state tracking alone. All the data from this research is available on Hugging Face for other researchers to use.
