Three new GPT-3-based apps show that large language models (LLMs) are much more than text generators.

Developer Dwarkesh Patel uses OpenAI's embeddings API to build semantic search for eBooks. An embedding is an information-dense representation of the meaning of a piece of text: a list of numbers in which passages with similar meanings end up close to each other.

Patel uses this representation to search book passages by meaning, for example with a scene description ("Character A and Character B meet") or with a question.

This semantic search is much more flexible than the conventional Ctrl+F function in eBook readers, which only returns passages that match the search term exactly. Patel shows this in a short demo video.
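The ranking step behind this kind of search can be sketched in a few lines. This is a minimal sketch, not Patel's code: it assumes the book passages and the query have already been turned into embedding vectors (in his app, by OpenAI's embeddings API) and uses tiny hand-made three-dimensional vectors in place of real embeddings, which have far more dimensions. Passages are ranked by cosine similarity to the query, so a passage can match without sharing a single word with it.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, passages, top_k=2):
    """Return the top_k (passage_text, score) pairs, best match first.

    `passages` is a list of (text, embedding) pairs; the embeddings are
    assumed to come from the same model that produced `query_vec`.
    """
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in passages]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings standing in for real API output.
passages = [
    ("Character A and Character B meet at a party.", [0.9, 0.1, 0.0]),
    ("The regiment marches out of town.", [0.1, 0.9, 0.2]),
    ("A letter arrives from the capital.", [0.2, 0.3, 0.9]),
]
query = [0.8, 0.2, 0.1]  # stands in for the embedding of "two characters meet"

for text, score in semantic_search(query, passages):
    print(f"{score:.3f}  {text}")
```

In a full eBook search, the book would first be split into passages whose embeddings are computed once and stored, so that each new search only needs one embedding call for the query.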

I read a lot of books for my podcast & blog.

But often I can't find the particular passage I'm looking for.

Ctrl-F doesn't work unless you know the exact phrase.

So I built search for ebooks using OpenAI's embeddings API.

Link below to use.

Works surprisingly well! pic.twitter.com/UFDjDhZ507

— Dwarkesh Patel (@dwarkesh_sp) October 30, 2022

Patel offers a demo version of his eBook embeddings search as a Google Colab notebook.

Natural language prompts for Google Sheets

Developer Shubhro Saha demonstrates another use case for GPT-3: he connects the API to Google Sheets. With natural-language prompts in the table columns, he can hand Sheets tasks that would otherwise require writing formulas or code, such as extracting a zip code from an address line. All it takes is the question "What is the zip code of this address?"

GPT-3 can also write a result directly into a new column, based on the contents of several other columns. For example, it can compose the text of a thank-you card from a name and a short list of things to mention.
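How a spreadsheet function like this might combine a plain-language instruction with cell contents into a single prompt can be sketched as follows. This is a hypothetical illustration, not Saha's implementation: the function name `build_cell_prompt` and the prompt layout are assumptions, and the step that sends the prompt to GPT-3 and writes the completion back into a cell is left out.

```python
def build_cell_prompt(instruction: str, *cells: str) -> str:
    """Combine a natural-language instruction with the contents of one or
    more cells into a single prompt string (hypothetical layout)."""
    # Skip empty cells so blank columns don't add stray lines to the prompt.
    inputs = "\n".join(cell.strip() for cell in cells if cell.strip())
    return f"{instruction.strip()}\n\n{inputs}\n\nAnswer:"


# One-cell task: extract a zip code from an address line.
zip_prompt = build_cell_prompt(
    "What is the zip code of this address?",
    "1600 Amphitheatre Parkway, Mountain View, CA 94043",
)

# Multi-cell task: draft a thank-you card from a name and a list of topics.
card_prompt = build_cell_prompt(
    "Write a short thank-you card to this person, mentioning these things.",
    "Name: Alex",
    "Mention: the birthday gift, the dinner last week",
)
print(zip_prompt)
print(card_prompt)
```

The same instruction can be reused down a whole column, with only the cell contents changing from row to row.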

This weekend I built =GPT3(), a way to run GPT-3 prompts in Google Sheets.

It's incredible how tasks that are hard or impossible to do w/ regular formulas become trivial.

For example: sanitize data, write thank you cards, summarize product reviews, categorize feedback... pic.twitter.com/4fXOTpn2vz

— Shubhro Saha (@shubroski) October 31, 2022

However, Saha's example also shows the biggest weakness of large language models besides social and cultural biases: they are still too unreliable for tasks where precision is the top priority.

In the zip code example mentioned above, for instance, even the largest GPT-3 model, "text-davinci-002", fails in some cases and hallucinates incorrect numbers into the columns. Purpose-built plugins or regular Google Sheets formulas are more reliable in this scenario.
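For comparison, the kind of conventional, deterministic code that handles the zip code task reliably can be sketched with a regular expression. This is a minimal sketch that assumes US addresses with five-digit zip codes, optionally in ZIP+4 form; it is not taken from any of the tools mentioned here. It returns nothing rather than guessing when no zip code is present.

```python
import re
from typing import Optional

# Five digits, optionally followed by "-" and four more (ZIP+4),
# not embedded in a longer run of digits.
ZIP_PATTERN = re.compile(r"(?<!\d)(\d{5})(?:-\d{4})?(?!\d)")


def extract_zip(address: str) -> Optional[str]:
    """Return the last five-digit zip code in `address`, or None."""
    matches = ZIP_PATTERN.findall(address)
    # The zip code normally ends a US address line, so prefer the last match;
    # this keeps a five-digit house number from being picked up instead.
    return matches[-1] if matches else None


print(extract_zip("1600 Amphitheatre Parkway, Mountain View, CA 94043"))
print(extract_zip("12345 Main St, Springfield, IL 62704-1234"))
print(extract_zip("No zip code here"))
```

Unlike a language model, this gives the same answer every time, but it only handles the address formats it was written for.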

Good catch.

It's likely because the original video's data sanitization formula used 'text-curie-001', which is a smaller model.

Here it is with the formula explicitly set to a larger model, 'text-davinci-002'.

Seems to work better: pic.twitter.com/EtvZyJVgY3

— Shubhro Saha (@shubroski) October 31, 2022

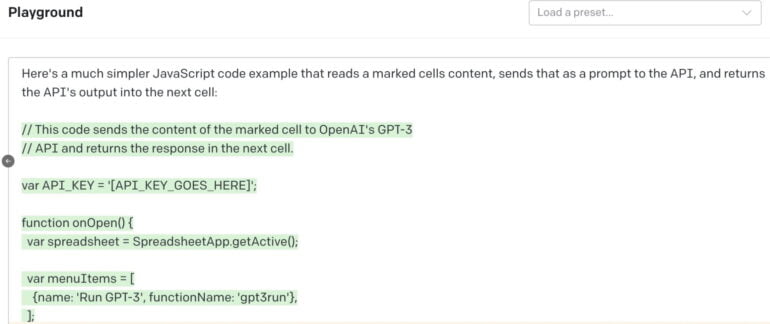

If you are interested in Saha's "GPT3()" software, you can express your interest here. An alternative is this pre-filled Google Doc by Fabian Stelzer, which contains the JavaScript code that connects the GPT-3 API to Google Sheets. Stelzer says he can't program and had GPT-3 generate the connection code as well.

Zahid Khawaja also draws on the language model's programming abilities for his "ToolBot," which turns a plain-English description into a prototype app based on GPT-3.

A user describes an app idea in a prompt, and ToolBot generates a simple user interface from it: a text input field whose contents are then processed according to the app's purpose.
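The two-step flow of describing a tool once and then feeding it inputs can be sketched as a prompt template. This is a hypothetical illustration, not Khawaja's code: the `Tool` class and the template wording are assumptions, and the actual GPT-3 calls (for generating the tool and for running it) are left out. The app description fixes the task, and each input typed into the text field is slotted into the same prompt.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    """A hypothetical generated tool: a name plus a fixed task description."""

    name: str
    task: str  # the app idea the user described in plain English

    def prompt_for(self, user_input: str) -> str:
        # Every input from the tool's text field is framed by the same task,
        # so the model processes it in the context of the tool's purpose.
        return (
            f"You are {self.name}. {self.task}\n\n"
            f"Input: {user_input}\n"
            f"Output:"
        )


tool = Tool(
    name="a headline generator",
    task="Write a catchy headline for the product description below.",
)
print(tool.prompt_for("Noise-cancelling headphones with 30-hour battery life"))
```

Keeping the task separate from the per-use input is also what makes such a tool easy to save and share: only the name and task need to be stored.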

Introducing ToolBot 🤖 — an app that generates custom GPT-3 tools using plain English.

This is a ridiculously easy way to prototype different GPT-3 tools. It's especially useful for end users who want to explore the potential of LLMs.

If this sounds interesting, let me know. pic.twitter.com/48R1q6OoeD

— Zahid Khawaja (@chillzaza_) October 26, 2022

Khawaja says he developed ToolBot for people who want to use GPT-3 in applications but are not familiar with building user interfaces or with prompt engineering. Users can save the tools they create and share them via a link.