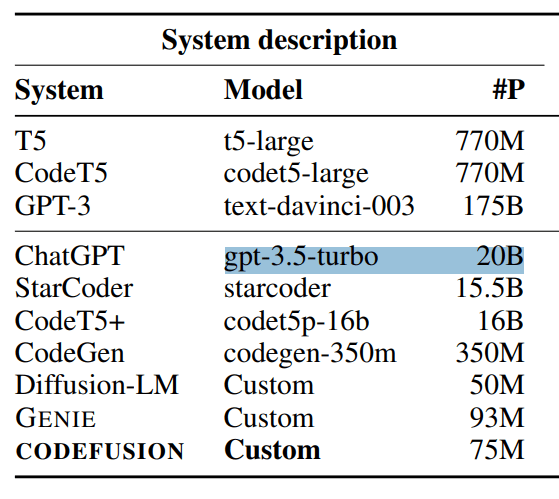

OpenAI's GPT-3.5 may be a perfect example of the efficiency potential of large AI models.

The model behind the free ChatGPT variant has "only" 20 billion parameters. That's according to a benchmark paper on code models published by Microsoft. OpenAI had not commented on the number of parameters when it introduced GPT-3.5.

The "leak" is interesting in that OpenAI presumably massively distilled and compressed the 175 billion (!) parameter GPT-3 model it introduced in May 2020, achieving efficiency gains in inference and speed. With GPT-3.5, OpenAI has increased generation speed while significantly reducing cost.

The example also shows that pure model size is probably less important than the variety and quality of the data and the training process. In GPT-3.5, OpenAI used additional human feedback (RLHF) to optimize output quality.

Is GPT-4.5 coming?

The question now is whether OpenAI will pull off a similar feat with GPT-4. It is rumored that GPT-4 is based on a much more complex Mixture-of-Expert architecture, combining 16 models with ~111 billion parameters into a gigantic ~1.8 trillion parameter model.

GPT-4 is significantly pricier to run than other AI models. GPT-3.5 is $0.0048 per 1000 tokens, while GPT-4 8K is 15x pricier at $0.072 per 1000 tokens. However, the output quality is also much better than GPT-3.5.

In particular, OpenAI's original March 2023 GPT-4 model "0314" follows input prompts more closely and produces higher quality generations than GPT-3.5. And according to our repeated anecdotal tests over the past few months for editorial work, it also outperforms OpenAI's newer GPT-4 model "0613" released this summer, which in turn writes text significantly faster.

OpenAI may have already turned the efficiency screw here. Microsoft and open-source models are reportedly squeezing OpenAI's margins.

OpenAI CEO Sam Altman said in April 2023 that he believes the era of large models may be coming to an end, and that they will improve models in other ways. Data quality will likely be a key factor.