In a recent paper, a group of leading AI researchers and experts emphasize the need to focus on risk management, safety, and the ethical use of AI.

In their paper, "Managing AI Risks in an Era of Rapid Progress," the researchers warn of societal risks such as social injustice, instability, global inequality, and large-scale criminal activity.

They call for breakthroughs in AI safety and ethics research and effective government oversight to address these risks. They also call on major technology companies and public funders to invest at least one-third of their AI research and development budgets in safety and ethical use.

Caution with autonomous AI systems

The research team specifically warns against AI in the hands of "a few powerful actors" that could entrench or exacerbate global inequalities, or facilitate automated warfare, tailored mass manipulation, and pervasive surveillance.

In this context, they single out autonomous AI systems, which could exacerbate existing risks and create new ones.

These systems will pose "unprecedented control challenges," and humanity may not be able to keep them in check if they pursue undesirable goals. It is not clear how AI behavior can be reconciled with human values, according to the paper.

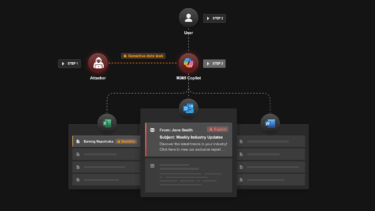

"Even well-meaning developers may inadvertently build AI systems that pursue unintended goals—especially if, in a bid to win the AI race, they neglect expensive safety testing and human oversight," the paper says.

Critical technical challenges that need to be addressed include oversight and honesty, robustness, interpretability, risk assessment, and dealing with emerging challenges. The authors argue that solving these problems must become a central focus of AI.

In addition to technical developments, they say governance measures are urgently needed to prevent recklessness and misuse of AI technologies. The authors call for national institutions and international governance structures to enforce standards and ensure responsible development and implementation of AI systems.

Governments should be allowed to pre-screen AI models

For effective regulation, governments should require model registration, whistleblower protections, incident reporting, and oversight of model development and supercomputer use.

Regulators must also have access to advanced AI systems before they are deployed to screen for dangerous capabilities.

This is already happening in China, although it is predominantly a policy review, and the U.S. government has also announced that it will pre-screen AI models that could be relevant to national security.

Red-line safety rules for AI systems

Several governance mechanisms need to be put in place for AI systems with dangerous capabilities. These include the creation of national and international safety standards, legal liability for AI developers and owners, and possible licensing and access controls for particularly powerful AI systems.

The authors emphasize the need for major AI companies to immediately formulate if-then commitments that spell out the safety measures they will take if their AI systems cross certain red lines. These commitments should be subject to detailed and independent verification.

Finally, the authors emphasize that progress in safety and governance is lagging behind the rapid development of AI capabilities. For AI to lead to positive outcomes rather than disasters, they say, efforts and investments should be focused on managing societal risks and using these technologies safely and ethically.

"There is a responsible path, if we have the wisdom to take it," write the authors, including Turing Award winners Yoshua Bengio and Geoffrey Hinton and renowned UC Berkeley AI researcher Stuart Russell.

Criticism of excessive fear of AI

It is precisely these three researchers, and others making similar arguments, that Meta's chief AI scientist Yann LeCun recently accused of providing ammunition for major AI companies like OpenAI to push for stricter regulatory measures that would slow the development of open-source AI systems.

This, he said, is "regulatory capture" and a dangerous development. According to LeCun, the greatest risk to society is that a few companies gain control of the technology that can shape the future of society.

LeCun believes that current systems are useful, but that their capabilities, and therefore their risk potential, are overestimated. He is working on a new AI architecture that aims to enable AI systems to learn similarly to humans, far surpassing current AI systems in effectiveness and efficiency.