LLM-generated questions differ from human questions, study finds

Researchers from UC Berkeley, Saudi Arabia's King Abdulaziz City for Science and Technology (KACST), and the University of Washington took a close look at how LLMs generate questions. Their findings show some clear differences between AI and human questioning patterns.

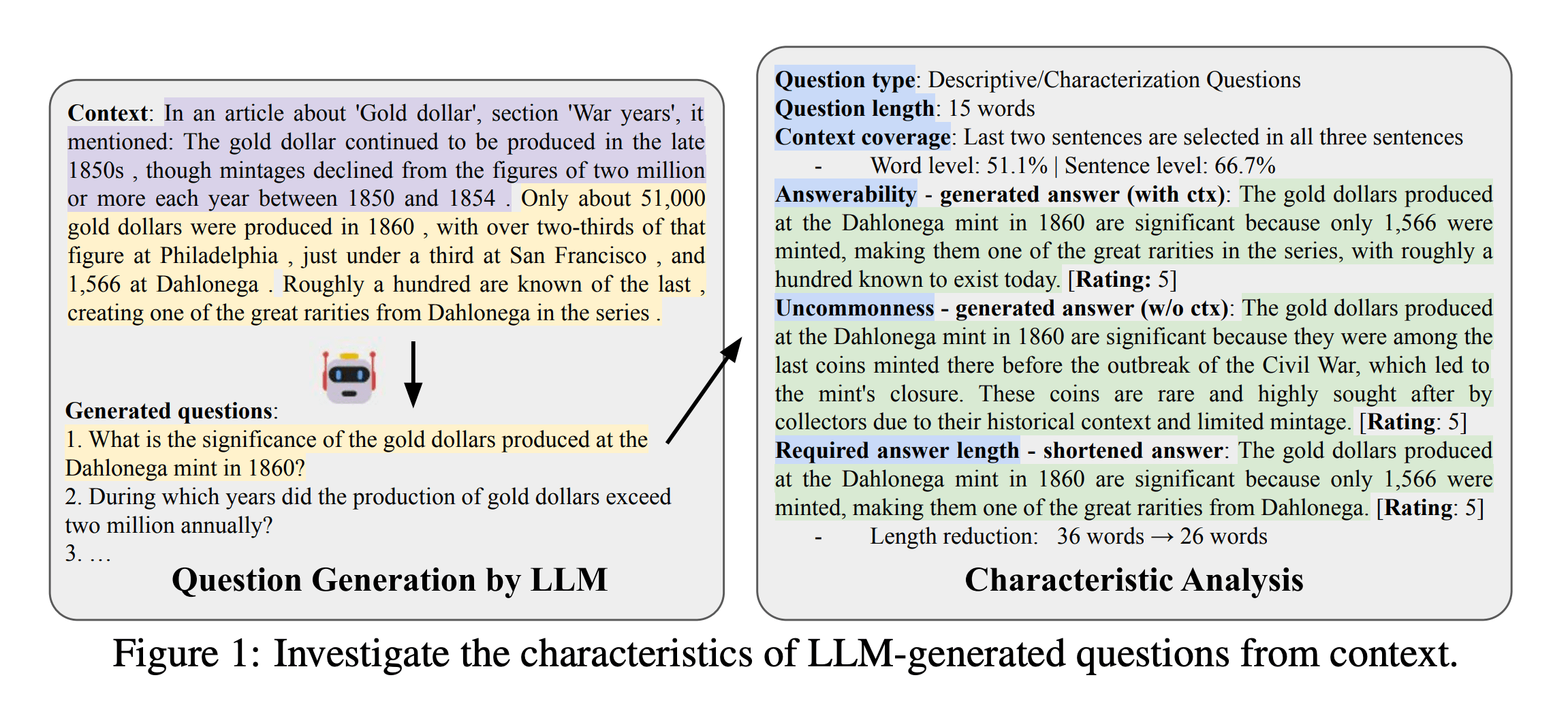

The research team started by developing categories for different types of questions, ranging from basic fact-checking to complex queries requiring detailed explanations. They then used these categories to analyze both AI-generated questions and existing datasets of human questions.

To test their theories, the team looked at how LLMs answered questions both with and without supporting context. By experimenting with different answer lengths, they could measure how much information each question really needed, giving them insight into the complexity of different question types.
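The answer-length experiment can be pictured roughly as follows. This is a minimal sketch, not the researchers' code: `answer_fn` and `judge_fn` are hypothetical stand-ins for an LLM that answers within a word budget and a check that decides whether the answer is complete, and the list of budgets is an arbitrary assumption.

```python
from typing import Callable, Optional, Sequence


def minimal_answer_length(
    question: str,
    answer_fn: Callable[[str, int], str],   # hypothetical: answers within a word budget
    judge_fn: Callable[[str, str], bool],   # hypothetical: is this answer complete?
    budgets: Sequence[int] = (1, 2, 4, 8, 16, 32, 64),
) -> Optional[int]:
    """Return the smallest word budget whose answer the judge accepts, or None."""
    for budget in sorted(budgets):
        answer = answer_fn(question, budget)
        if judge_fn(question, answer):
            return budget
    return None


def make_toy_pair(reference: str):
    """Toy stand-ins for the two model calls, used only for illustration."""
    words = reference.split()

    def answer_fn(question: str, max_words: int) -> str:
        # Pretend the model returns the reference answer cut to the budget.
        return " ".join(words[:max_words])

    def judge_fn(question: str, answer: str) -> bool:
        # Pretend the judge accepts only the full reference answer.
        return answer == reference

    return answer_fn, judge_fn


fact_fns = make_toy_pair("Paris")
explain_fns = make_toy_pair(
    "because the enzyme lowers the activation energy of the reaction"
)
fact_budget = minimal_answer_length("What is the capital of France?", *fact_fns)
explain_budget = minimal_answer_length("Why does the reaction speed up?", *explain_fns)
```

In this toy setup the fact question is satisfied at the smallest budget, while the explanation question needs a much larger one, which is the kind of gap the study reports between human and AI-generated questions.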

To compare AI-generated questions with human ones, the researchers used two different datasets of human questions based on Wikipedia articles, each created using a different method. In one dataset, questions were written for specific text passages, while in the other, researchers matched existing questions to relevant Wikipedia sections.

LLM questions cover context more evenly

The team discovered that AI models heavily favor questions that need detailed explanations: about 44% of AI-generated questions fall into this category. Humans, on the other hand, tend to ask more straightforward, fact-based questions.

These AI-generated questions typically require longer answers to be complete, even when keeping responses as concise as possible. The difference in required answer length was significant compared to human-created questions.

While humans often focus their questions on information that appears early in a text, AI models spread their questions more evenly across the entire content. This is particularly interesting because LLMs typically show positional bias when answering questions.
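One simple way to picture this kind of coverage comparison is a histogram of where in the text each question's supporting passage starts. The sketch below is an illustration under assumptions, not the paper's method: the function name, the use of word offsets, and the sample offsets are all made up for the example.

```python
from typing import Iterable, List


def position_histogram(
    offsets: Iterable[int], context_length: int, bins: int = 5
) -> List[float]:
    """Share of questions whose supporting text starts in each equal-width
    slice of the context. Offsets are 0-based word positions."""
    if context_length <= 0 or bins <= 0:
        raise ValueError("context_length and bins must be positive")
    counts = [0] * bins
    total = 0
    for offset in offsets:
        if not 0 <= offset < context_length:
            raise ValueError(f"offset {offset} lies outside the context")
        counts[offset * bins // context_length] += 1
        total += 1
    if total == 0:
        return [0.0] * bins
    return [count / total for count in counts]


# Made-up offsets for a 100-word context, purely illustrative.
front_loaded = position_histogram([0, 10, 20, 90], context_length=100)
evenly_spread = position_histogram([5, 25, 45, 65, 85], context_length=100)
```

A front-loaded histogram puts most of its mass in the first slices, while an evenly spread one is close to flat, which matches the contrast the article describes between human and AI-generated questions.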

The researchers believe their findings have practical applications. Since AI questions have such unique patterns, they could help test RAG systems or identify when AI systems are making things up. The insights could also help users write better prompts, whether they want AI to generate more human-like questions or questions with specific characteristics they're looking for.

AI-generated questions are becoming more common in commercial products. For example, Amazon's shopping assistant Rufus suggests product-related questions, while Perplexity's search engine and X's Grok chatbot use follow-up questions to help users dig deeper into topics. These tools let users select from AI-generated questions to learn more about specific posts or topics.

For anyone interested in exploring further, the research team has shared their code publicly on GitHub alongside their published paper.
