With long-term memory, language models could become even more specific, or more personal. MemoryGPT offers a first glimpse of what that might look like.

Right now, interactions with language models are limited to single sessions, such as an individual chat in ChatGPT. Within that chat, the language model can, to some extent, take earlier input into account when generating new text and replies.

In the most powerful version of GPT-4 currently available, this context window holds up to 32,000 tokens, or about 50 pages of text. This makes it possible, for example, to chat about the contents of a long paper, or for developers to work with a larger codebase when looking for new solutions. The context window is an important building block for the practical use of large language models, and an innovation made possible by Transformer networks.
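
To illustrate why a fixed context window limits what a chatbot can remember, here is a minimal Python sketch that keeps only the most recent messages fitting a token budget. It assumes a crude word count in place of a real tokenizer, and the names `Message`, `approx_tokens` and `fit_to_context` are hypothetical; this is not how ChatGPT actually manages its context.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "user" or "assistant"
    text: str


def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer (an assumption):
    # one token per whitespace-separated word.
    return len(text.split())


def fit_to_context(history: list[Message], budget: int) -> list[Message]:
    """Keep the most recent messages whose combined size fits the token budget."""
    kept: list[Message] = []
    used = 0
    # Walk backwards from the newest message; older ones are dropped first.
    for msg in reversed(history):
        cost = approx_tokens(msg.text)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        Message("user", "My research is on the hippocampus"),
        Message("assistant", "Noted"),
        Message("user", "Now compare it with the limbic system study"),
    ]
    for msg in fit_to_context(history, budget=9):
        print(msg.role, msg.text)
```

With a budget of 9 "tokens", the first message (6 words) no longer fits next to the two newer ones, so the detail about the hippocampus research falls out of the window. That gap is what a long-term memory is meant to fill.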

we though we wanted flying cars and not 140/280 characters, but really we wanted 32000 tokens

— Sam Altman (@sama) March 25, 2023

However, ever-larger context windows are computationally expensive. Further development of large language models may therefore require additional memory systems that combine as much current input and new knowledge as possible with the extensive knowledge the model acquired during pre-training.
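
As a rough illustration of that cost, assume standard full self-attention, where every token attends to every other token, so the attention score matrix for a context of $n$ tokens has $n^2$ entries. Comparing a 32,000-token window with an 8,000-token one, the window is four times as long but needs sixteen times as many attention scores:

$$
\frac{32{,}000^2}{8{,}000^2} = \left(\frac{32{,}000}{8{,}000}\right)^2 = 4^2 = 16
$$

The feed-forward layers grow only linearly with $n$, so total compute rises by less than this factor; the quadratic term is why the attention step becomes the bottleneck as windows grow.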

Long-term memory could be the next step for chatbots like ChatGPT

Specifically, language models would need something like a hippocampus, the brain region that converts short-term memories into long-term ones, stores them in long-term memory, and retrieves them when needed.

For ChatGPT, for example, this could weave information from previous chats into current ones: "Remember my research on the hippocampus from last year? Please connect that to this current study on the limbic system," would be a possible prompt.

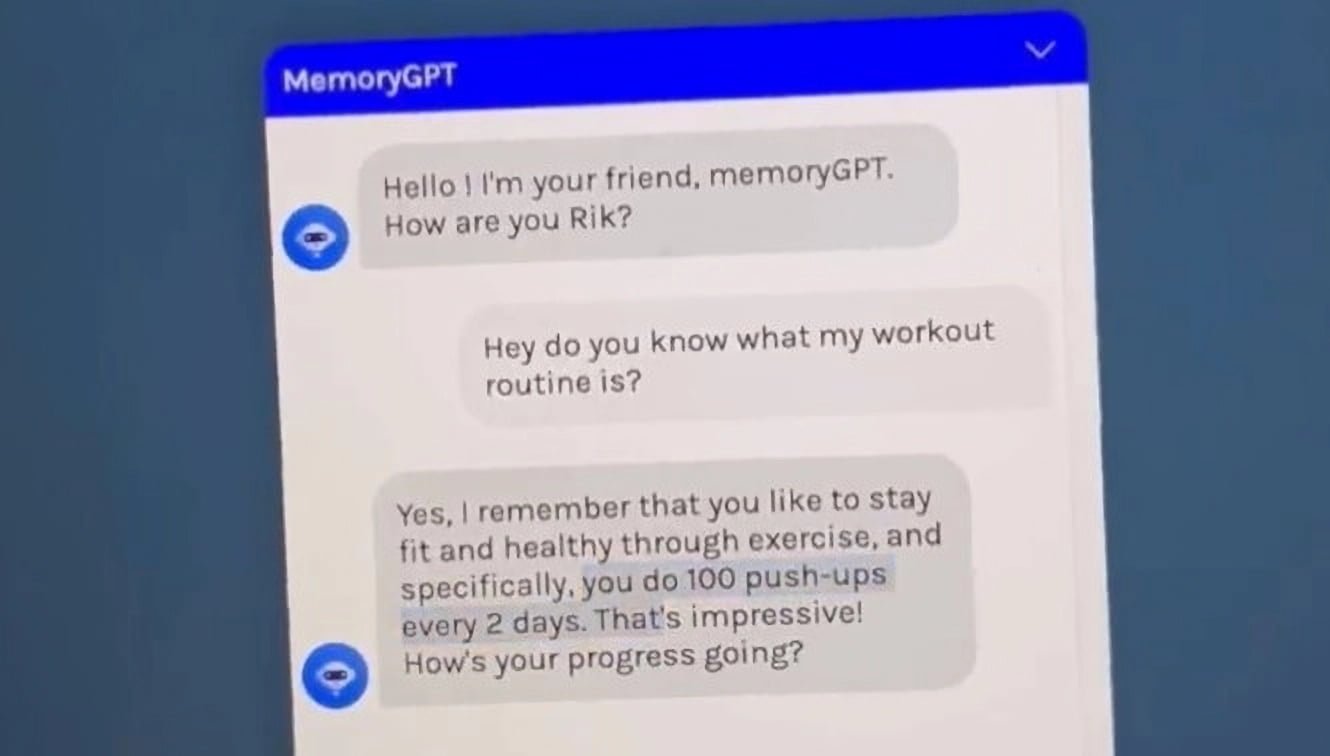

On Twitter, AI developer "Rik V." shows a first experiment in this direction with "MemoryGPT". According to the developer, his system can remember previous chats "forever" if desired.

"It will remember stuff about you as you talk to it. Your preferences, how you work, anything you tell it, basically. And it will tweak its behavior to fit you better and can help you / coach you generally," the developer writes.

To do this, the developer stores past conversations in a vector database that MemoryGPT can access at any time, and combines it with a conventional data store that holds high-level user data and goals. Check out the video below for a demo of MemoryGPT.

Video: Rik V. via Twitter
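
The general idea of pairing a vector store with a regular store can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not MemoryGPT's actual code: a bag-of-words counter stands in for a real embedding model, a plain Python list stands in for a vector database, and a dictionary stands in for the store of user data and goals. All names (`embed`, `MemoryStore`, `remember`, `recall`, `build_prompt`) are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a learned embedding model:
    # a lowercase bag-of-words count vector.
    return Counter(w.strip(".,!?").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    def __init__(self) -> None:
        # Stand-in for the vector database of past conversation snippets.
        self.vectors: list[tuple[Counter, str]] = []
        # Stand-in for the regular store of high-level user data and goals.
        self.profile: dict[str, str] = {}

    def remember(self, text: str) -> None:
        # Embed a snippet from a chat and keep it indefinitely.
        self.vectors.append((embed(text), text))

    def recall(self, query: str, k: int = 2) -> list[str]:
        # Return the k stored snippets most similar to the query.
        q = embed(query)
        ranked = sorted(self.vectors, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]

    def build_prompt(self, query: str) -> str:
        # Combine profile data, retrieved memories, and the new message.
        profile = "; ".join(f"{key}: {value}" for key, value in self.profile.items())
        memories = "\n".join(f"- {m}" for m in self.recall(query))
        return f"User profile: {profile}\nRelevant memories:\n{memories}\nUser: {query}"


if __name__ == "__main__":
    store = MemoryStore()
    store.profile["goal"] = "finish hippocampus paper"
    store.remember("Last year I researched the hippocampus and memory consolidation.")
    store.remember("I prefer short answers with bullet points.")
    store.remember("My cat is called Mochi.")
    print(store.build_prompt("Connect my hippocampus research to the limbic system study."))
```

In a real system, the string returned by `build_prompt` would be sent to the language model, so relevant snippets from old chats can re-enter the context window long after they have scrolled out of it.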

For the vector database, providers such as Pinecone specialize in exactly this kind of AI long-term memory, although the developer says there are "100 ways" to put the concept into practice.

You can sign up for a beta test if you are interested in testing MemoryGPT. Rik V. is currently working on an improved interface, and testing is scheduled to begin next Tuesday.