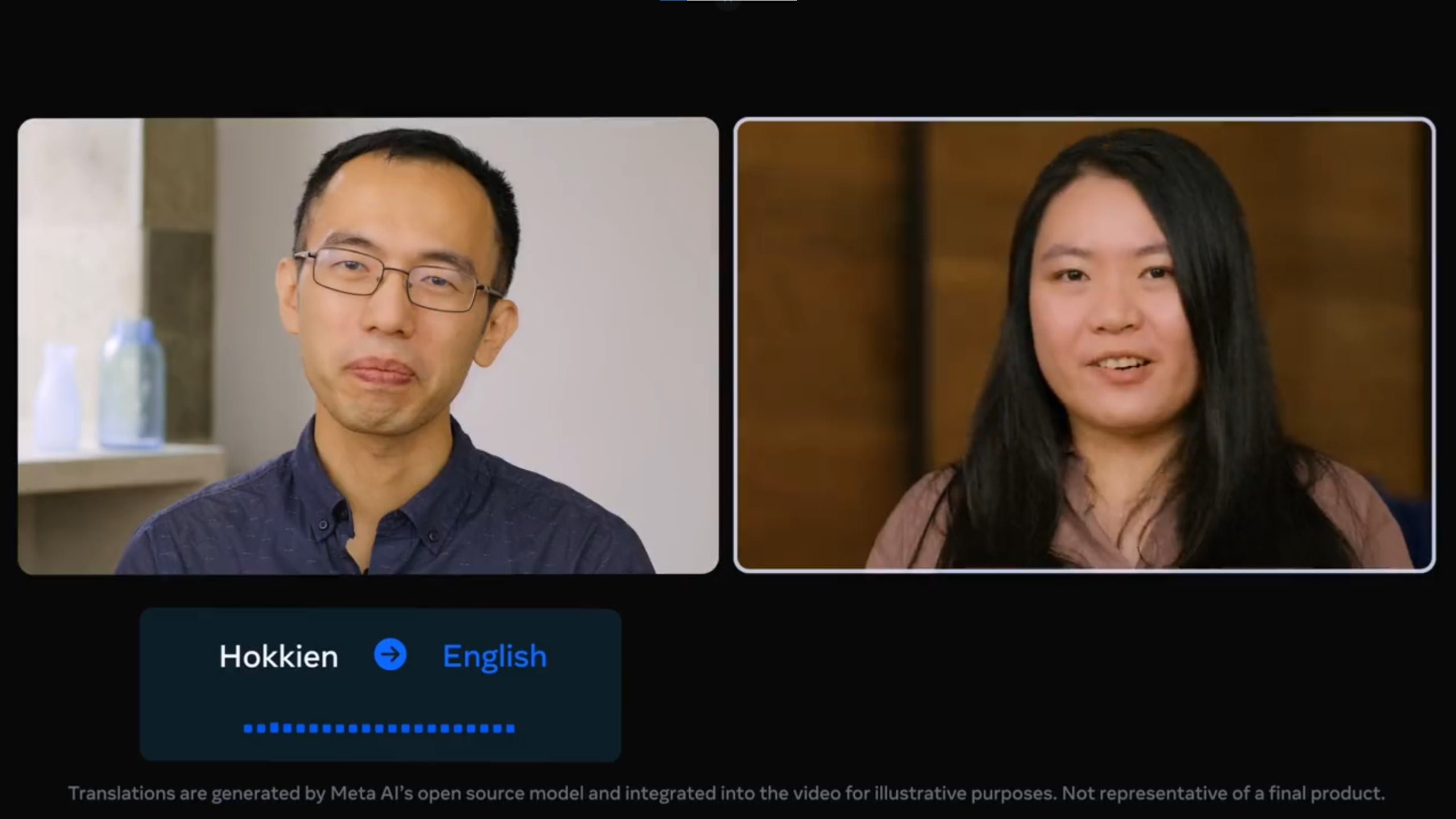

Meta wants to eliminate language barriers with the help of AI-assisted translation. A new system can translate the mainly spoken language Hokkien into English and vice versa in real time.

Meta connects people on social networks and one day, perhaps, in the Metaverse. In the process, the company has identified the language barrier as a hurdle and has been researching how to overcome it for years.

Direct speech-to-speech translation

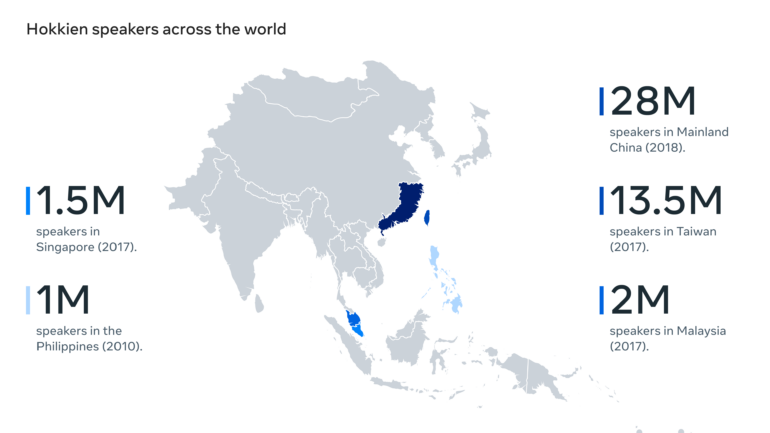

Meta is now unveiling the next step toward its grand vision of a Universal Speech Translator. Its new system can translate the low-resource, primarily spoken Taiwanese language Hokkien into English and back in real time. The system translates speech directly into speech, without taking a detour through text translation.

The main challenge, according to Meta, was the scarcity of training data. To build that data, Meta used Mandarin as a bridge language, translating spoken Hokkien into Mandarin text and then into spoken English, and vice versa. Using a resource-rich language in this way significantly improved model performance, according to Meta.

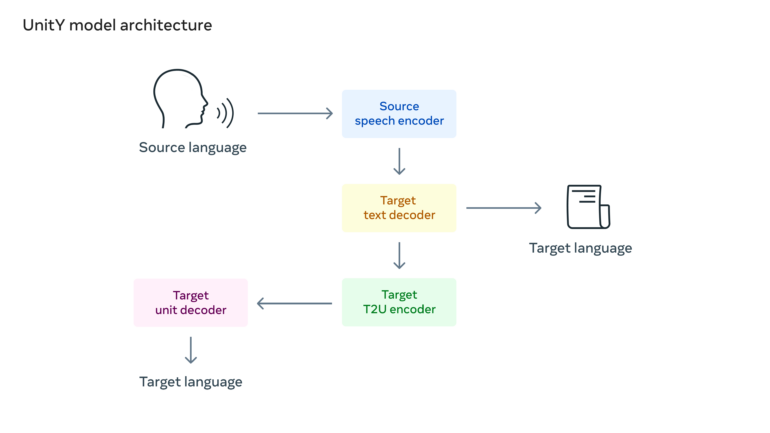

Using a pretrained speech encoder, Meta was additionally able to encode Hokkien speech as embeddings in the same semantic space as other languages, where they could then be aligned with spoken and written English. From the matched texts, Meta in turn synthesized spoken English, thus obtaining parallel Hokkien and English speech. Meta calls this process "speech mining."
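The alignment step can be pictured as a nearest-neighbor search in the shared embedding space. The following is a minimal sketch under stated assumptions, not Meta's implementation: the function names `cosine` and `mine_pairs`, the mutual-nearest-neighbor rule, the `threshold` value, and the tiny hand-written vectors are all hypothetical and stand in for real encoder outputs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def mine_pairs(src_embs, tgt_embs, threshold=0.8):
    """Return (src_index, tgt_index, score) for mutual nearest neighbors.

    A pair is kept only if each side is the other's best match and the
    similarity reaches the (assumed) threshold.
    """
    if not src_embs or not tgt_embs:
        return []
    sim = [[cosine(s, t) for t in tgt_embs] for s in src_embs]
    pairs = []
    for i, row in enumerate(sim):
        # Best target candidate for source item i.
        j = max(range(len(row)), key=row.__getitem__)
        # Best source candidate for that target item.
        i_back = max(range(len(sim)), key=lambda k: sim[k][j])
        if i_back == i and row[j] >= threshold:
            pairs.append((i, j, row[j]))
    return pairs

# Toy embeddings standing in for encoded Hokkien and English utterances.
hokkien = [[1.0, 0.0, 0.1], [0.0, 1.0, 0.0], [0.5, 0.5, 0.7]]
english = [[0.0, 0.9, 0.1], [0.9, 0.1, 0.1], [-1.0, 0.0, 0.0]]

pairs = mine_pairs(hokkien, english)
for src, tgt, score in pairs:
    print(f"Hokkien #{src} <-> English #{tgt} (similarity {score:.2f})")
```

In this toy data the third Hokkien vector has no sufficiently close English counterpart, so it stays unpaired. A real mining pipeline would make the same kind of decision at a much larger scale.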

For speech-to-speech translation, Meta used speech-to-unit translation (S2UT), an approach it had previously developed that translates speech input directly into a sequence of acoustic units. With UnitY as a two-pass decoding mechanism, the decoder generates text in a related language (Mandarin) in the first pass and acoustic units in the second pass.
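The article does not say how the acoustic units are defined. One common construction in the speech literature, assumed here purely for illustration, assigns each frame of speech features to its nearest cluster centroid and then collapses consecutive repeats. The sketch below shows that idea with made-up two-dimensional features. The names `nearest_centroid` and `frames_to_units`, the centroids, and the frames are hypothetical.

```python
def nearest_centroid(frame, centroids):
    """Index of the centroid closest to the frame (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda k: sum((a - b) ** 2 for a, b in zip(frame, centroids[k])),
    )

def frames_to_units(frames, centroids):
    """Map feature frames to discrete unit IDs and collapse consecutive repeats."""
    units = [nearest_centroid(f, centroids) for f in frames]
    # Keep a unit only when it differs from the one immediately before it.
    return [u for idx, u in enumerate(units) if idx == 0 or u != units[idx - 1]]

# Assumed toy codebook with three units and six feature frames.
centroids = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
frames = [[0.1, 0.0], [0.0, 0.1], [0.9, 1.1], [1.0, 0.9], [0.1, 0.9], [0.0, 0.1]]

units = frames_to_units(frames, centroids)
print(units)
```

A translation model of this kind predicts such unit IDs instead of text tokens, and a separate vocoder would then turn the unit sequence back into a waveform.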

According to Meta, the methods first developed for Hokkien can be applied to many other written and unwritten languages. Meta is releasing the system and a large corpus of speech-to-speech translations as open source to support the development of other translation systems. A Hokkien demo is available on Hugging Face.

(1/3) Until now, AI translation has focused mainly on written languages. Universal Speech Translator (UST) is the 1st AI-powered speech-to-speech translation system for a primarily oral language, translating Hokkien, one of many primarily spoken languages. https://t.co/onYKQ8uoKN pic.twitter.com/Iy8MRMOypQ

— Meta AI (@MetaAI) October 19, 2022

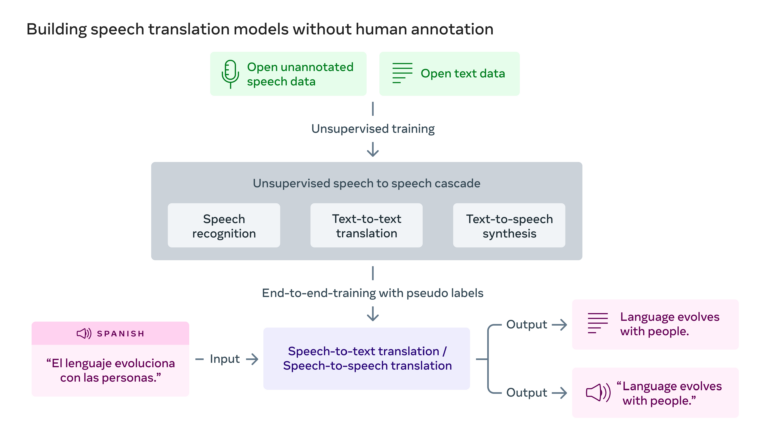

Meta has been researching AI translation for years

The Hokkien model can currently translate only one full sentence at a time. Nevertheless, Meta sees the model as a step toward a future with simultaneous translation between many languages. To achieve this, Meta relies on unsupervised or self-supervised AI training with large amounts of speech and text data, combined with speech recognition, text-to-text translation, and text-to-speech synthesis.

"Our progress in unsupervised learning demonstrates the feasibility of building high-quality speech-to-speech translation models without any human annotations," Meta writes.

For Meta CEO Mark Zuckerberg, universal translation is a "superpower that people have always dreamed of." In 2018, Meta unveiled an AI system for back-translation that was trained without supervision. In 2020, it followed with M2M-100, a system capable of translating 100 languages, and in 2021 with an evolution of that system, which scored top marks in the WMT2021 translation benchmark.

In February 2022, Meta introduced the "No Language Left Behind" project for real-time universal translation, even for rare languages. This was followed in summer 2022 by NLLB-200, a model for translating 200 languages.

All AI translation research at Meta comes together under the umbrella of the Universal Speech Translator project, which is also the company's grand vision.