Artificial intelligence trained on first-person videos could better understand our world. At Meta, AR and AI development intersect in this space.

In the run-up to the CVPR 2022 computer vision conference, Meta is releasing the "Project Aria Pilot Dataset," which contains more than seven hours of first-person video spread across 159 sequences recorded in five different locations in the United States. The videos show scenes from everyday life: doing the dishes, opening a door, cooking, or using a smartphone in the living room.

AI training for everyday life

The data is intended to help AI researchers train artificial intelligence that better understands everyday life. In practice, such an AI system could improve visual assistance systems, particularly in AR headsets. An AI that recognizes more elements in the environment could, for example, provide tips while the wearer is cooking.

Scenes from the dataset. | Video: Meta

Meta announced the first-person video collection project in October 2021 and released the Ego4D dataset, with more than 2,200 hours of first-person video footage, at the same time.

Mike Schroepfer, Meta's CTO at the time, said at the launch of the Ego4D dataset that it could be used, for example, to train an AI assistant that helps you remember where you left your keys or teaches you how to play the guitar.

Project Aria delivers particularly rich data

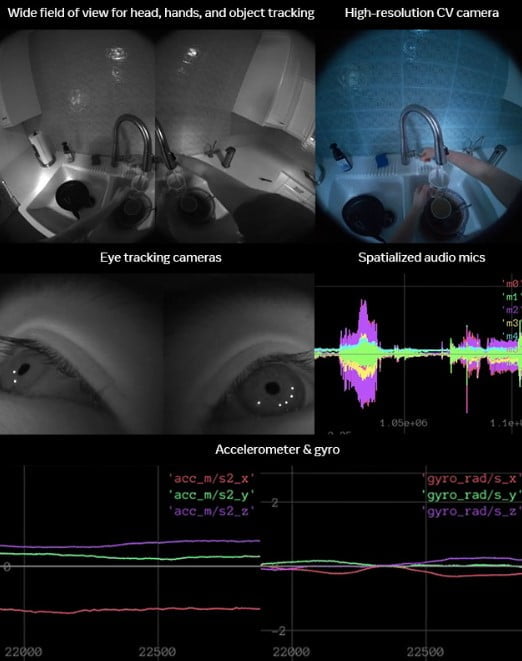

As the name suggests, the current dataset was collected with the AR glasses prototype "Project Aria." The device is a sensor prototype for future high-end AR headsets and does not have an integrated display.

With Aria, Meta primarily wants to collect data for developing software for future high-quality AR applications and to learn how the sensors in the glasses behave in everyday life. Meta first introduced Aria about two years ago.

Aria collects a variety of sensor data on top of the video, which enriches the new dataset: in addition to one color and two black-and-white cameras, the headset has integrated eye tracking, a barometer, a magnetometer, spatial sound microphones, and GPS.

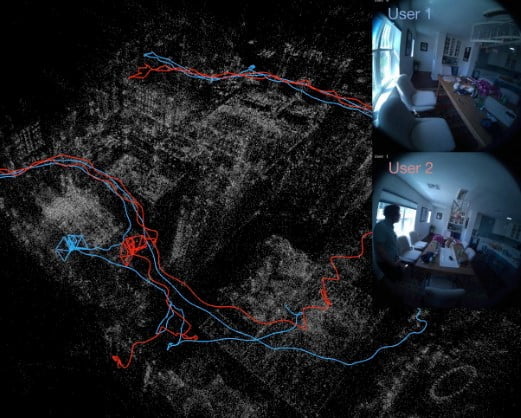

To complement this data, Meta provides further information about the environment, such as how multiple people wearing the glasses in the same household interact with each other. Speech-to-text capture also makes it possible to evaluate conversations and remarks in the context of what the cameras see.

"We believe this dataset will provide a baseline for external researchers to build and foster reproducible research on egocentric Computer Vision and AI/ML algorithms for scene perception, reconstruction and understanding," Meta writes.

In addition to these "Everyday Activities," Meta is expanding the dataset to include "Desktop Activities." Here, the company has installed a motion-capture system on a desktop to capture everyday activities such as cooking even more accurately and from different perspectives.

For more information, visit the official website for the Aria dataset, where you can also request access.