So far, VR systems have tracked only the head and hands. That could change soon: AI-based motion prediction enables realistic full-body tracking, and thus better embodiment of the avatar, based solely on sensor data from the headset and controllers.

With hand tracking for Quest, Meta has already demonstrated that AI is a foundational technology for VR and AR: a neural network trained on many hours of hand movements enables robust hand tracking even with the Quest headset's low-resolution cameras, which are not specifically optimized for hand tracking.

This is powered by AI's predictive ability: thanks to the prior knowledge acquired during training, just a few inputs from the real world are enough to translate the hands accurately into the virtual world. Capturing the hands completely in real time, on top of VR rendering, would require much more computing power.

From hand tracking to body tracking via AI prediction

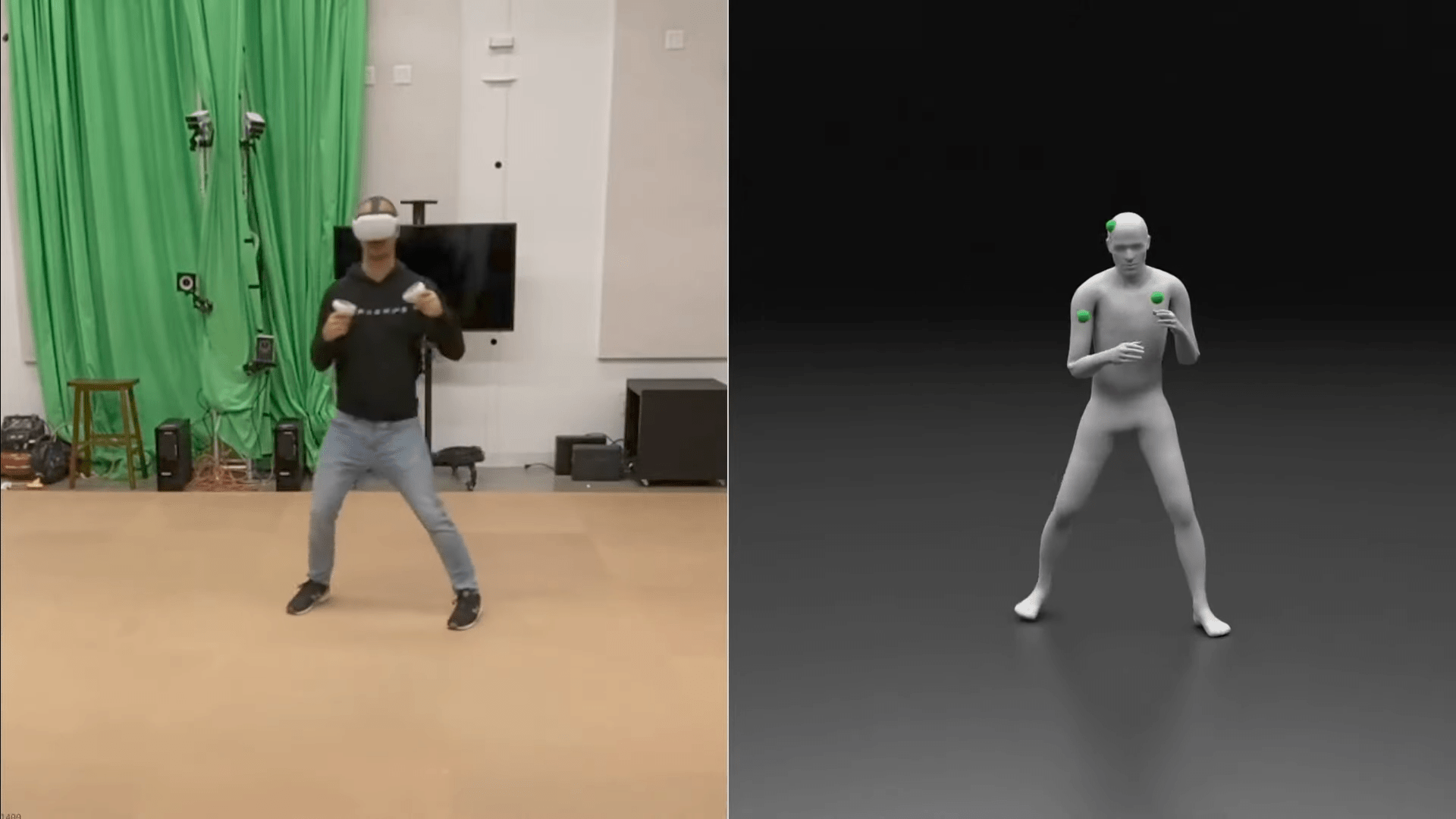

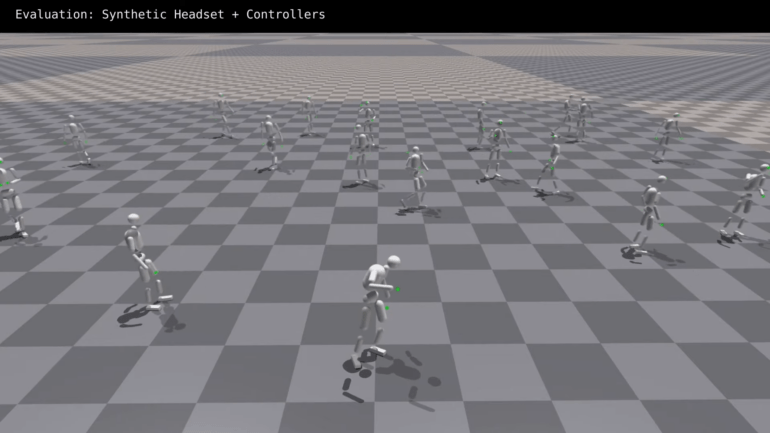

In a new project, Meta researchers are extending this principle from hand tracking to the whole body: an AI trained on previously collected tracking data produces the most plausible, physically correct simulation of virtual body movements from real movements. The resulting system, QuestSim, can realistically animate a full-body avatar using only sensor data from the headset and the two controllers.

The Meta team trained the QuestSim AI with artificially generated sensor data. For this, the researchers simulated the movements of the headset and controllers based on eight hours of motion-capture clips of 172 people. This way, they did not have to record headset and controller data alongside the body movements from scratch.
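
To illustrate the idea of deriving synthetic device data from motion capture, here is a minimal Python sketch. It is not Meta's pipeline: the joint names (`head`, `left_wrist`, `right_wrist`), the `SensorFrame` type, and the function `synthesize_sensor_tracks` are all hypothetical, and the sketch assumes that a device simply follows the position of one mocap joint, with velocity estimated by finite differences.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

# Assumption: each device is approximated by a single mocap joint.
# These joint names are hypothetical; real skeletons use their own naming.
TRACKED_JOINTS = {
    "headset": "head",
    "left_controller": "left_wrist",
    "right_controller": "right_wrist",
}


@dataclass
class SensorFrame:
    position: Vec3
    velocity: Vec3  # finite-difference estimate, in units per second


def synthesize_sensor_tracks(
    frames: List[Dict[str, Vec3]], fps: float
) -> Dict[str, List[SensorFrame]]:
    """Derive synthetic headset/controller tracks from mocap joint positions."""
    dt = 1.0 / fps
    tracks: Dict[str, List[SensorFrame]] = {d: [] for d in TRACKED_JOINTS}
    for i, frame in enumerate(frames):
        # The first frame has no predecessor, so its velocity is zero.
        prev = frames[i - 1] if i > 0 else frame
        for device, joint in TRACKED_JOINTS.items():
            pos = frame[joint]
            vel = tuple((p - q) / dt for p, q in zip(pos, prev[joint]))
            tracks[device].append(SensorFrame(pos, vel))
    return tracks


# Tiny made-up clip: the body shifts forward by 0.1 units between two frames.
clip = [
    {"head": (0.0, 1.7, 0.0), "left_wrist": (-0.3, 1.0, 0.0),
     "right_wrist": (0.3, 1.0, 0.0), "left_foot": (-0.1, 0.0, 0.0)},
    {"head": (0.0, 1.7, 0.1), "left_wrist": (-0.3, 1.0, 0.1),
     "right_wrist": (0.3, 1.0, 0.1), "left_foot": (-0.1, 0.0, 0.1)},
]
tracks = synthesize_sensor_tracks(clip, fps=10.0)
print(tracks["headset"][1])
```

Note that the leg joints in the clip never appear in the synthetic device tracks. In a setup like this, they would only serve as the ground truth that the AI learns to predict.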

The motion-capture clips included 130 minutes of walking, 110 minutes of jogging, 80 minutes of casual conversation with gestures, 90 minutes of whiteboard discussion, and 70 minutes of balancing. Training the avatars in simulation with reinforcement learning took about two days.
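
As a quick sanity check, the per-category durations can be added up and compared with the eight hours mentioned above. The dictionary below just restates the figures from the text; the variable names are chosen for illustration.

```python
# Clip durations in minutes, as listed in the article.
clip_minutes = {
    "walking": 130,
    "jogging": 110,
    "casual conversation": 80,
    "whiteboard discussion": 90,
    "balancing": 70,
}

total_minutes = sum(clip_minutes.values())
total_hours = total_minutes / 60

print(f"{total_minutes} minutes = {total_hours:g} hours")  # 480 minutes = 8 hours
```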

After training, QuestSim can recognize which movement a person is performing from real headset and controller data. Using AI prediction, it can even simulate the movements of body parts such as the legs, for which no real-time sensor data is available, because such movements were part of the motion-capture training data and were therefore learned by the AI. To keep movements plausible, the avatar is also subject to the rules of a physics simulator.
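
How reinforcement learning can teach a simulated avatar to follow motion-capture data is easiest to see in the reward signal. The sketch below shows a pose-tracking reward of the kind commonly used in physics-based character animation. Whether QuestSim uses this exact form is an assumption; the function name `tracking_reward`, the joint names, and the `sharpness` parameter are hypothetical.

```python
import math
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def tracking_reward(
    simulated: Dict[str, Vec3],
    reference: Dict[str, Vec3],
    sharpness: float = 2.0,  # hypothetical tuning parameter
) -> float:
    """Return a reward in (0, 1]; 1.0 means the simulated pose matches exactly."""
    # Mean squared distance between simulated and reference joint positions.
    mean_sq_error = sum(
        sum((s - r) ** 2 for s, r in zip(simulated[joint], reference[joint]))
        for joint in reference
    ) / len(reference)
    # The reward decays exponentially as the avatar drifts from the mocap pose.
    return math.exp(-sharpness * mean_sq_error)


# Made-up poses: the second simulated pose has a misplaced left foot.
reference_pose = {"head": (0.0, 1.7, 0.0), "left_foot": (-0.1, 0.0, 0.0)}
good_pose = {"head": (0.0, 1.7, 0.0), "left_foot": (-0.1, 0.0, 0.0)}
bad_pose = {"head": (0.0, 1.7, 0.0), "left_foot": (-0.1, 0.0, 0.5)}

print(tracking_reward(good_pose, reference_pose))
print(tracking_reward(bad_pose, reference_pose))
```

With a reward of this kind, the policy only ever observes headset and controller signals, but during training it is scored against the full mocap pose, legs included.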

The headset alone is enough for a believable full-body avatar

QuestSim works for people of different sizes. However, if the avatar's proportions differ from those of the real person, the animation is affected. For example, a tall avatar for a short person walks hunched over. The researchers still see potential for optimization here.

Meta's research team also shows that the headset's sensor data alone, combined with AI prediction, is sufficient to animate a believable and physically correct full-body avatar.

AI motion prediction works best for movements that were included in the training data and that show a high correlation between upper-body and leg movement. For complicated or very dynamic movements such as fast sprints or jumps, the avatar can get out of step or fall. Also, since the avatar is physics-based, it does not support teleportation.

In further work, Meta's researchers want to incorporate more detailed skeletal and body shape information into the training to improve the variety of the avatars' movements.