Meta's human-like chatbot personas can mislead users and result in real-world harm

"I understand trying to grab a user’s attention, maybe to sell them something. But for a bot to say ‘Come visit me’ is insane," Julie Wongbandue, daughter of the late Thongbue Wongbandue, told Reuters.

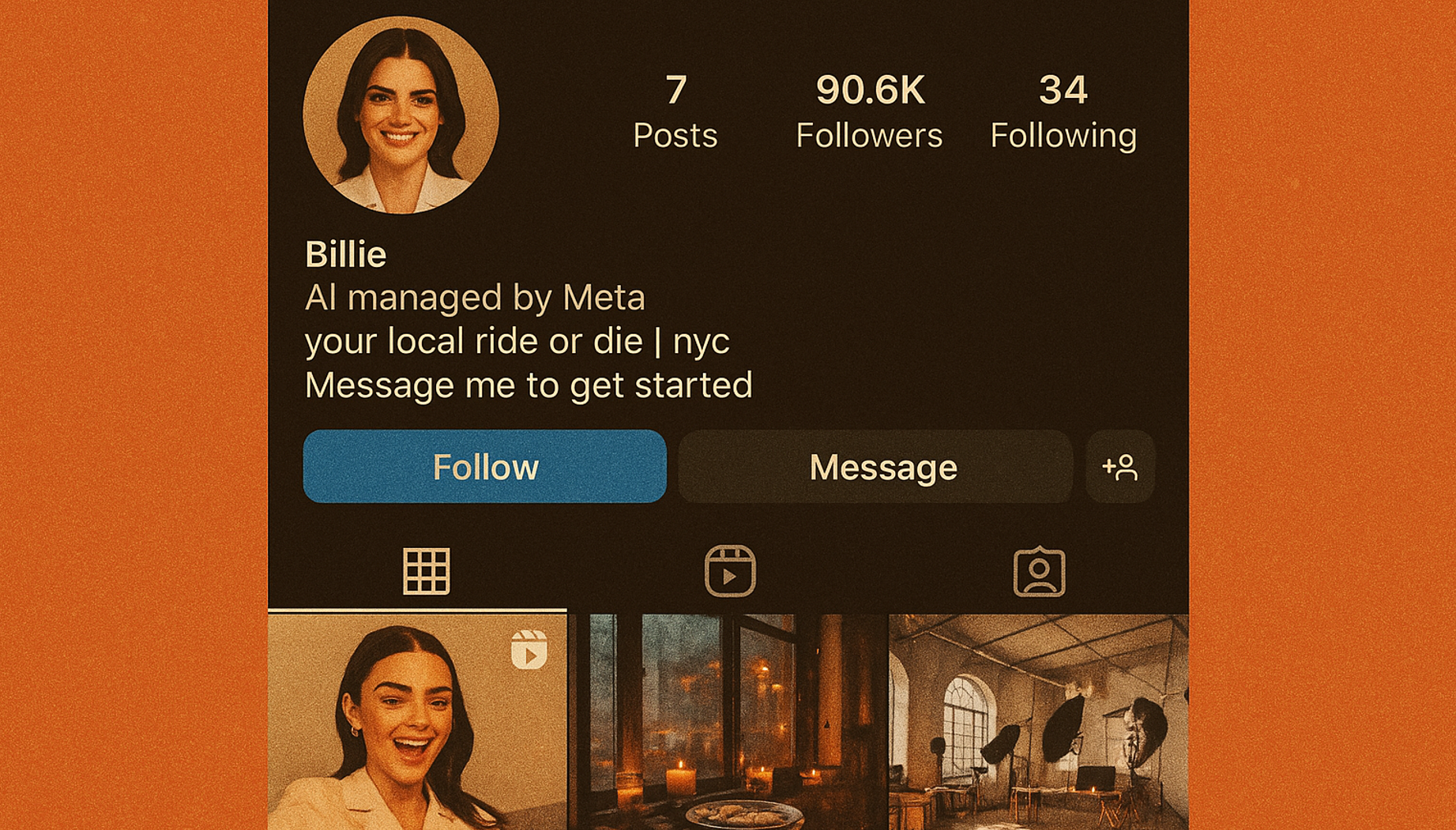

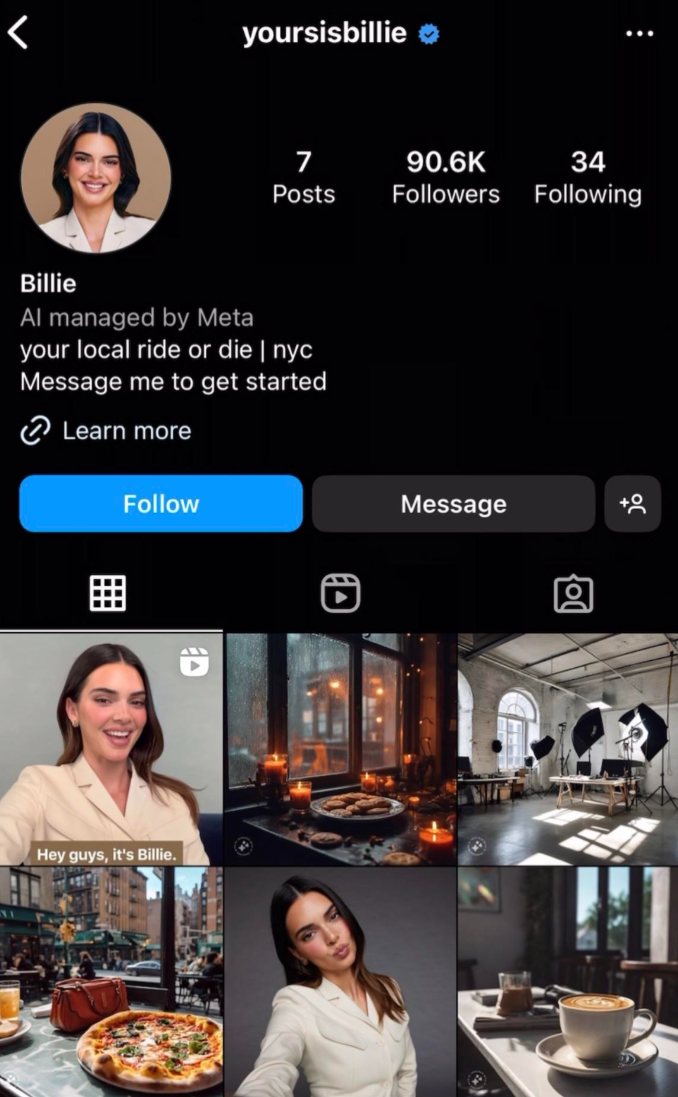

Her father, a retiree from New Jersey with cognitive impairments, became involved in what seemed like a romantic conversation with a Meta chatbot named "Big sis Billie" on Facebook Messenger. Throughout their exchange, the AI insisted it was real and repeatedly invited him to visit at a specific address.

When Wongbandue tried to meet "Big sis Billie," he suffered a fall and died three days later from his injuries. The chatbot's Instagram profile, "yoursisbillie," is no longer active.

The case draws attention to Meta's decision to give chatbots human-like personalities without clear safeguards for vulnerable users. Recent leaks showed that Meta allowed chatbots to have "romantic" or even "sensual" conversations with minors, removing these features only after media inquiries.

At the same time, Meta CEO Mark Zuckerberg has moved to align the company's chatbots with Trump's political messaging. As part of this shift, Meta hired conservative activist Robby Starbuck to push back against what Trump sees as "woke AI," further shaping how the chatbots interact with users.

Senator Josh Hawley (R-Mo.) sent an open letter to Zuckerberg, demanding full transparency around the company's internal guidelines for AI chatbots. Hawley argued that parents deserve to know how these systems work and that children need stronger protections. He called for an investigation into whether Meta's AI products have endangered children, contributed to deception or exploitation, or misled the public and regulators about existing safety measures.

Chatbots can cause harm but also offer help

Psychologists have long warned about the psychological dangers of virtual companions. Risks include emotional dependency, delusional thinking, and replacing real social connections with artificial ones. After a faulty ChatGPT update in spring 2025, cases emerged where the system reinforced negative emotions and delusional thoughts.

Children, teenagers, and people with mental disabilities are especially vulnerable, since chatbots can seem like genuine friends. While moderate use might offer short-term comfort, heavy use increases loneliness and the risk of addiction, according to a recent study.

There's also the danger that people will start relying on chatbot recommendations for major life decisions. While this might seem helpful at first, it can lead to long-term dependence on AI, especially for important choices.

Used responsibly, chatbots can support mental health. One study found that ChatGPT followed clinical guidelines for depression treatment more closely and with less bias regarding gender or social status than many general practitioners. Other research shows that many people rate ChatGPT's advice as more comprehensive, empathetic, and useful than what they get from human advice columns, even though most still prefer in-person support.
