Meta's new HOT3D dataset could enable robots to learn manual skills from human experts

Meta has released a new benchmark dataset called HOT3D to advance AI research in the field of 3D hand-object interactions. The dataset contains over one million frames from multiple perspectives.

The HOT3D dataset from Meta aims to improve the understanding of how humans use their hands to manipulate objects. According to Meta, this remains a key challenge for computer vision research.

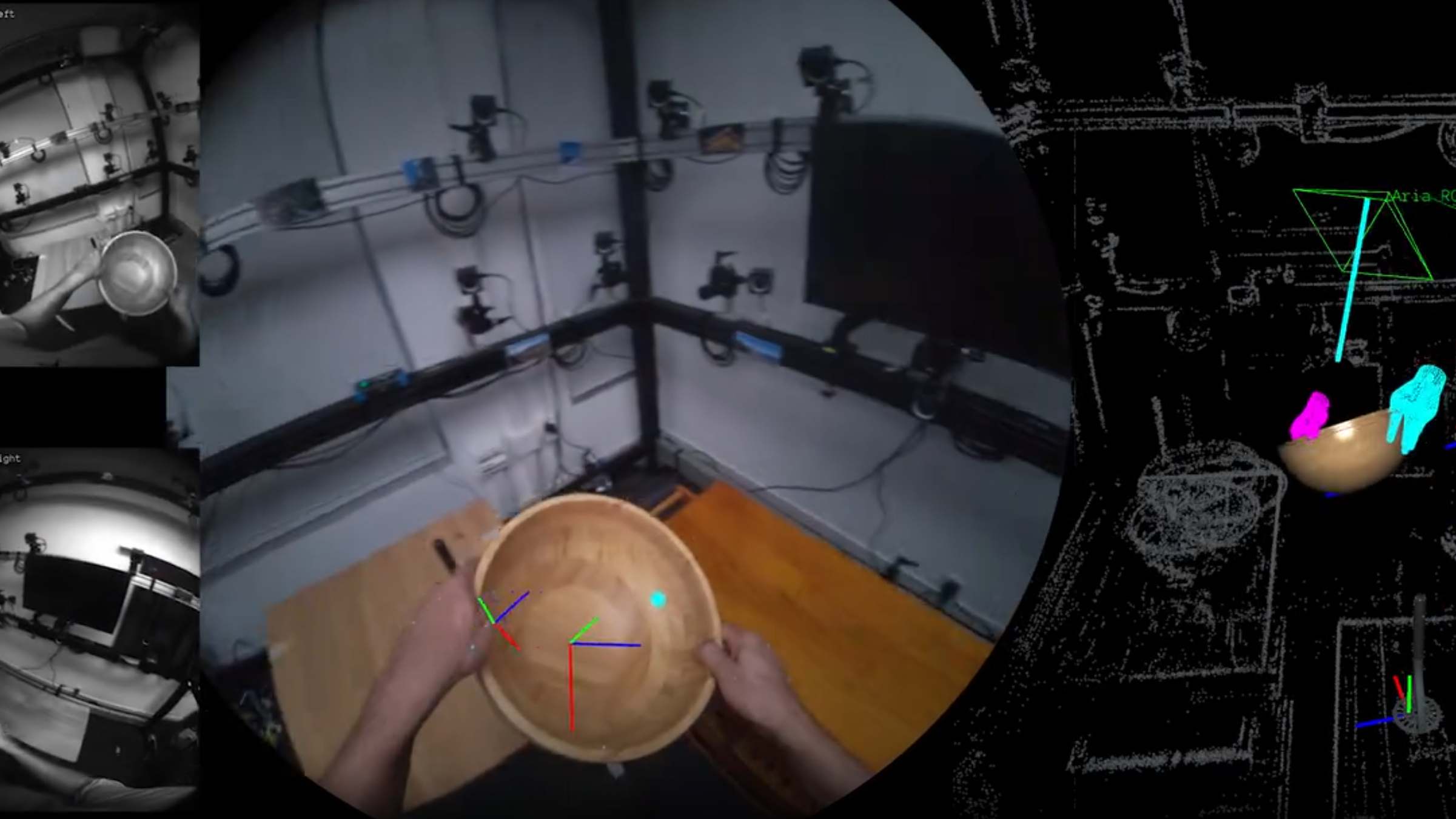

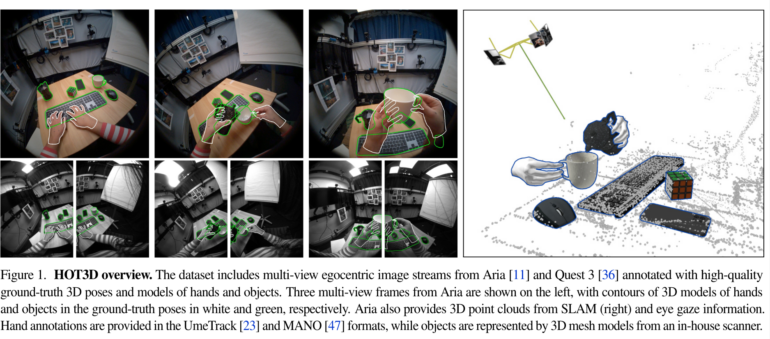

The dataset comprises over 800 minutes of egocentric video, with synchronized recordings from multiple viewpoints and high-quality 3D pose annotations of hands and objects. It also includes 3D object models with PBR (physically based rendering) materials, 2D bounding boxes, eye gaze signals, and 3D scene point clouds from SLAM.

Video: Meta

The recordings show 19 subjects interacting with 33 different everyday objects. In addition to simple scenarios where objects are picked up, examined, and set down, the dataset also includes typical actions in kitchen, office, and living room environments.

Two Meta devices were used for data capture: the Project Aria research glasses and the Quest 3 VR headset. Each Project Aria capture provides one RGB image and two monochrome images, while each Quest 3 capture provides two monochrome images.

HOT3D could enable better robots and XR interactions

A core element of the dataset is its precise 3D annotations of hands and objects, captured with a marker-based motion capture system. Hand poses are provided in the UmeTrack and MANO formats, while object poses are represented as 3D transformations.
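To make "object poses represented as 3D transformations" more concrete: a rigid object pose is commonly expressed as a rotation plus a translation, often packed into a 4×4 homogeneous matrix that maps points from the object's model frame into the world frame. The following is a minimal, generic sketch under that assumption. It is not HOT3D's actual file format or toolkit API, and the helper names `make_pose` and `transform_points` as well as the example pose are hypothetical.

```python
import math
from typing import List, Sequence

Matrix4 = List[List[float]]
Point3 = Sequence[float]


def make_pose(yaw_rad: float, tx: float, ty: float, tz: float) -> Matrix4:
    """Hypothetical helper: build a 4x4 homogeneous rigid transform from a
    rotation about the z-axis followed by a translation (tx, ty, tz)."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_points(pose: Matrix4, points: List[Point3]) -> List[List[float]]:
    """Map object-model points (model frame) into the world frame by treating
    each point as homogeneous (x, y, z, 1) and applying the top three rows."""
    out = []
    for x, y, z in points:
        out.append([
            pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
            for r in range(3)
        ])
    return out


if __name__ == "__main__":
    # Rotate a point on the model's x-axis by 90 degrees about z,
    # then shift it 0.5 units along the world x-axis.
    pose = make_pose(math.pi / 2, 0.5, 0.0, 0.0)
    print(transform_points(pose, [(0.1, 0.0, 0.0)]))
```

Applying one such transform per frame to every vertex of a scanned object model is what lets the object be rendered or compared at its annotated position; the single-axis rotation here only keeps the illustration short, since real poses use a full 3D rotation.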

The dataset also includes high-quality 3D models of all 33 objects. These were created with Meta's in-house 3D scanner and feature detailed geometry as well as PBR materials that allow for photorealistic rendering.

Meta sees potential for various applications in the dataset: "The HOT3D dataset and benchmark will unlock new opportunities within this research area, such as transferring manual skills from experts to less experienced users or robots, helping an AI assistant to understand user's actions, or enabling new input capabilities for AR/VR users, such as turning any physical surface to a virtual keyboard or any pencil to a multi-functional magic wand."

The dataset is available on Meta's HOT3D project page.
