Meta's new state-of-the-art, versatile image model is trained solely on licensed data

Meta's latest image model CM3leon can understand and generate both text and images. It can create images from text descriptions and compose text based on images, making it useful for many tasks.

CM3leon (pronounced "chameleon") is a single foundation model capable of both text-to-image and image-to-text generation. It is the first multimodal model trained with a recipe adapted from text-only language models that can input and generate both text and images.

CM3Leon's architecture uses a decoder-only tokenizer-based transformer network, similar to text-based models. It builds on previous work (RA-CM3), utilizing an external database during training with something called "retrieval augmentation". While other models might only learn from the raw data fed to them, models with retrieval augmentation actively seek out the most relevant and diverse data for their learning process during training, making the training phase more robust and efficient.

Meta claims it requires five times less computation than previous transformer-based methods and less training data, making it as efficient to train as existing diffusion-based models.

A multitasking chameleon

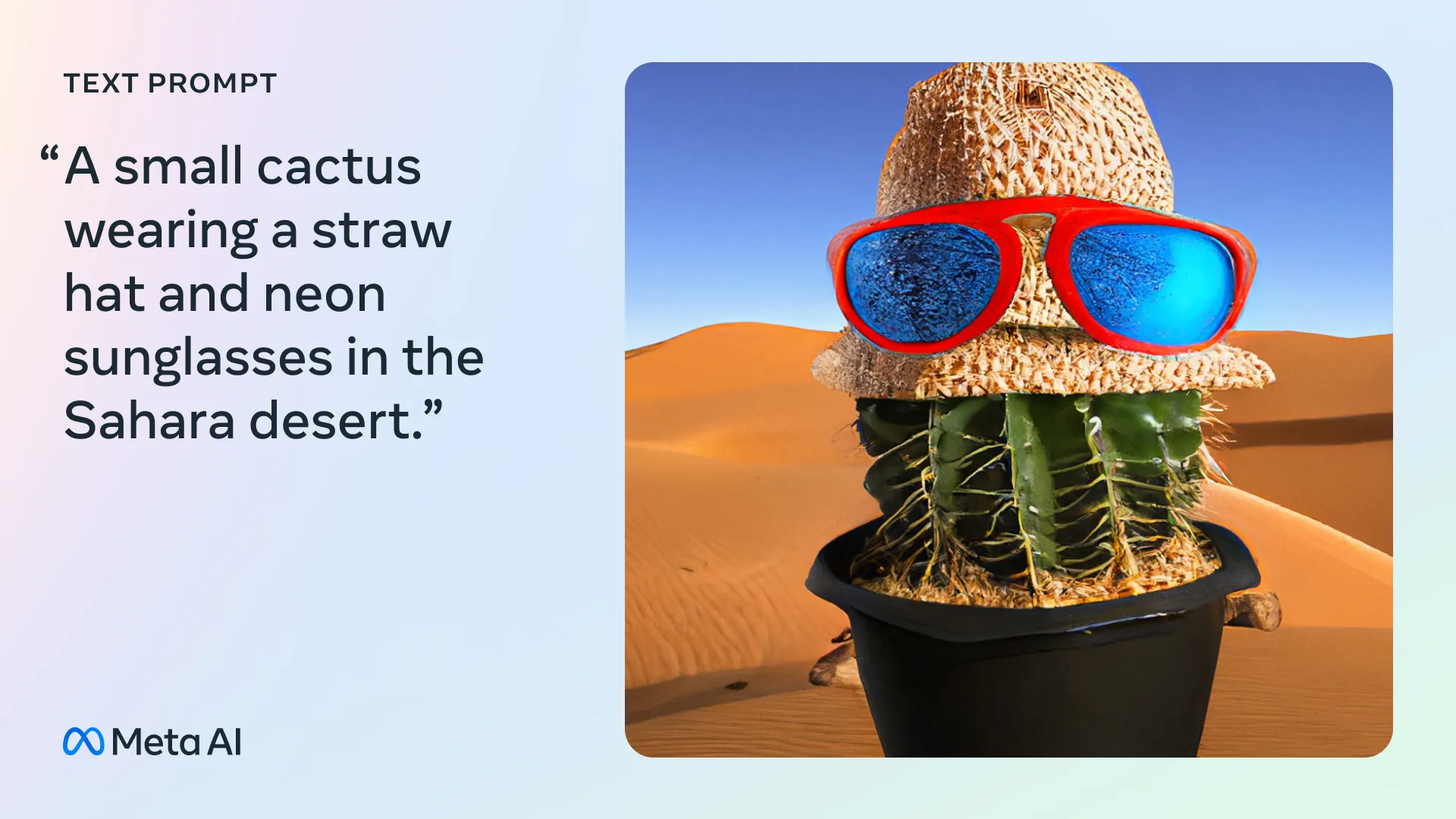

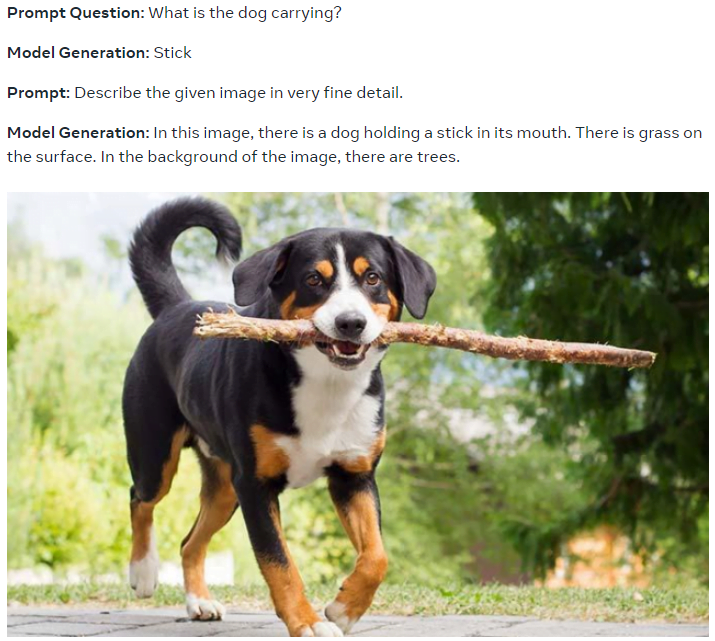

Thanks to large-scale multitask instruction tuning, CM3leon can perform a variety of tasks, including text-guided image generation and editing, text-to-image generation, text-guided image editing, caption generation, visual question answering, and structure-guided image editing.

"Instruction tuning" means that the model is trained to follow instructions given in text format. For example, you could provide an instruction such as "describe an image of a sunset over the ocean," and the AI model will generate a description based on that instruction. The model has been trained on such examples in the wide variety of tasks mentioned above.

Meta also says that scaling recipes developed for text-only models generalize directly to tokenization-based image generation models, which implies even better results with bigger models, trained longer on more data. CM3leon's training included a large-scale retrieval-augmented pre-training phase on huge amounts of data, and then it undergoes a supervised fine-tuning (SFT) phase with instructions to get its multitasking capabilities.

On the image generation benchmark (zero-shot MS-COCO), CM3leon achieves a Fréchet Inception Distance (FID) score of 4.88, which is a new state-of-the-art result and beats Google's Parti image model.

More coherence, more licensing, more metaverse

According to Meta, CM3leon excels at producing coherent images that better follow even complex input instructions. It can better recover global shapes and local details, generate text or numbers as they appear in the prompt, and solve tasks like text-guided image editing that previously required specialized models like Instruct Pix2Pix.

It can also write detailed captions for images, reverse-prompting if you will, which can then be used for further image creation or editing, or for creating synthetic training datasets. Meta says that CM3leon matches or beats Flamingo and OpenFlamingo on text tasks, even though it was trained on less text (3 billion text tokens).

Most notably, Meta says the model was trained on a "new large Shutterstock dataset that includes only licensed image and text data," but it's still very competitive compared to other models.

"As a result, we can avoid concerns related to images ownership and attribution without sacrificing performance," they write.

According to Meta, CM3leon is a step toward higher-fidelity image generation and understanding, paving the way for multimodal language models. And it's still a believer in the metaverse, stating that models like CM3leon "could ultimately help boost creativity and better applications in the metaverse."

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.