Microsoft: Iran uses generative AI to influence US elections

Iran is increasingly turning to cyber-enabled influence operations and generative AI to manipulate the 2024 US presidential election, according to a recent report from Microsoft. Russia and China also remain active threats.

Microsoft's Threat Analysis Center (MTAC) warns that Iranian actors are ramping up their use of cyber influence operations and generative AI technologies to target the 2024 US elections.

Since June 2024, Iran has been laying the groundwork for influence operations aimed at US targets. This includes initial cyber reconnaissance and the seeding of online personas and websites into the information space.

Microsoft has identified several Iranian groups involved in these activities. The Sefid Flood group has been preparing for influence operations since the Iranian New Year's festival in late March. They specialize in impersonating social and political activists to sow chaos and undermine trust in authorities.

Another group called Mint Sandstorm, linked to Iran's Revolutionary Guards, attempted to phish a senior staffer of a presidential campaign in June. The group had carried out similar attacks before the 2020 election.

AI plagiarism for influence

Microsoft also reports on an Iranian network called Storm-2035 that runs four websites posing as news portals. These target US voter groups at both ends of the political spectrum, spreading polarizing messages on topics like presidential candidates, LGBTQ rights, or the Ukraine war.

The report says Iranian actors are using AI-based services to plagiarize and rephrase content from US publications. They employ SEO plugins and other AI-based tools to generate article headlines and keywords.

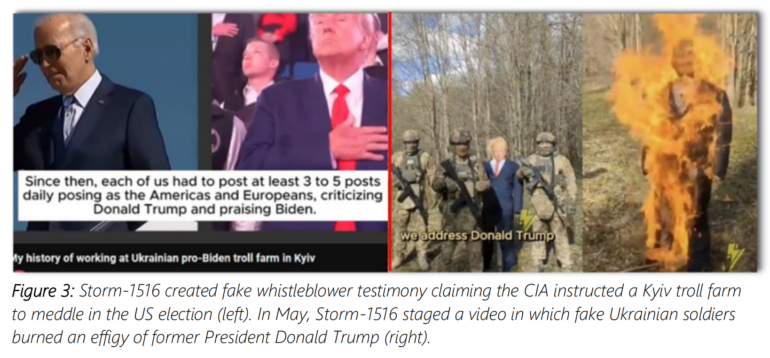

In addition to Iran, Russian and Chinese actors also remain active. Microsoft is observing three Russian influence actors running campaigns related to the US elections. One of them, the Storm-1516 group, has focused since April on producing fake videos that spread scandalous claims.

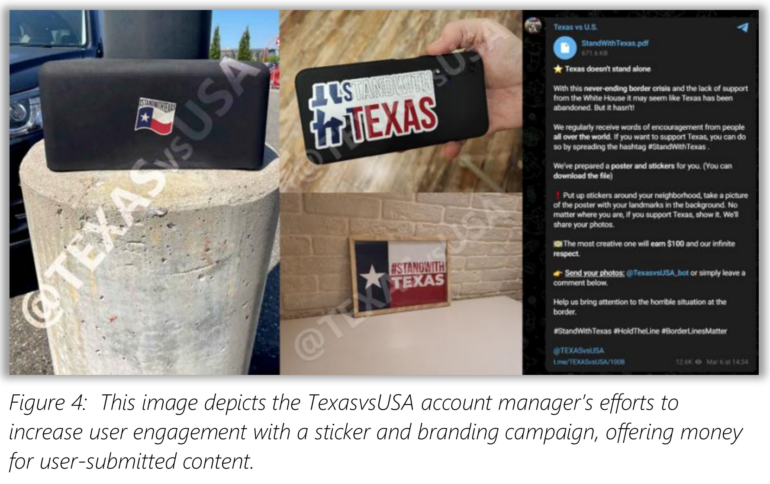

Chinese actors like Taizi Flood and Storm-1852 have expanded their activities to new platforms and evolved their tactics. They use hundreds of accounts to stir outrage over pro-Palestinian protests at US universities and are increasingly turning to short videos on political topics.

AI hype in influence ops fading

Microsoft stresses that the actors' use of generative AI has had limited impact so far - and many are instead returning to tried-and-true methods: "In total, we’ve seen nearly all actors seek to incorporate AI content in their operations, but more recently many actors have pivoted back to techniques that have proven effective in the past—simple digital manipulations, mischaracterization of content, and use of trusted labels or logos atop false information."

Full automation of political influence therefore does not yet seem possible, but generative AI can support and scale these efforts. OpenAI recently identified and banned several such groups.
