Microsoft releases real-time AI-generated playable demo of Quake II

Microsoft has introduced a research project that generates and runs Quake II entirely within an AI model, producing a playable version of the game in real time.

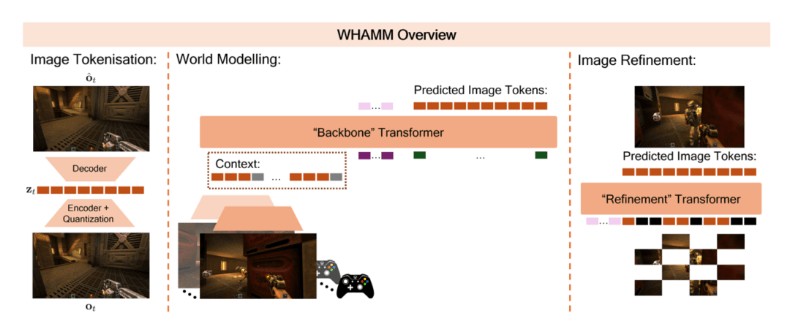

The model, called WHAMM (World and Human Action MaskGIT Model), is part of Microsoft’s Copilot Labs and is designed to explore the capabilities and boundaries of generative AI in interactive media. It builds on an earlier version, WHAM-1.6B, which was trained on the game Bleeding Edge. That model managed only about one frame per second.

WHAMM increases performance significantly, generating over ten frames per second—enough to support real-time interactivity within the model itself. Both WHAMM and WHAM-1.6B are part of Microsoft’s “Muse” model family, which focuses on generative AI tools for game development.

Training with drastically less data

One of WHAMM’s key innovations is its ability to learn from far less data. While WHAM-1.6B was trained on seven years of gameplay, WHAMM required just one week of Quake II gameplay collected from a single level. The dataset, recorded by professional testers, offered targeted and high-quality examples that allowed the model to efficiently learn in-game behavior.

WHAMM also adopts a different technical strategy. WHAM-1.6B used an autoregressive method, in which image tokens are generated one at a time. WHAMM instead implements a MaskGIT strategy, which lets the model generate all image tokens in parallel over several iterations. As a result, generation speed has increased significantly, and the output resolution has roughly doubled in each dimension, from 300 × 180 pixels to 640 × 360 pixels.
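To make the difference concrete, here is a toy sketch of MaskGIT-style parallel decoding. It is not Microsoft's implementation: `toy_predict` is a hypothetical stand-in that returns random tokens and confidences, and the cosine unmasking schedule is an assumption, since the article does not say which schedule WHAMM uses. The point is only that every position is predicted in each pass, and the most confident predictions are committed first.

```python
import math
import random

MASK = -1  # sentinel for a token position that has not been decided yet


def toy_predict(tokens, rng, vocab_size):
    """Hypothetical stand-in for the model: one (token, confidence) guess per position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def maskgit_decode(num_tokens, steps, vocab_size=16, seed=0):
    """Fill all positions in `steps` parallel passes instead of one pass per token."""
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    passes = 0
    for step in range(1, steps + 1):
        proposals = toy_predict(tokens, rng, vocab_size)  # predicts every position at once
        passes += 1
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Assumed cosine schedule: how many positions stay masked after this step.
        keep_masked = math.floor(num_tokens * math.cos(math.pi / 2 * step / steps))
        if step == steps:
            keep_masked = 0  # the final pass must commit everything that is left
        num_to_commit = max(len(masked) - keep_masked, 0)
        # Commit the most confident proposals; the rest stay masked for the next pass.
        masked.sort(key=lambda i: proposals[i][1], reverse=True)
        for i in masked[:num_to_commit]:
            tokens[i] = proposals[i][0]
    return tokens, passes


tokens, passes = maskgit_decode(num_tokens=64, steps=8)
```

With 64 tokens and 8 steps, this loop makes 8 model passes, whereas a token-by-token autoregressive decoder would need 64. That gap is where the speed-up described above comes from.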

WHAMM's architecture consists of two main components. The first is a “backbone” transformer with roughly 500 million parameters, which generates the initial image predictions. The second is a smaller “refinement” module with 250 million parameters that iteratively improves the output. To produce each new frame, the model uses the previous nine image-action pairs as context.
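The two-stage design and the nine-pair context can be pictured as a simple generation loop. The sketch below is illustrative only: `backbone` and `refine` are hypothetical placeholders rather than WHAMM's actual modules, frames are represented by integer ids, and the number of refinement steps is an assumed parameter.

```python
from collections import deque

CONTEXT_PAIRS = 9  # from the article: the previous nine image-action pairs


def backbone(context, action):
    """Hypothetical backbone: drafts a frame from the context and the current action."""
    return {"from_frames": [frame_id for frame_id, _ in context],
            "action": action,
            "refined": 0}


def refine(draft):
    """Hypothetical refinement pass: here it only counts how often it was applied."""
    return {**draft, "refined": draft["refined"] + 1}


def play(actions, refine_steps=2):
    """Generate one frame per action, keeping only the most recent nine pairs as context."""
    context = deque(maxlen=CONTEXT_PAIRS)  # older pairs fall out automatically
    frames = []
    for frame_id, action in enumerate(actions):
        frame = backbone(list(context), action)
        for _ in range(refine_steps):  # refine_steps is an assumed value
            frame = refine(frame)
        frames.append(frame)
        context.append((frame_id, action))
    return frames, context


frames, context = play(["forward"] * 12)
```

After twelve steps the context holds only frames 3 through 11; anything the model showed in frames 0 to 2 is no longer available to it.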

Playable demo highlights current capabilities

The AI-generated version of Quake II, which Microsoft has made available for testing, supports core interactions such as moving, jumping, shooting, and placing objects. The simulation also preserves changes made to the environment and allows players to explore hidden sections of the level.

AI-generated gameplay demo. | Video: Microsoft

Although WHAMM supports basic gameplay, it does not fully reproduce the original Quake II. The model generates an approximation of the environment based on a narrow training dataset, which leads to several technical limitations.

Enemy characters appear visually blurred, combat lacks realism, and health indicators are unreliable. Objects disappear from the scene if they remain off-screen for more than 0.9 seconds—the limit of the model’s context window. The playable area is restricted to a single segment of the level, and the simulation freezes once that section ends. Input latency also remains high, with noticeable delays between player input and system response.
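The 0.9-second figure follows from numbers given earlier in the article: a context of nine frames at roughly ten frames per second. A small sketch of that arithmetic, assuming exactly 10 fps (the article says "over ten") and using hypothetical helper names:

```python
def context_seconds(context_frames, fps):
    """Length of time covered by the context window, in seconds."""
    return context_frames / fps


def still_in_context(frames_since_last_seen, context_frames=9):
    """True if the last frame showing an object is still inside the context window."""
    return frames_since_last_seen <= context_frames


window = context_seconds(context_frames=9, fps=10)  # 9 / 10 = 0.9 seconds
```

Under these assumptions, an object last seen nine frames ago is still visible to the model, while one last seen ten frames ago has dropped out of the context entirely.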

Emerging tools for AI-driven game development

WHAMM is part of a broader set of recent initiatives exploring how generative AI can be applied to game development. Other examples include GameGen-O, which focuses on generating open-world simulations, as well as GameNGen, a system from Google that simulates DOOM, and DIAMOND, which simulates Counter-Strike. While these models represent significant progress, they continue to face technical constraints, including low-resolution output, limited memory, and reduced contextual awareness.

The gaming industry is a natural candidate for generative AI because it brings together multiple disciplines (code, design, storytelling, and multimedia) within development cycles that are often constrained by tight budgets and timelines. This combination of creative complexity and resource pressure makes game production especially receptive to tools that can partially automate structured tasks.
