Microsoft researcher describes two new deepfake methods and their risks

Eric Joel Horvitz is a computer scientist and director of the Microsoft Research Lab in Redmond. In a new research paper, he describes two new deepfake methods and their far-reaching risks.

In the paper, titled "On the Horizon: Interactive and Compositional Deepfakes," Horvitz presents both as techniques he considers technically feasible and "that we can expect to come into practice with costly implications for society."

Interactive and compositional deepfakes

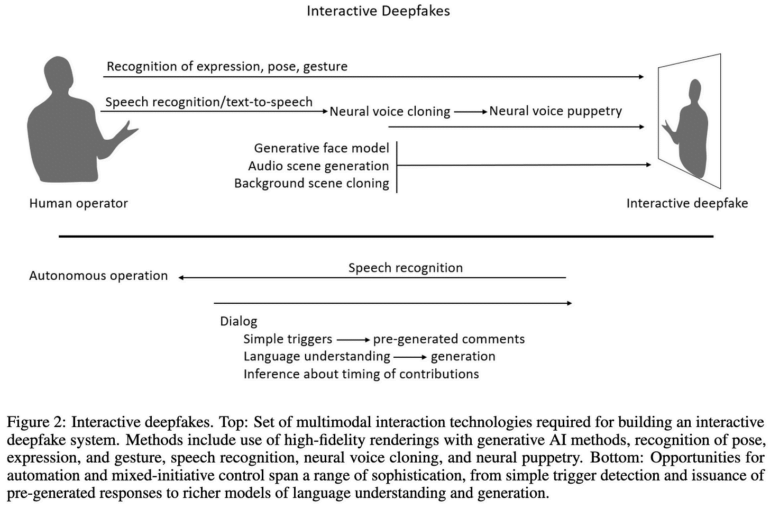

Horvitz uses the term "interactive deepfakes" for multimodal deepfake clones of real people that could be indistinguishable from the real person, for example during video calls. Current deepfake systems are mostly limited to swapping faces, and even that allows only limited interaction: the illusion breaks down with head turns or pronounced facial expressions, for example.

For interactive deepfakes, Horvitz envisions a combination of AI techniques that synthetically recreate poses, facial expressions, gestures, and speech, while a real person controls the clone remotely like a puppeteer ("neural puppetry").

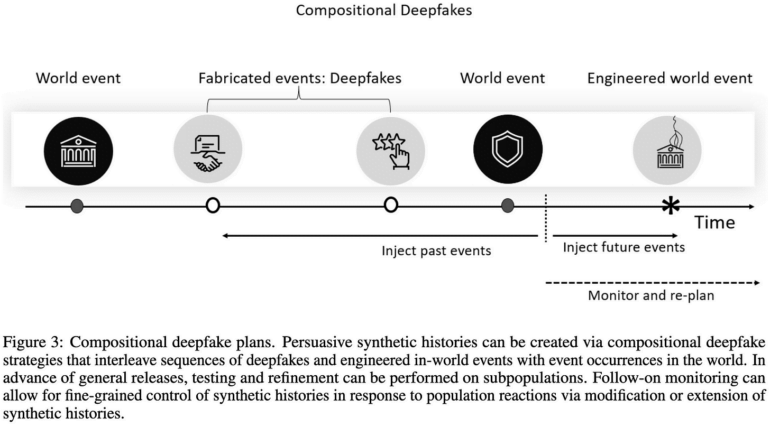

A "compositional deepfake," according to Horvitz, combines a series of deepfakes that together build a larger fabricated story, such as a terrorist attack or a scandal that never happened.

These deepfakes could be interwoven with real events to systematically establish "synthetic histories," or falsified accounts of history, among subgroups of the population, for example. Horvitz refers to such systems as "adversarial explanation systems" that attack a shared understanding of world events both retroactively and predictively.

Fighting back across disciplines, as best we can

"In the absence of mitigations, interactive and compositional deepfakes threaten to move us closer to a post-epistemic world, where fact cannot be distinguished from fiction," Horvitz writes.

As possible countermeasures, Horvitz discusses familiar approaches such as educating the public about deepfake risks, regulating the technology, automatically detecting deepfakes, red-teaming, and cryptographic proof of authenticity, for example through digital watermarks.

Horvitz also advocates thinking across disciplines about how AI advances could serve both the public good and malicious purposes, in order to anticipate coming developments as accurately as possible.

"As we progress at the frontier of technological possibilities, we must continue to envision potential abuses of the technologies that we create and work to develop threat models, controls, and safeguards—and to engage across multiple sectors on rising concerns, acceptable uses, best practices, mitigations, and regulations."
