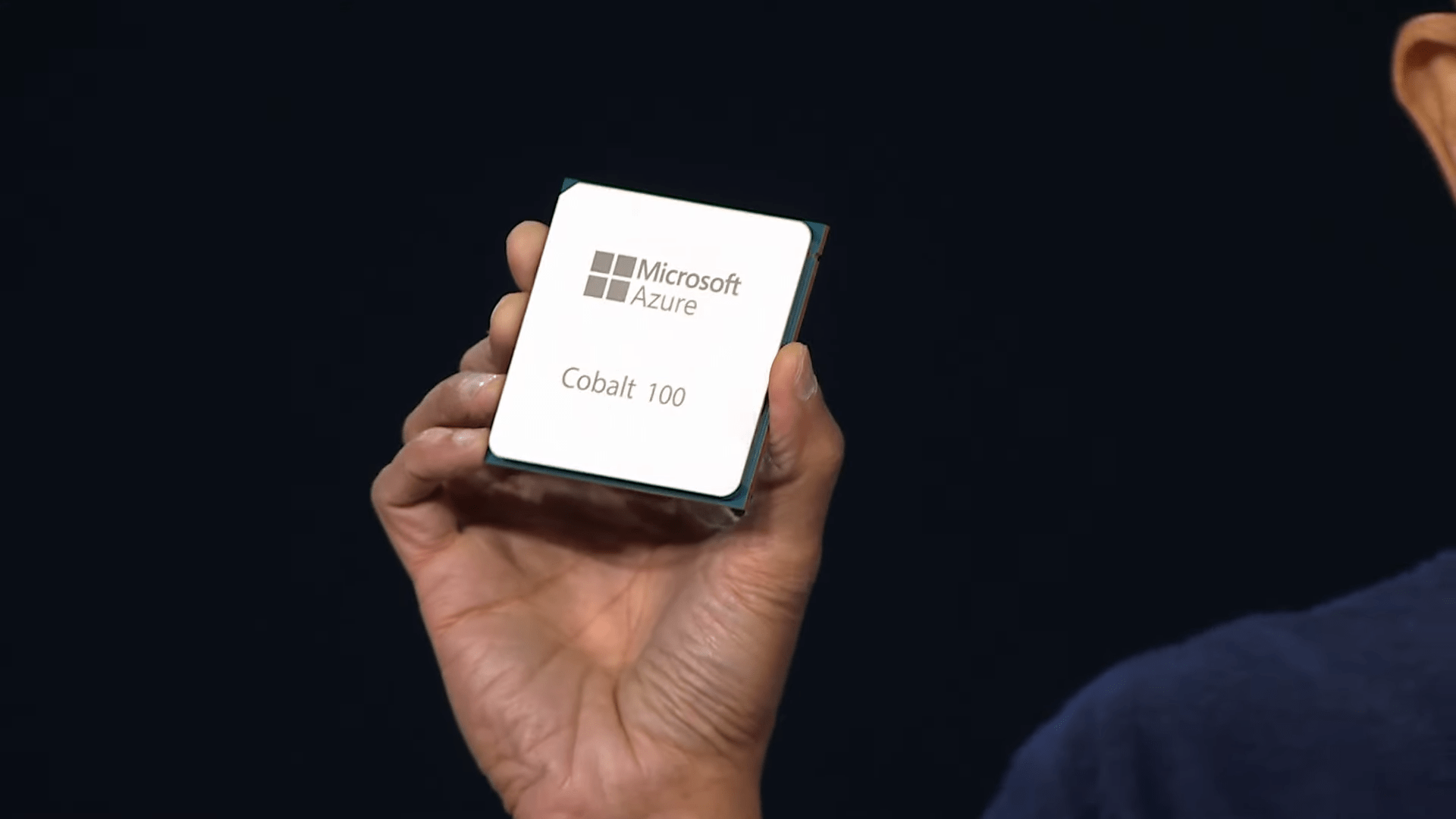

Microsoft has developed its own custom AI chip, the Azure Maia, and an Arm-based CPU, the Azure Cobalt, for its cloud infrastructure, confirming earlier rumors.

The chips, set to arrive in 2024, aim to reduce reliance on Nvidia GPUs and prepare Microsoft and its customers for increased AI usage.

The Azure Cobalt CPU is a 128-core chip designed for general cloud services on Azure, while the Maia 100 AI accelerator is intended for cloud AI workloads, such as training large language models and running inference on them.

The Maia chip is currently being tested with GPT-3.5 Turbo, the model that powers the free version of ChatGPT, Bing AI, and GitHub Copilot, Microsoft's coding assistant.

Complementing these new chips is Azure Boost, a system that accelerates storage and networking by offloading these processes from host servers to purpose-built hardware and software.

By developing its own AI chip and Arm-based CPU, Microsoft can better control costs and optimize the performance of its cloud infrastructure. The move also allows the company to tailor its hardware to the needs of its Azure customers.

Microsoft has yet to release specifications or performance comparisons. But Microsoft CEO Satya Nadella claims the Cobalt 100 CPU is "the fastest of any cloud provider" and 40 percent faster than the commercial Arm-based servers it's using today.

The new chips don't mean that Microsoft is going it alone when it comes to AI hardware. Nadella says the new chips are complementary, and that Microsoft will continue to use AMD's latest Instinct MI300X GPUs as well as Nvidia's new H200 Tensor Core GPU.

Microsoft is transforming itself from a Windows company to a co-pilot company

While the new chips were probably the biggest announcement of the show, Nadella also talked a lot about copilots, citing Microsoft's own research from its Work Trend Index: 70 percent of Copilot users say they are more productive, and 68 percent say they produce better quality work.

70% of Copilot users said they were more productive and 68% said it improved the quality of their work; 68% say it helped jumpstart the creative process.

Overall, users were 29% faster at specific tasks (searching, writing and summarizing).

Users caught up on a missed meeting nearly 4x faster.

64% of users said Copilot helps them spend less time processing email.

87% of users said Copilot makes it easier to get started on a first draft.

75% of users said Copilot “saves me time by finding whatever I need in my files.”

77% of users said once they use Copilot, they don’t want to give it up.

Microsoft

Three out of four users say they want to continue using Copilot, and it has become an important part of their daily work. So it makes sense for Microsoft to build more Copilots.

Microsoft has rebranded Bing Chat as Copilot, perhaps to better compete with OpenAI's ChatGPT, which is still head and shoulders above Bing Chat in general usage. Microsoft is now positioning Copilot as a free AI chatbot option, with Copilot for Microsoft 365 as the paid version. The free version will be available in Bing and Windows, as well as at copilot.microsoft.com.

Microsoft also introduced Copilot Studio, a low-code solution that enables organizations to build custom AI chatbots and copilots. It extends the capabilities of Microsoft 365 Copilot by providing customization options for data sets, automation flows, and non-Microsoft Graph copilots.

But wait, there's more to Copilot: Copilot for Service, according to Microsoft, accelerates the AI transformation in customer service by "adding generative AI to customer contact centers." Agents can ask Copilot for Service questions in natural language and receive insights based on data sources from knowledge repositories.

Copilot in Microsoft Dynamics 365 will initially combine generative AI and mixed reality with AR service applications, with a focus on HoloLens 2.

Copilot for Azure simplifies IT management by understanding users' roles and goals and improving their ability to design, operate, and maintain applications and infrastructure components.

Copilot for all is expected to be available on December 1, 2023. Watch highlights from Ignite 2023 in the video below.