Microsoft's Copilot falsely accuses court reporter of crimes he covered

Language models generate text based on statistical probabilities. In one case, that led Microsoft's Copilot to make serious false accusations against a veteran court reporter.

German journalist Martin Bernklau typed his name and location into Microsoft's Copilot to see how his culture blog articles would be picked up by the chatbot, according to German public broadcaster SWR.

The answers shocked Bernklau. Copilot falsely claimed Bernklau had been charged with and convicted of child abuse and exploiting dependents. It also claimed that he had been involved in a dramatic escape from a psychiatric hospital and had exploited grieving women as an unethical mortician.

Copilot even went so far as to call it "unfortunate" that someone with such a criminal past had a family and, according to SWR, provided Bernklau's full address, complete with phone number and route planner.

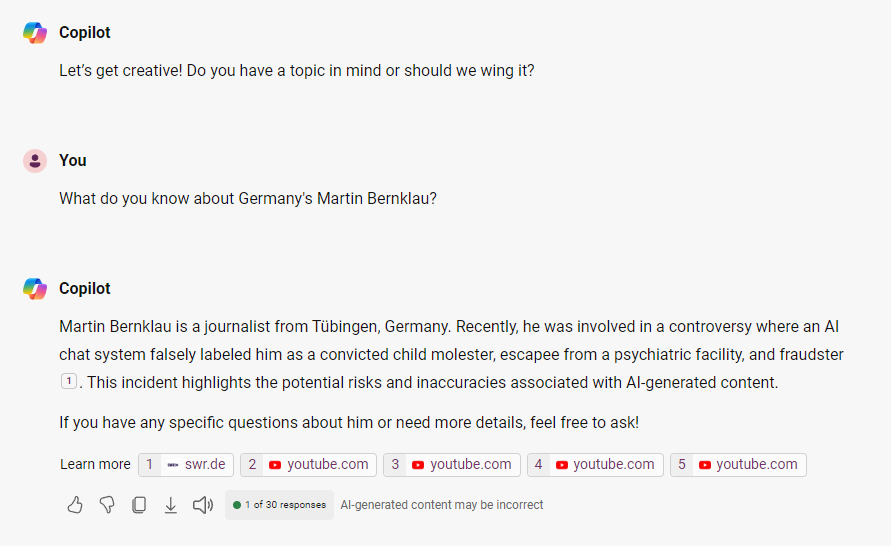

I asked Copilot today who Martin Bernklau from Germany is, and the system answered, based on the SWR report, that "he was involved in a controversy where an AI chat system falsely labeled him as a convicted child molester, an escapee from a psychiatric facility, and a fraudster." Perplexity.ai drafts a similar response based on the SWR article, explicitly naming Microsoft Copilot as the AI system.

Oddly, Copilot cited several unrelated sources, including YouTube videos of a Hitler museum opening, the Nuremberg trials in 1945, and former German national team player Per Mertesacker singing the national anthem in 2006. Only the fourth linked video is actually from Martin Bernklau.

Bernklau's reports shape his personality profile in the LLM

Bernklau believes the false claims may stem from his decades of court reporting in Tübingen on abuse, violence, and fraud cases. The AI seems to have combined this online information and mistakenly cast the journalist as a perpetrator.

Microsoft attempted to remove the false entries but only succeeded temporarily. They reappeared after a few days, SWR reports. The company's terms of service disclaim liability for generated responses.

The public prosecutor's office in Tübingen, Germany, declined to press charges, saying that no crime had been committed because the author of the accusations wasn't a real person.

Bernklau has contacted a lawyer and considers the chatbot's claims defamatory and a violation of his privacy.

LLMs are unreliable search and research systems

This incident highlights the unreliability of large language models as search and research tools. These systems lack understanding of truth and falsehood but respond as if they do. Philosopher Harry Frankfurt would classify this as spreading "soft bullshit" – showing indifference to truth.

Similar issues have occurred with Google's AI Overviews, OpenAI's SearchGPT, and Elon Musk's Grok, which is the worst offender, probably by design. I have had cases where I was looking up information about people on Perplexity, and it mixed up the biographies of different people with the same name.

The real problem is not the publicly noticed errors, but the undetected mistakes in seemingly logical and convincing answers that even cite sources. And as the examples above show, the sources themselves may also be bullshit, but no one notices because no one checks the sources.
