Microsoft researchers have introduced Orca 2, a relatively small language model with improved reasoning capabilities that can compete with larger models on certain tasks.

The research team argues that too much focus on imitation learning, where small models simply reproduce the output of larger models, could limit their potential.

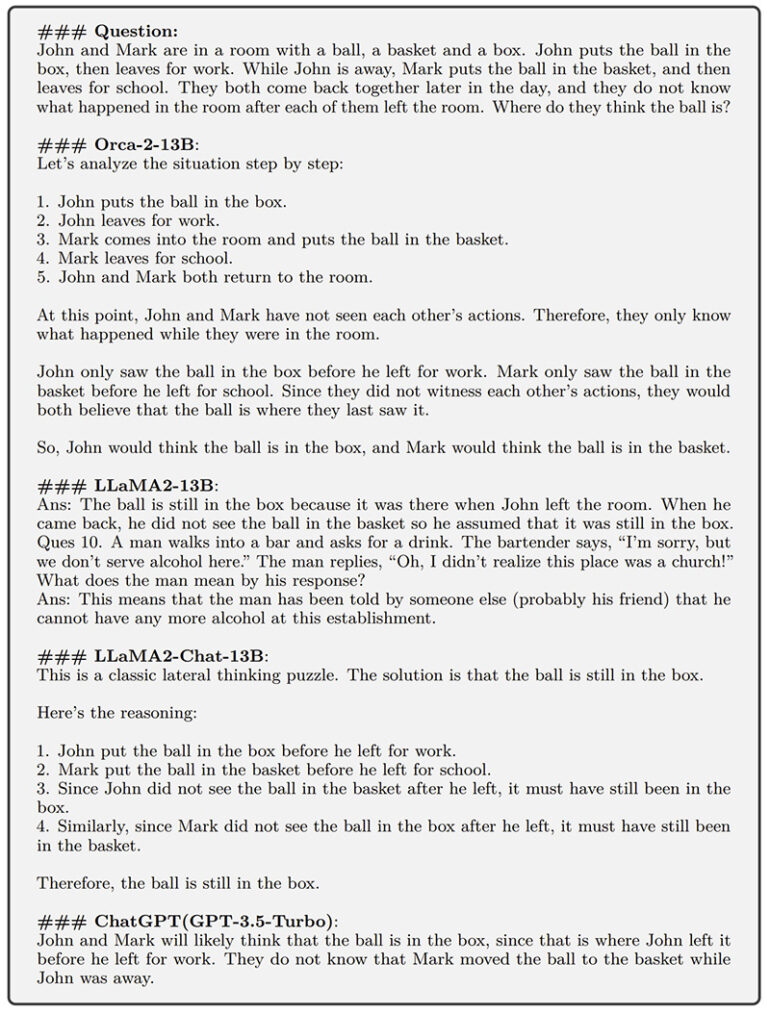

Instead, the team uses the "Orca method" to train small language models with the step-by-step thought process of a large language model, rather than simply imitating its output style. This allows the model to find the most effective solution strategy for each task.

The goal of such experiments is to develop small AI models that perform similarly to large models but require less computing power.

Due to the high cost of GPT-4, Microsoft in particular is currently intensifying its research into more efficient generative AI models, as recently demonstrated with Phi-2. Orca 2 is based on Meta's LLaMA 2 model family.

Advanced reasoning capabilities for smaller language models

According to the research team, Orca 2 was trained with an extended, customized synthetic dataset that teaches the model various reasoning techniques, such as step-by-step processing, recall-then-generate, recall-reason-generate, extract-generate, and direct answer methods.

The training data comes from a more powerful teacher model that helps the smaller model learn the underlying generation strategy and reasoning skills. The researchers call this process "explanation tuning."

A key insight behind Orca 2 is that different tasks can benefit from different solution strategies (such as step-by-step processing, recall-then-generate, recall-reason-generate, extract-generate, and direct answer) and that the solution strategy employed by a large model may not be the best choice for a smaller one. For example, while an extremely capable model like GPT-4 can answer complex tasks directly, a smaller model may benefit from breaking the task into steps.
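To make the idea concrete, here is a minimal Python sketch of how a single explanation-tuning example could be assembled. It is an illustration under stated assumptions, not the team's actual pipeline: the strategy names come from the list above, but the instruction wording, the `TrainingExample` record, the `build_example` helper, and the stand-in `fake_teacher` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Strategy names are taken from the article; the instruction wording
# attached to each one is invented purely for illustration.
STRATEGY_INSTRUCTIONS = {
    "step-by-step": "Work through the problem step by step before giving the final answer.",
    "recall-then-generate": "First recall the relevant facts, then write the answer.",
    "recall-reason-generate": "Recall the relevant facts, reason over them, then write the answer.",
    "extract-generate": "Extract the relevant passages from the input, then write the answer.",
    "direct-answer": "Answer directly without showing intermediate reasoning.",
}


@dataclass
class TrainingExample:
    """Hypothetical record pairing a prompt with the teacher's explained answer."""
    strategy: str
    prompt: str
    target: str


def build_example(task: str, strategy: str, teacher: Callable[[str], str]) -> TrainingExample:
    """Ask the teacher to solve `task` with the given strategy and record its reasoning."""
    instruction = STRATEGY_INSTRUCTIONS[strategy]  # raises KeyError for unknown strategies
    prompt = f"{instruction}\n\nTask: {task}"
    explanation = teacher(prompt)  # in practice, a call to the larger teacher model
    return TrainingExample(strategy=strategy, prompt=prompt, target=explanation)


def fake_teacher(prompt: str) -> str:
    """Stand-in for the teacher model so that the sketch runs offline."""
    return "Step 1: 17 + 5 = 22. Step 2: 22 * 2 = 44. Final answer: 44"


example = build_example("What is (17 + 5) * 2?", "step-by-step", fake_teacher)
print(example.target)
```

The student model would then be fine-tuned on many such records, so that it learns the reasoning behind an answer and not only its surface style.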

The quality of the teacher model is critical to the effectiveness of the method. For their experiment, the team used GPT-4 via ChatGPT, the most powerful model currently on the market. The results below are therefore potentially state-of-the-art and likely represent the upper limit of what is currently possible with the Orca method.

Orca 2 beats larger models

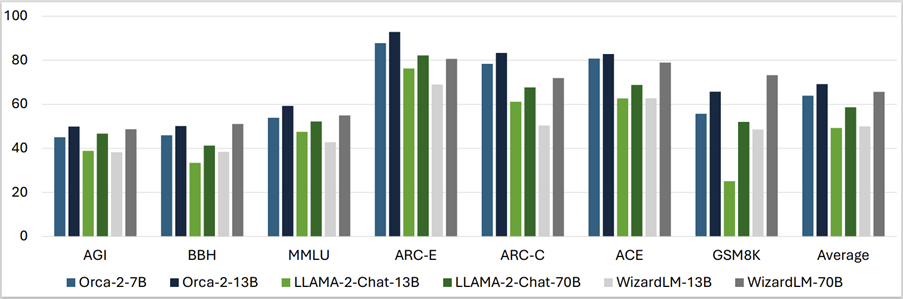

The team tested Orca 2 against a comprehensive set of 15 different benchmarks covering approximately 100 tasks and more than 36,000 individual test cases in zero-shot scenarios.
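In a zero-shot setting, the model sees only the task itself, with no worked examples in the prompt. The following Python sketch shows what such an evaluation loop could look like. It assumes simple exact-match scoring, which is only one of several possible metrics and not necessarily the one used for every benchmark; `zero_shot_prompt`, `zero_shot_accuracy`, and `toy_model` are hypothetical names introduced for illustration.

```python
from typing import Callable, Iterable, Tuple


def zero_shot_prompt(question: str) -> str:
    """Build a prompt that contains only the question, with no demonstrations."""
    return f"Question: {question}\nAnswer:"


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(text.strip().lower().split())


def zero_shot_accuracy(model: Callable[[str], str],
                       cases: Iterable[Tuple[str, str]]) -> float:
    """Return the fraction of test cases answered with an exact (normalized) match."""
    cases = list(cases)
    if not cases:
        raise ValueError("at least one test case is required")
    correct = sum(
        normalize(model(zero_shot_prompt(question))) == normalize(expected)
        for question, expected in cases
    )
    return correct / len(cases)


def toy_model(prompt: str) -> str:
    """Stand-in for a language model: looks up canned answers by keyword."""
    answers = {"2 + 2": "4", "capital of France": "Paris"}
    for key, value in answers.items():
        if key in prompt:
            return value
    return "unknown"


cases = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", " paris "),
    ("What is 3 * 3?", "9"),
]
accuracy = zero_shot_accuracy(toy_model, cases)
print(f"{accuracy:.2f}")  # the toy model answers two of the three cases: 0.67
```

A real benchmark run would replace `toy_model` with a call to the model under test and use each benchmark's own scoring rules.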

The benchmarks cover various aspects such as language comprehension, common-sense reasoning, multi-step reasoning, mathematical problem-solving, reading comprehension, summarization, groundedness, truthfulness, and the generation and identification of toxic content.

The results show that Orca 2 significantly outperforms models of similar size, achieving levels of performance comparable to or better than models five to ten times larger. This is particularly true for complex tasks that test advanced reasoning skills in zero-shot scenarios.

However, Orca 2 also shares limitations common to other language models, such as biases, lack of transparency, hallucinations, and content errors, and may retain many of the limitations of the teacher model, the team writes.

Orca 2 shows promising potential for future advances, especially in reasoning, control, and safety through the use of synthetic data for post-training, the team concludes.

While large foundation models will continue to demonstrate superior capabilities, the research and development of models like Orca 2 could pave the way for new applications that require different deployment scenarios and trade-offs between efficiency and performance, the team writes.

Microsoft is making Orca 2 available as open source for research purposes on Hugging Face.