MiniGPT-4 is another example of open-source AI on the rise

OpenAI introduced GPT-4 as a multimodal model with image understanding, but has not yet released the image part of the model. MiniGPT-4 makes it available today - as an open-source model.

MiniGPT-4 is a chatbot with image understanding, a feature that OpenAI introduced at the launch of GPT-4 but has not yet released outside the Be My Eyes app.

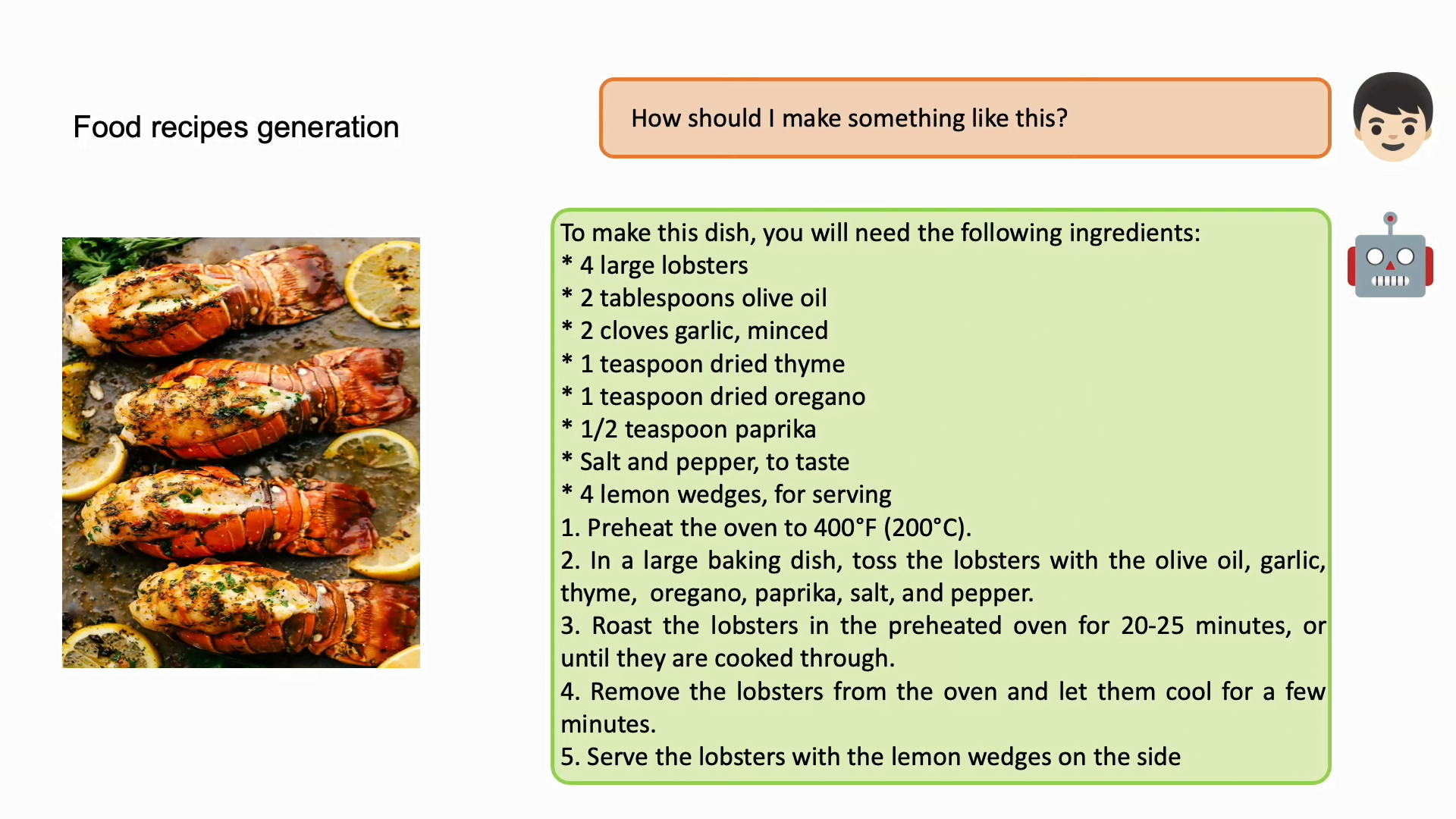

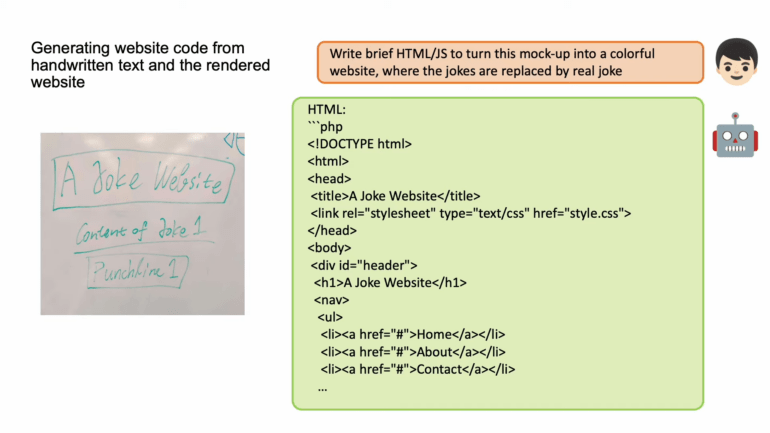

Like its larger counterpart, MiniGPT-4 can describe images or answer questions about the content of an image: for example, given a picture of a prepared dish, the model can output a (possibly) matching recipe (see featured image) or generate an appropriate image description for visually impaired people. Similar to Midjourney's new "/describe" feature, MiniGPT-4 could extract prompts from images, or at least some ideas. OpenAI's much-touted image-to-website feature, introduced at the GPT-4 launch, can also be done with MiniGPT-4, according to the researchers.

"Our findings reveal that MiniGPT-4 possesses many capabilities similar to those exhibited by GPT-4 like detailed image description generation and website creation from hand-written drafts," the paper states.

The development team has made the code, demos, and training instructions for MiniGPT-4 available on GitHub. The team has also announced a smaller version of the model that will run on a single Nvidia 3090 graphics card. The demo video below shows some examples.

Open-source AI is on the rise

The remarkable thing about MiniGPT-4 is that it is based on the Vicuna-13B LLM and the BLIP-2 Vision Language Model, open-source software that can be trained and fine-tuned for comparatively little money and without massive data and computational overhead.

The research team first trained MiniGPT-4 with about five million image-text pairs in ten hours on four Nvidia A100 cards. In a second step, the model was refined with 3,500 high-quality text-image pairs generated by an interaction between MiniGPT-4 and ChatGPT. ChatGPT corrected the incorrect or inaccurate image descriptions generated by MiniGPT-4.

The prompt the researchers gave ChatGPT for this step reads: "Fix the error in the given paragraph. Remove any repeating sentences, meaningless characters, not English sentences, and so on. Remove unnecessary repetition. Rewrite any incomplete sentences. Return directly the results without explanation. Return directly the input paragraph if it is already correct without explanation."
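
The refinement step described above can be sketched roughly as follows. This is an illustration only, not the researchers' code: `refine_pairs`, `describe_image`, and `correct_text` are hypothetical names, and the two stub functions merely simulate MiniGPT-4 and ChatGPT so the example runs without any model or API access. Only the prompt text is taken from the article.

```python
from typing import Callable, List, Tuple

# Prompt text as quoted in the article.
CORRECTION_PROMPT = (
    "Fix the error in the given paragraph. Remove any repeating sentences, "
    "meaningless characters, not English sentences, and so on. "
    "Remove unnecessary repetition. Rewrite any incomplete sentences. "
    "Return directly the results without explanation. "
    "Return directly the input paragraph if it is already correct "
    "without explanation."
)


def refine_pairs(
    images: List[str],
    describe_image: Callable[[str], str],  # hypothetical wrapper around the vision-language model
    correct_text: Callable[[str], str],    # hypothetical wrapper around the chat model
) -> List[Tuple[str, str]]:
    """Pair each image with a draft description that a second model has cleaned up."""
    pairs = []
    for image in images:
        draft = describe_image(image)
        cleaned = correct_text(f"{CORRECTION_PROMPT}\n\n{draft}")
        pairs.append((image, cleaned))
    return pairs


# Stub "models" (assumptions for illustration only).
def fake_describe(image: str) -> str:
    # Simulates a flawed draft that repeats a sentence.
    return f"A photo of {image}. A photo of {image}."


def fake_correct(prompt: str) -> str:
    # Simulates one kind of cleanup: dropping exact repeated sentences.
    draft = prompt.split("\n\n", 1)[1]
    sentences = []
    for s in draft.split(". "):
        s = s.strip().rstrip(".")
        if s and s not in sentences:
            sentences.append(s)
    return ". ".join(sentences) + "."


pairs = refine_pairs(["a bowl of ramen"], fake_describe, fake_correct)
print(pairs)
```

In a real setup the two callables would wrap actual model calls; the loop structure is the only part this sketch is meant to convey.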

This second step significantly improved the reliability and usability of the model - and required only seven minutes of training on a single Nvidia A100. The researchers themselves said they were surprised by the efficiency of their approach.
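
To put the two training stages in perspective, the reported figures can be converted to GPU-hours. The following is a back-of-the-envelope sketch that uses only the numbers quoted in this article; the helper name `gpu_hours` is ours, and the result says nothing about cost, which depends on hardware prices the article does not give.

```python
def gpu_hours(num_gpus: int, wall_clock_hours: float) -> float:
    """GPU-hours = number of GPUs multiplied by wall-clock hours."""
    return num_gpus * wall_clock_hours


# Stage 1: about ten hours on four Nvidia A100 cards.
stage1 = gpu_hours(4, 10.0)
# Stage 2: about seven minutes on a single Nvidia A100.
stage2 = gpu_hours(1, 7 / 60)

total = stage1 + stage2
print(f"Stage 1: {stage1:.2f} GPU-hours")
print(f"Stage 2: {stage2:.2f} GPU-hours")
print(f"Stage 2 share of total: {stage2 / total:.2%}")
```

By this rough count, the first stage accounts for about 40 GPU-hours, while the second stage adds well under one percent on top of that.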

MiniGPT-4's language model, Vicuna, follows the "Alpaca formula" and uses ChatGPT's output to fine-tune a Meta language model of the LLaMA family. Vicuna is said to be on par with Google Bard and ChatGPT, again with a relatively small training effort.

MiniGPT-4 is another example of the rapid progress the open-source community has made in a very short time. It suggests that the moat for pure AI model companies may not be that high: just yesterday, the open-source chatbot OpenAssistant was launched, trained with instructional data collected from volunteers and intended to eventually become an open alternative to ChatGPT.

Given this development, it would make sense for OpenAI to first focus on building a partner ecosystem using ChatGPT plugins for GPT-4, rather than training GPT-5 now. The research and training effort for a new model may be greater for OpenAI than the head start it might gain over competitors or the open-source community. By comparison, a chat ecosystem is harder for competitors to replicate and more economically sustainable. It can also have a strong lock-in effect on users.
