The Mistral AI team releases Mistral 7B, a 7.3 billion parameter language model that outperforms larger Llama models on benchmarks. The model can be used without restrictions under the Apache 2.0 license.

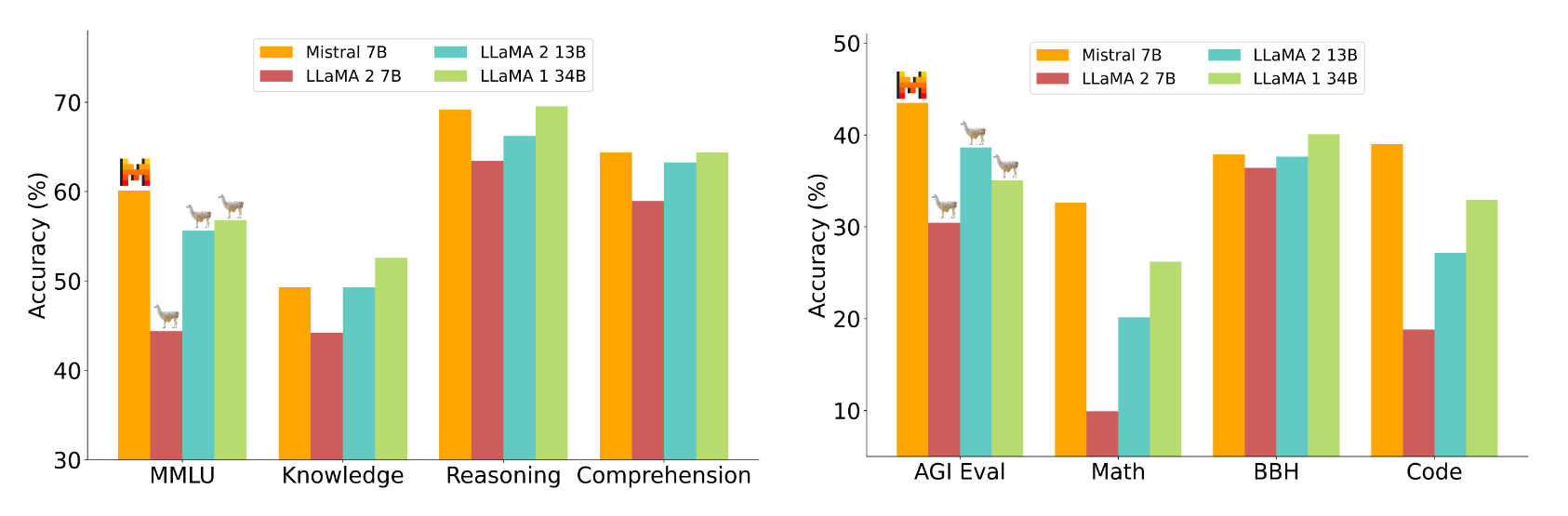

Mistral 7B outperforms the larger Llama 2 13B on all benchmarks measured and Llama 1 34B on many benchmarks, the Mistral team claims. In addition, Mistral 7B approaches the programming performance of CodeLlama 7B and still performs well in English language tasks.

Mistral 7B can be downloaded for free and deployed anywhere using the reference implementation, in any cloud (AWS/GCP/Azure) using the vLLM inference server and SkyPilot, or via Hugging Face. According to Mistral AI, the model can be easily adapted to new tasks such as chat or instruction following through fine-tuning.

Mistral AI compares Mistral 7B to the Llama 2 7B and 13B models in multiple domains, including reasoning, world knowledge, reading comprehension, math and code.

According to Mistral AI, Mistral 7B is on par with a theoretical Llama 2 model that is more than three times larger, while using less memory and delivering higher throughput. Mistral attributes the fact that it trails Llama 1 34B on knowledge questions to its lower parameter count.

Transformer architecture optimizations

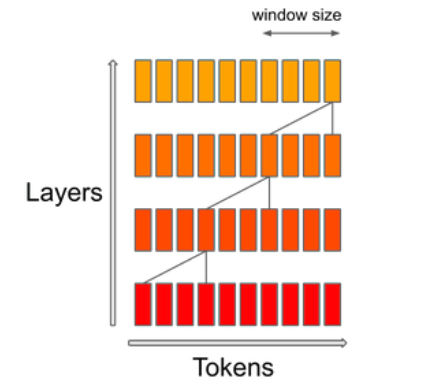

Mistral achieves greater efficiency through Grouped Query Attention (GQA), in which groups of query heads share a single set of key and value heads. This shrinks the key-value cache and speeds up inference in Transformer models while maintaining high model performance.
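
To make the idea concrete, here is a minimal Python sketch of the bookkeeping behind GQA: which key/value head each query head reads from, and how much key-value cache one token needs. The function names are hypothetical, and the configuration values (32 layers, 32 query heads, 8 key/value heads, head dimension 128, 2 bytes per value) are assumptions chosen for illustration; they are not taken from this article.

```python
# Illustrative sketch of grouped-query attention (GQA) bookkeeping.
# All names and configuration values here are assumptions for illustration.

def kv_head_for_query_head(query_head: int, n_heads: int, n_kv_heads: int) -> int:
    """Return the index of the key/value head shared by a given query head."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group_size = n_heads // n_kv_heads  # query heads per shared K/V head
    return query_head // group_size


def kv_cache_bytes_per_token(n_layers: int, n_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for one token across all layers."""
    # Factor 2: one key vector and one value vector per K/V head and layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


if __name__ == "__main__":
    n_layers, n_heads, n_kv_heads, head_dim = 32, 32, 8, 128  # assumed config

    # Each run of four consecutive query heads maps to the same K/V head.
    print([kv_head_for_query_head(h, n_heads, n_kv_heads) for h in range(n_heads)])

    # Standard multi-head attention is the special case n_kv_heads == n_heads.
    mha = kv_cache_bytes_per_token(n_layers, n_heads, head_dim)
    gqa = kv_cache_bytes_per_token(n_layers, n_kv_heads, head_dim)
    print(f"multi-head: {mha // 1024} KiB/token, grouped-query: {gqa // 1024} KiB/token")
```

Under these assumed values, the cache per token shrinks by the ratio of query heads to key/value heads, which is where the memory saving comes from.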

With Sliding Window Attention (SWA), each token attends only to a fixed-size window of preceding tokens rather than to the entire sequence. The goal is to achieve a balance between computational cost and model quality. According to Mistral, this doubles the speed for sequence lengths of 16k with a window of 4k.
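
The windowing rule can be sketched in a few lines of Python. This is a simplified illustration with hypothetical function names, not Mistral's implementation: it builds a causal sliding-window mask and counts how many query-key pairs are evaluated with and without the window. The pair count is only a rough proxy for compute and says nothing about measured speed, which depends on the attention kernels used.

```python
# Illustrative sketch of a causal sliding-window attention mask.
# Function names are hypothetical; this is not Mistral's implementation.
from typing import List, Optional


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True if token i may attend to token j.

    Token i sees itself and at most the window - 1 tokens before it.
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def attended_pairs(seq_len: int, window: Optional[int] = None) -> int:
    """Count query-key pairs evaluated under causal attention.

    With window=None this is full causal attention; otherwise each token
    attends to at most `window` positions.
    """
    if window is None:
        return seq_len * (seq_len + 1) // 2
    return sum(min(i + 1, window) for i in range(seq_len))


if __name__ == "__main__":
    # Small example: 5 tokens, window of 3.
    for row in sliding_window_mask(5, 3):
        print("".join("x" if allowed else "." for allowed in row))

    # Pair counts for the sequence length and window quoted in the article.
    full = attended_pairs(16 * 1024)
    windowed = attended_pairs(16 * 1024, 4 * 1024)
    print(f"full: {full}, windowed: {windowed}, ratio: {full / windowed:.2f}")
```

With a window, the number of pairs grows linearly with sequence length once the sequence is longer than the window, instead of quadratically.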

To demonstrate its versatility, Mistral AI fine-tuned Mistral 7B on instruction datasets publicly available on Hugging Face, resulting in the Mistral 7B Instruct model. According to Mistral, it outperforms all 7B models on MT-Bench and is comparable to 13B chat models.

More to come from Mistral AI

French startup Mistral AI made waves in June when it announced the largest European seed round at $105 million, without having a product. The team consists of former Meta and Google DeepMind employees. One of its high-profile investors is former Google CEO Eric Schmidt.

The company's business model is to distribute powerful open-source models and charge for specific additional features. According to a leaked pitch letter, its top-of-the-line models could be offered as paid products.

The letter also reveals that Mistral plans to release a "family of text generation models" by the end of 2023 that will "significantly outperform" ChatGPT with GPT-3.5 and Google Bard. Part of this family of models will be open-source. So Mistral 7B should be just the beginning.