Researchers present a new prompting method that helps GPT-3 answer complex questions more accurately.

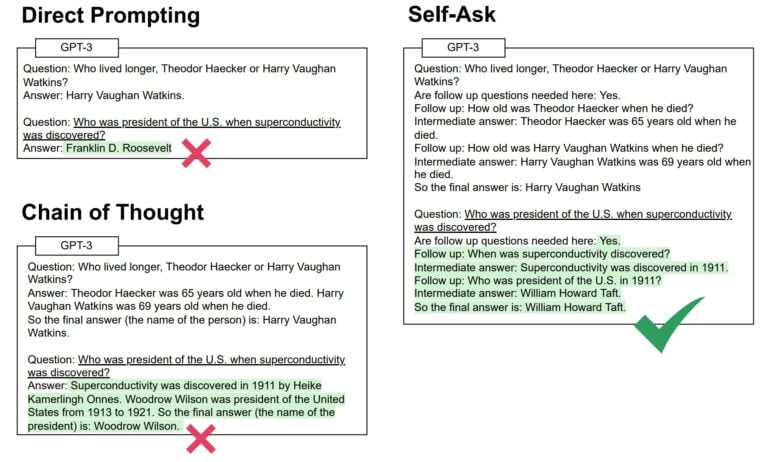

The researchers call their method "self-ask": instead of answering a question directly, the language model asks itself follow-up questions whose answers lead to the final answer. Breaking a question down step by step in this way improves GPT-3's ability to answer complex questions correctly.

Self-ask is fully automatic: the prompt first shows a worked example of the self-ask process and then poses the actual question. GPT-3 applies the same process to the new question on its own, asking as many follow-up questions as it needs to reach the final answer.
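To make this prompt structure concrete, here is a minimal Python sketch that assembles a self-ask prompt: one worked example followed by the new question. The labels ("Follow up:", "Intermediate answer:", "So the final answer is:") are modeled on the researchers' self-ask format, but the exact exemplar text and the helper name `build_self_ask_prompt` are illustrative assumptions, not the authors' published prompt.

```python
# Illustrative one-shot exemplar; the wording is an assumption modeled on the
# self-ask format, not the researchers' exact prompt.
EXEMPLAR = """Question: Who lived longer, Muhammad Ali or Alan Turing?
Are follow up questions needed here: Yes.
Follow up: How old was Muhammad Ali when he died?
Intermediate answer: Muhammad Ali was 74 years old when he died.
Follow up: How old was Alan Turing when he died?
Intermediate answer: Alan Turing was 41 years old when he died.
So the final answer is: Muhammad Ali
"""


def build_self_ask_prompt(question: str, exemplar: str = EXEMPLAR) -> str:
    """Hypothetical helper: prepend the worked example, then pose the new question."""
    # The trailing cue leaves it to the model to decide whether follow-ups are needed.
    return f"{exemplar}\nQuestion: {question}\nAre follow up questions needed here:"


prompt = build_self_ask_prompt(
    "Who was president of the U.S. when superconductivity was discovered?"
)
print(prompt)
```

The model then continues the text after the final cue, reproducing the follow-up/intermediate-answer pattern it saw in the example.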

Self-ask builds on chain-of-thought prompting, but instead of producing one continuous, unstructured chain of thought, it splits the reasoning into several short, clearly delimited steps, each consisting of a follow-up question and its answer.
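Because each step is marked by a fixed label, a self-ask completion can be split mechanically into question/answer pairs, something a free-form chain of thought does not allow. This sketch assumes the labels "Follow up:", "Intermediate answer:", and "So the final answer is:"; `parse_self_ask` is a hypothetical helper, and the sample completion is hand-written, not real GPT-3 output.

```python
import re

# Label strings are assumptions based on the self-ask format; adjust if yours differ.
FOLLOW_UP_RE = re.compile(r"^Follow up: (.+)$", re.MULTILINE)
ANSWER_RE = re.compile(r"^Intermediate answer: (.+)$", re.MULTILINE)
FINAL_RE = re.compile(r"^So the final answer is: (.+)$", re.MULTILINE)


def parse_self_ask(completion: str):
    """Return the (follow-up, intermediate answer) pairs and the final answer (or None)."""
    questions = [q.strip() for q in FOLLOW_UP_RE.findall(completion)]
    answers = [a.strip() for a in ANSWER_RE.findall(completion)]
    final = FINAL_RE.search(completion)
    return list(zip(questions, answers)), (final.group(1).strip() if final else None)


# Hand-written sample completion, not actual GPT-3 output.
SAMPLE = """Yes.
Follow up: When was superconductivity discovered?
Intermediate answer: Superconductivity was discovered in 1911.
Follow up: Who was president of the U.S. in 1911?
Intermediate answer: William Howard Taft.
So the final answer is: William Howard Taft"""

steps, final = parse_self_ask(SAMPLE)
for question, answer in steps:
    print(f"{question} -> {answer}")
print("Final:", final)
```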

Self-Ask improves GPT-3 answers

The researchers also used their method to investigate what they call the "compositionality gap": cases in which a large language model answers each sub-question correctly but still fails to combine those answers into the correct answer to the overall question.
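The gap can be expressed as a ratio. This sketch assumes the definition as we understand it from the paper: among the two-hop questions for which the model answers both sub-questions correctly, the share whose overall answer is still wrong. The `Result` records are made-up values for illustration, and returning 0.0 when no question qualifies is our own convention.

```python
from dataclasses import dataclass


@dataclass
class Result:
    """Correctness of one two-hop question (illustrative record)."""
    sub1_correct: bool   # first sub-question answered correctly
    sub2_correct: bool   # second sub-question answered correctly
    final_correct: bool  # overall question answered correctly


def compositionality_gap(results: list[Result]) -> float:
    """Fraction of wrong final answers among questions whose sub-questions were both right."""
    both_right = [r for r in results if r.sub1_correct and r.sub2_correct]
    if not both_right:
        return 0.0  # undefined without qualifying questions; 0.0 is a convention here
    wrong = sum(not r.final_correct for r in both_right)
    return wrong / len(both_right)


# Made-up records: three questions have both sub-answers right; two of those fail overall.
records = [
    Result(True, True, True),
    Result(True, True, False),
    Result(True, False, False),
    Result(True, True, False),
]
print(compositionality_gap(records))  # 0.6666666666666666
```

Note that the third record does not count toward the gap at all: a wrong sub-answer is a knowledge failure, not a composition failure.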

One example is the question of who was US president when superconductivity was discovered. To answer it, GPT-3 must first determine when superconductivity was discovered and then work out which US president was in office at that time.

In this example, direct prompting and chain-of-thought prompting fail, while the self-ask method leads to the correct result.

According to the researchers, self-ask narrows the compositionality gap in the GPT-3 models described earlier and, depending on the model tested, can even close it. Put simply, GPT-3 is more likely to answer complex questions correctly when it uses self-ask.

Accuracy increases with a combined Google search

Combining self-ask with a Google search yields correct answers even more reliably. Each simple follow-up question the language model generates is sent to Google, and the answer found there is fed back into the self-ask process as the intermediate answer. Once all follow-up questions have been answered, the language model produces the final answer.
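The loop can be sketched as follows: let the model write until it is about to give an intermediate answer, send its latest follow-up question to a search engine, splice the result into the prompt, and repeat until a final answer appears. `complete` and `search` are hypothetical callables standing in for whatever language-model and search APIs you use; we assume `complete` returns text that ends just before the first stop sequence, as many completion APIs do. The scripted stubs only replay a hand-written trace and are not real model or Google output.

```python
from typing import Callable, Optional

FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"
FINAL = "So the final answer is:"

# Hypothetical signatures: complete(prompt, stop_sequences) -> generated text,
# assumed to end just before the first stop sequence; search(query) -> answer text.
LM = Callable[[str, list[str]], str]
Search = Callable[[str], str]


def self_ask_with_search(prompt: str, complete: LM, search: Search,
                         max_steps: int = 5) -> Optional[str]:
    """Alternate LM generation with search lookups until a final answer appears."""
    for _ in range(max_steps):
        # Let the model write until it would answer its own follow-up question.
        chunk = complete(prompt, [INTERMEDIATE])
        prompt += chunk
        if FINAL in chunk:
            return chunk.split(FINAL, 1)[1].strip()
        lines = chunk.strip().splitlines()
        if not lines or not lines[-1].startswith(FOLLOW_UP):
            return None  # neither a follow-up question nor a final answer
        question = lines[-1][len(FOLLOW_UP):].strip()
        # Use the search result in place of the model's own intermediate answer.
        prompt += f"{INTERMEDIATE} {search(question)}\n"
    return None  # gave up after max_steps follow-up questions


def make_scripted_lm(responses: list[str]) -> LM:
    """Stub LM that replays canned completions (illustration only)."""
    it = iter(responses)
    return lambda prompt, stop: next(it)


# Hand-written trace for the superconductivity example; not real model output.
lm = make_scripted_lm([
    " Yes.\nFollow up: When was superconductivity discovered?\n",
    "Follow up: Who was president of the U.S. in 1911?\n",
    "So the final answer is: William Howard Taft\n",
])
index = {
    "When was superconductivity discovered?": "1911",
    "Who was president of the U.S. in 1911?": "William Howard Taft",
}
answer = self_ask_with_search(
    "Question: Who was president of the U.S. when superconductivity was discovered?\n"
    "Are follow up questions needed here:",
    lm,
    lambda q: index.get(q, ""),
)
print(answer)  # William Howard Taft
```

Stopping generation at "Intermediate answer:" is what makes the integration simple: the model never gets to write its own (possibly wrong) intermediate answer, so the search result takes its place.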

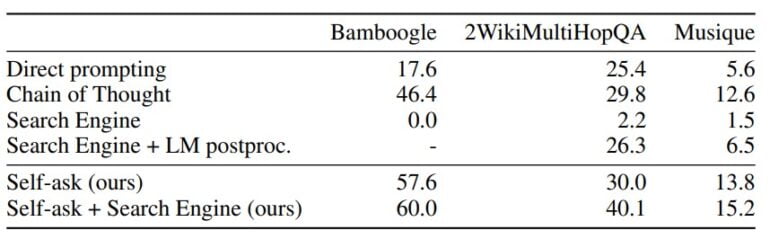

In tests on several multi-hop question datasets, both self-ask and self-ask with Google search performed significantly better than direct prompting, chain-of-thought prompting, and Google search alone. For the Google-only baseline, the answer snippet Google displays, if there was one, or otherwise the text of the first search result was taken as the answer.
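The Google-only baseline described above reduces to a simple fallback rule. In this hypothetical sketch, `answer_box` stands for Google's answer snippet (or `None` if none was shown) and `results` for the text of the search results in ranking order; how those are retrieved from Google is deliberately left out.

```python
from typing import Optional


def search_baseline_answer(answer_box: Optional[str], results: list[str]) -> Optional[str]:
    """Prefer Google's answer snippet; otherwise fall back to the first result's text."""
    if answer_box:
        return answer_box
    return results[0] if results else None  # None if the search returned nothing


print(search_baseline_answer(None, ["Text of the first result", "Second result"]))
```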

According to the research team, GPT-3 combined with Google can answer questions that neither GPT-3 nor Google could answer on its own. GPT-3 was trained in 2019, so more recent information is not stored in the model.

Regarding the compositionality gap, the researchers made another interesting finding: the gap did not shrink as model size increased. The researchers conclude from this "surprising result" that while larger models store and retrieve more information, they have no advantage over smaller models when it comes to the quality of their inferences.

> We've found a new way to prompt language models that improves their ability to answer complex questions.
>
> Our Self-ask prompt first has the model ask and answer simpler subquestions. This structure makes it easy to integrate Google Search into an LM. Watch our demo with GPT-3
>
> Ofir Press (@OfirPress), October 4, 2022

OpenAI (WebGPT), DeepMind (Sparrow), and Meta (BlenderBot 3) have already introduced chatbots based on large language models that can also search the Internet for answers to users' questions.