New prompting method could improve understanding between humans and LLMs

Misunderstandings are common not only between people but also between humans and large language models (LLMs). A new prompting method could help.

When misunderstandings occur, LLMs can give unhelpful answers or, worse, start to hallucinate. To counter this, researchers at the University of California, Los Angeles (UCLA) have developed a new prompting technique called "Rephrase and Respond" (RaR) that can improve how well LLMs answer questions.

New prompting technique: Rephrase and Respond

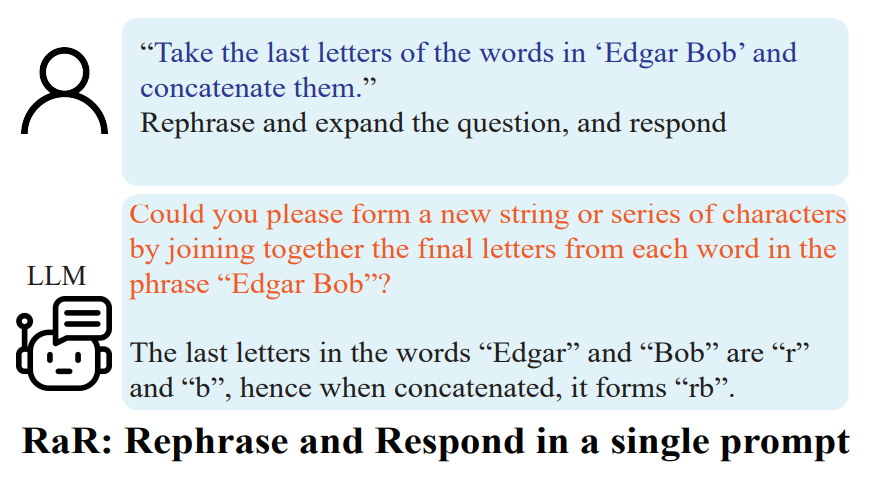

The RaR method allows LLMs to rephrase and expand on questions asked by humans before generating a response. The basic idea is that if you ask a clearer question, you will get a better answer.

The process resembles the way people sometimes rephrase a question in their mind to understand it better before answering. Giving the LLM a chance to clarify the question before it answers can substantially improve the accuracy of its answers.

One-step RaR:

"{question}"

Rephrase and expand the question, and respond.
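As a rough illustration, the one-step template can be filled in programmatically. This is a minimal sketch, not the researchers' code: the instruction text comes from the prompt above, while putting the quoted question on its own line and the names `ONE_STEP_RAR_TEMPLATE` and `one_step_rar_prompt` are assumptions made for the example.

```python
# Instruction text from the one-step RaR prompt; placing the quoted question
# on its own line is an assumption about formatting.
ONE_STEP_RAR_TEMPLATE = '"{question}"\nRephrase and expand the question, and respond.'


def one_step_rar_prompt(question: str) -> str:
    """Wrap a user question in the one-step RaR instruction (hypothetical helper)."""
    # str.format only fills the placeholder in the template, so braces
    # inside the question itself are passed through unchanged.
    return ONE_STEP_RAR_TEMPLATE.format(question=question)


if __name__ == "__main__":
    # Hypothetical example question, not taken from the study.
    print(one_step_rar_prompt("Was the author of Hamlet born in an even month?"))
```

The resulting string would then be sent to the model as a single prompt, so the rephrasing and the answer arrive in one response.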

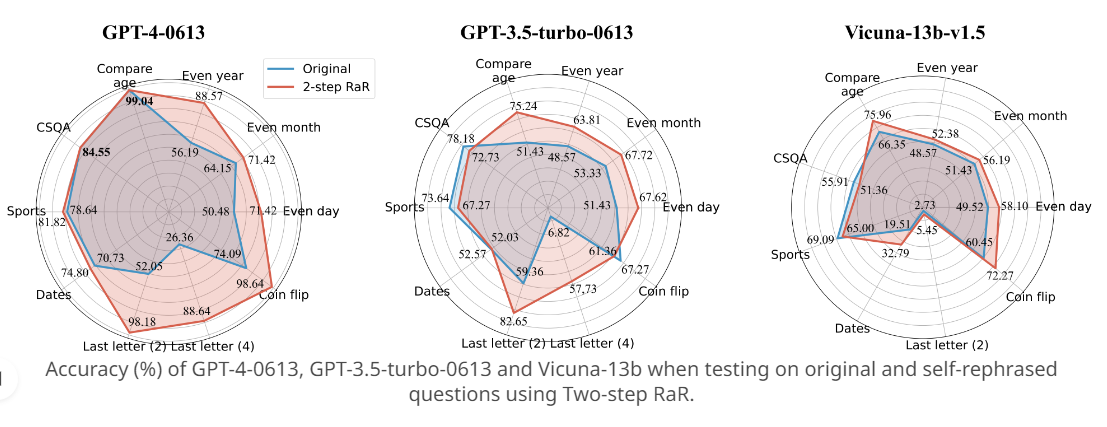

In the researchers' experiments, RaR raised GPT-4's accuracy on some tasks it had previously struggled with to nearly 100 percent.

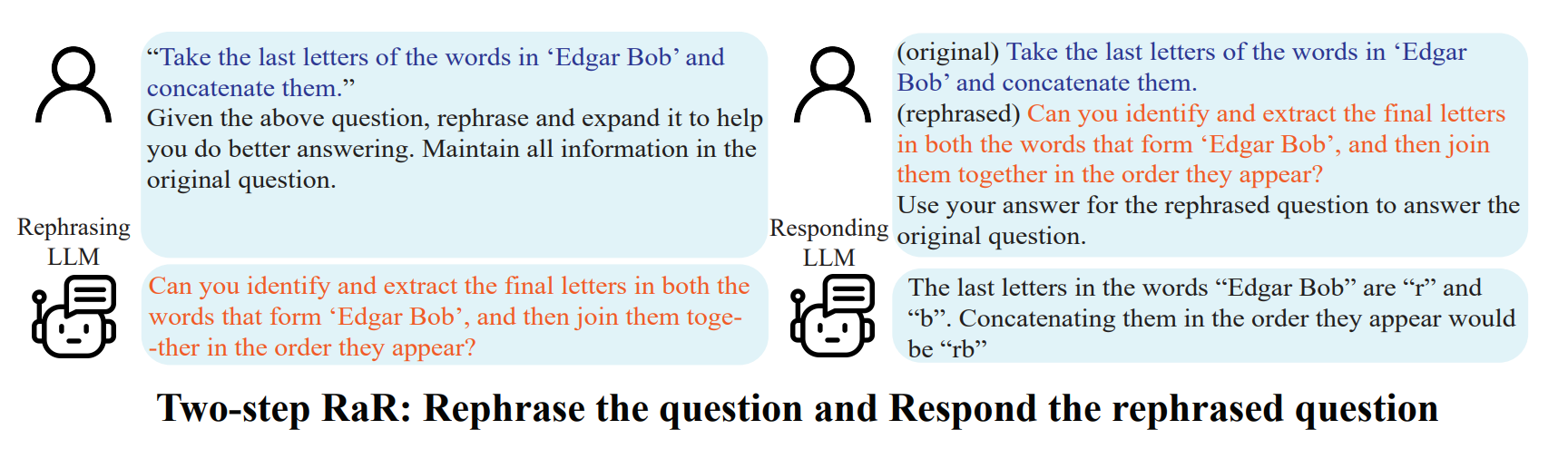

Other LLMs such as GPT-3.5 and Vicuna also showed significant performance improvements. This is especially true for a two-step variant of RaR, in which a capable LLM such as GPT-4 first rephrases and expands the original question, and the rephrased question is then passed, together with the original, to the model that generates the answer.

Two-step RaR:

"{question}"

Given the above question, rephrase and expand it to help you do better answering. Maintain all information in the original question.
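The two-step flow can be sketched as a small pipeline with separate rephrasing and responding models. This is a hedged sketch under stated assumptions: `LLM` stands for any hypothetical function that sends a prompt to a model and returns its reply, the step-one instruction is copied from the prompt above, and the step-two wording that combines the original and rephrased questions is a placeholder of our own, not the prompt used in the study.

```python
from typing import Callable

# Hypothetical type: any function that sends a prompt to an LLM and returns its reply.
LLM = Callable[[str], str]

# Step-one instruction, verbatim from the two-step RaR prompt.
REPHRASE_INSTRUCTION = (
    "Given the above question, rephrase and expand it to help you do better "
    "answering. Maintain all information in the original question."
)


def two_step_rar(question: str, rephrasing_llm: LLM, responding_llm: LLM) -> str:
    # Step 1: a (typically stronger) model rephrases and expands the question.
    rephrased = rephrasing_llm(f'"{question}"\n{REPHRASE_INSTRUCTION}')

    # Step 2: the responding model sees both the original and the rephrased question.
    # This combining wording is a hypothetical placeholder, not the paper's prompt.
    answer_prompt = (
        f"Original question: {question}\n"
        f"Rephrased question: {rephrased}\n"
        "Answer the original question, using the rephrased version as clarification."
    )
    return responding_llm(answer_prompt)


if __name__ == "__main__":
    # Stub models so the sketch runs without any API access.
    def stub_rephraser(prompt: str) -> str:
        return "EXPANDED VERSION OF THE QUESTION"

    def stub_responder(prompt: str) -> str:
        return "Responder received:\n" + prompt

    print(two_step_rar("Was the author of Hamlet born in an even month?",
                       stub_rephraser, stub_responder))
```

Keeping the two models as separate parameters reflects the idea that a strong model's rephrasing can be reused by a weaker responding model.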

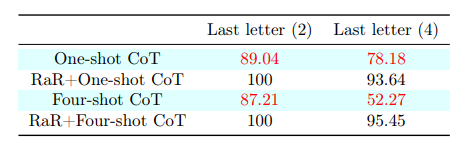

The researchers compared the RaR method with the well-known Chain-of-Thought (CoT) technique. Both methods aim to improve the performance of LLMs through a simple prompt addition.

In the researchers' experiments, RaR improved performance on tasks where CoT did not. In addition, RaR and CoT can be combined by adding the typical "let's think step by step" instruction to the RaR prompt.
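Mechanically, the combination amounts to appending a chain-of-thought trigger to a RaR prompt. The sketch below is a generic illustration under assumptions: it appends the widely used zero-shot CoT phrase "Let's think step by step." to the one-step RaR prompt, whereas the study's own RaR + CoT wording differs slightly. The helper names are hypothetical.

```python
# Widely used zero-shot chain-of-thought trigger phrase.
COT_TRIGGER = "Let's think step by step."

# Built from the one-step RaR prompt quoted earlier; the line break is an assumed layout.
ONE_STEP_RAR_TEMPLATE = '"{question}"\nRephrase and expand the question, and respond.'


def with_cot(prompt: str) -> str:
    """Append the chain-of-thought trigger to an existing prompt (hypothetical helper)."""
    return f"{prompt} {COT_TRIGGER}"


def rar_cot_prompt(question: str) -> str:
    """One-step RaR prompt with a CoT trigger appended (assumed combination)."""
    return with_cot(ONE_STEP_RAR_TEMPLATE.format(question=question))


if __name__ == "__main__":
    print(rar_cot_prompt("Take the last letters of the names Elon, Larry and concatenate them."))
```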

RaR + CoT:

Given the above question, rephrase and expand it to help you do better answering. Lastly, let's think step by step to answer.

In the "Last Letter Concatenation" task, which is very demanding for LLMs, the model has to concatenate the last letters of a given list of names into a new word. With the RaR + CoT prompt, GPT-4 solved this task with nearly 100 percent accuracy, even for lists of up to four names.
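To make the task concrete, the reference answer can be computed directly, which is how a model's output could be checked. This is a minimal sketch: the question wording in `make_question` and the lenient exact-match rule in `is_correct` are hypothetical choices, not the study's evaluation code.

```python
def last_letter_concatenation(names: list[str]) -> str:
    """Reference answer: the last letter of each name, joined in order."""
    return "".join(name.strip()[-1] for name in names)


def make_question(names: list[str]) -> str:
    # Hypothetical question wording, not copied from the study.
    return f'Take the last letters of the names {", ".join(names)} and concatenate them.'


def is_correct(model_answer: str, names: list[str]) -> bool:
    """Exact match, ignoring case and any quotes or periods at either end."""
    cleaned = model_answer.strip().strip('".').lower()
    return cleaned == last_letter_concatenation(names).lower()


if __name__ == "__main__":
    names = ["Elon", "Larry", "Sergey", "Bill"]  # four names, the longest case mentioned
    print(make_question(names))
    print(last_letter_concatenation(names))  # nyyl
```

For the four names above, the expected answer is "nyyl", so a reply such as `"NYYL".` would count as correct under this check.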

The questions and examples from the study, as well as the prompt variations, are available in the paper and on GitHub.
