Study reveals AI models have hidden capabilities they can't access through normal prompts

A new research method shows how AI systems learn concepts and suggests they are more capable than previously thought. Analysis in "concept space" yields surprising results and offers clues for better training and prompt engineering.

Researchers have developed a new method to analyze how AI models learn from training data by examining their dynamics in "concept space." In this abstract coordinate system, each axis represents an independent concept underlying the data generation process, such as an object's shape, color, or size.

"By characterizing learning dynamics in this space, we identify how the speed at which a concept is learned, and hence the order of concept learning, is controlled by properties of the data we term concept signal," the researchers explain in their study.

The concept signal measures how sensitive the data generation process is to changes in a concept's values. The stronger the signal for a specific concept, the faster the model learns it. For example, models learned color more quickly when the difference between red and blue was more pronounced in the dataset.
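The idea of concept signal can be made concrete with a small sketch. The code below is an illustration under stated assumptions, not the researchers' implementation: `generate` is a hypothetical toy data generator, `color_gap` and `size_gap` are invented parameters that control how far apart concept values sit in the data, and `concept_signal` approximates sensitivity with a finite difference.

```python
import math

def generate(color, size, color_gap=1.0, size_gap=1.0):
    """Hypothetical toy generator: maps two concept values to a 2-D data point.

    color_gap and size_gap (assumed names) scale how strongly each concept
    shows up in the generated data.
    """
    return (color_gap * color, size_gap * size)

def concept_signal(generator, concepts, index, eps=1e-4):
    """Finite-difference estimate of how far the data moves when one concept changes."""
    shifted = list(concepts)
    shifted[index] += eps
    base = generator(*concepts)
    moved = generator(*shifted)
    return math.dist(base, moved) / eps

# A dataset in which red and blue are three times further apart than small and large.
def strong_color(color, size):
    return generate(color, size, color_gap=3.0, size_gap=1.0)

color_signal = concept_signal(strong_color, (0.0, 0.0), index=0)
size_signal = concept_signal(strong_color, (0.0, 0.0), index=1)
print(color_signal > size_signal)
```

In this toy setup the color axis carries the stronger signal, which corresponds to the case the article describes: a more pronounced difference between red and blue, and therefore a concept the model would be expected to learn sooner.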

Hidden capabilities cannot be addressed via simple prompts

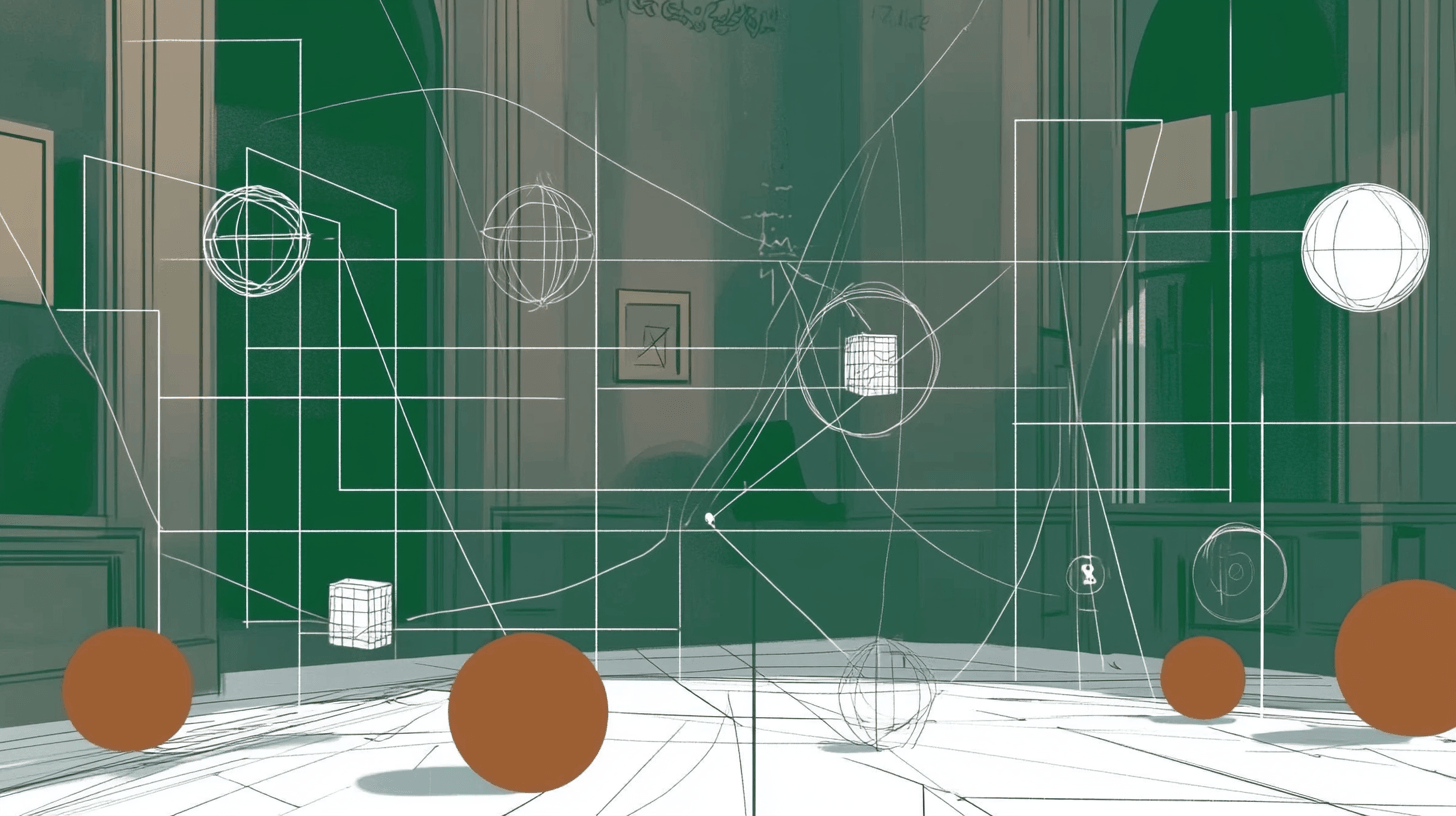

The researchers observed sudden changes of direction in the model's learning dynamics within concept space, marking a shift from concept memorization to generalization. To demonstrate this, they trained the model with images of "large red circles," "large blue circles," and "small red circles." The combination of "small" and "blue" was not shown during training, so the model had to generalize to create a "small blue circle." Simple text prompts like "small blue circle" failed to produce the desired result.

However, the researchers successfully generated the intended images using two methods: "latent interventions", or the manipulation of model activations responsible for color and size, and "overprompting", or the intensification of color specifications in prompts using RGB values.

Both techniques enabled the model to correctly generate a "small blue circle," even though it couldn't do so with standard prompts. This showed that while the model understood and could combine the concepts of "small" and "blue" (generalization), it hadn't learned to access this combination through simple text prompts.
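The gap between what a model can generate and what a plain prompt elicits can be pictured with a deliberately tiny toy. This is a cartoon under loud assumptions, not the study's diffusion model: the lookup table, `encode_prompt`, `decode`, and `intervene` are all hypothetical names, and the two-number latent is an invented stand-in for the activations responsible for color and size.

```python
# Toy stand-in only. Latent = (color, size): color 0 = red, 1 = blue; size 0 = small, 1 = large.
SEEN_PROMPTS = {
    "large red circle": (0.0, 1.0),
    "large blue circle": (1.0, 1.0),
    "small red circle": (0.0, 0.0),
}

def encode_prompt(prompt):
    """Prompt pathway: knows only the seen combinations.

    An unseen prompt collapses onto the seen prompt that shares the most words.
    """
    if prompt in SEEN_PROMPTS:
        return SEEN_PROMPTS[prompt]
    words = set(prompt.split())
    best = max(SEEN_PROMPTS, key=lambda p: len(words & set(p.split())))
    return SEEN_PROMPTS[best]

def decode(latent):
    """Decoder: can render any point in the latent space, including unseen combinations."""
    color = "blue" if latent[0] > 0.5 else "red"
    size = "large" if latent[1] > 0.5 else "small"
    return f"{size} {color} circle"

def intervene(latent, color=None, size=None):
    """Latent intervention: overwrite selected latent coordinates directly."""
    current_color, current_size = latent
    return (
        current_color if color is None else color,
        current_size if size is None else size,
    )

# Naive prompting misses the unseen combination.
naive = decode(encode_prompt("small blue circle"))

# Start from a seen prompt, then set the color coordinate to blue by hand.
patched = decode(intervene(encode_prompt("small red circle"), color=1.0))

print(naive)
print(patched)
```

Here the decoder is able to produce a small blue circle, but only the intervention reaches that point in the latent space; the prompt pathway falls back to a combination it has already seen.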

Hidden capabilities even in larger models?

The team expanded their research to real datasets, including CelebA, which contains face images with various attributes like gender and smiling. They found evidence of hidden capabilities here as well. Through latent interventions, they could generate images of smiling women, while the model struggled with basic prompts. An initial experiment with Stable Diffusion 1.4 also demonstrated that overprompting could produce unusual images, such as a triangular credit card.

The team therefore hypothesizes that a general claim about the emergence of hidden capabilities may hold: "Generative models possess latent capabilities that emerge suddenly and consistently during training, though a model might not exhibit these capabilities under naive input prompting."
