New Tencent AI model Hunyuan3D 2.0 turns 2D images into detailed 3D objects

Tencent has released version 2.0 of Hunyuan3D, an open-source generative AI system that creates textured 3D models from regular images.

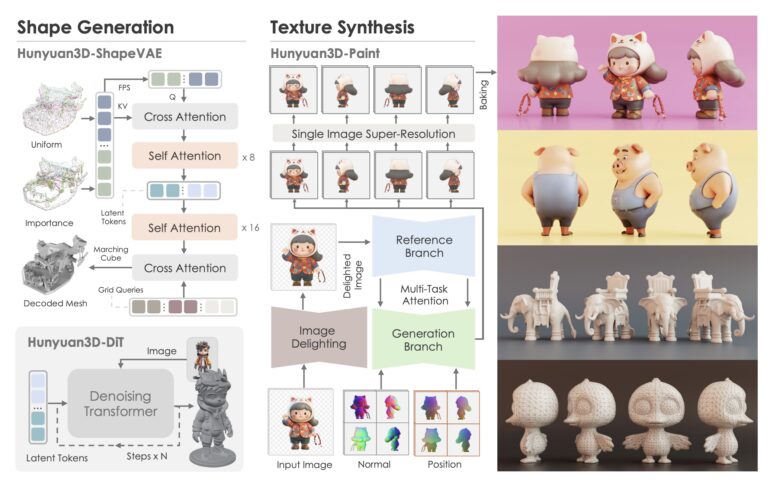

The system splits the work between two specialized components: one that handles the basic 3D shapes, and another that adds realistic textures.

Video: Tencent

Under the hood, shape generation is handled by Hunyuan3D-DiT, a diffusion transformer model that captures an object's core geometry in a compressed representation. From that basic structure, it generates a 3D shape that closely matches the input image.

Texturing is handled by Hunyuan3D-Paint, which uses geometric information such as surface orientation and position to create textures that look natural from any viewing angle. It also strips the lighting effects out of the original image, so the resulting textures hold up under any lighting conditions.
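Why removing the original lighting matters can be shown with a toy example. The sketch below is a simplified illustration, not Hunyuan3D-Paint's actual method: it assumes a plain diffuse (Lambertian) surface lit by a single directional light, and all names and values are hypothetical. A texture copied straight from a photo already contains the photo's shading, so a game engine or renderer that lights it again effectively shades it twice.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(albedo, normal, light_dir):
    """Toy diffuse shading: color = albedo * max(0, n . l)."""
    n = normalize(normal)
    l = normalize(light_dir)
    intensity = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(c * intensity for c in albedo)


# Hypothetical surface color with no lighting baked in.
albedo = (0.8, 0.2, 0.2)
normal = (0.0, 0.0, 1.0)

# The source photo was lit from 60 degrees off the surface normal.
photo_light = (math.sin(math.radians(60)), 0.0, math.cos(math.radians(60)))
baked_texture = lambert(albedo, normal, photo_light)  # shading copied from the photo

# Re-render the object in a new scene, lit head-on.
scene_light = (0.0, 0.0, 1.0)
delit_result = lambert(albedo, normal, scene_light)         # lighting-free texture
baked_result = lambert(baked_texture, normal, scene_light)  # photo shading applied twice

print("delit:", delit_result)
print("baked:", baked_result)
```

With the lighting-free texture, the head-on render shows the true surface color; with the baked texture, the surface comes out at half brightness because the photo's shading is multiplied in a second time.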

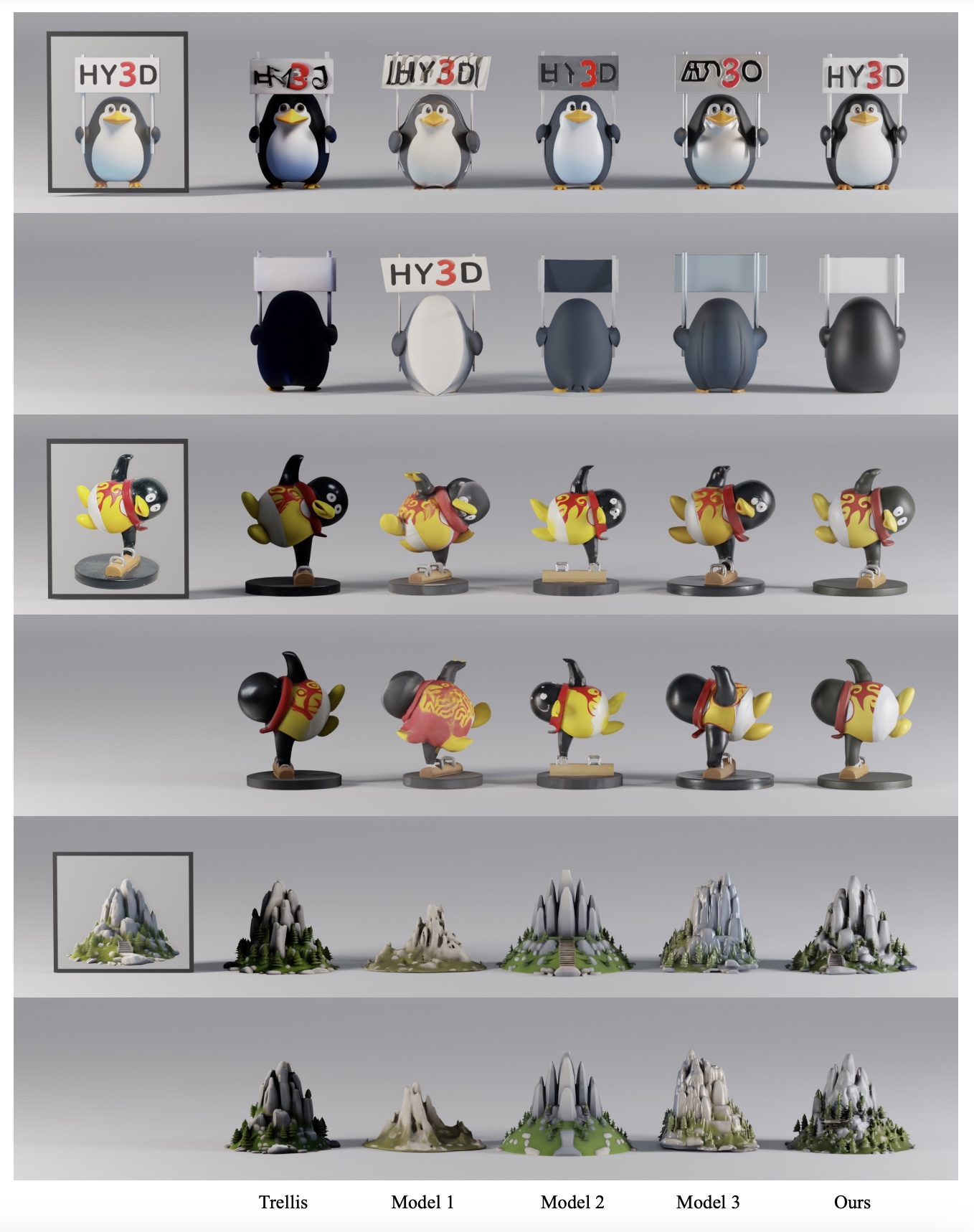

The latest version offers several improvements over its predecessor. Shape generation now captures fine details such as edges and corners more accurately, which improves the reproduction of faces, surface patterns, and text. According to the researchers, the generated models come out clean, without the holes and artifacts that often plague 3D generation.

In the researchers' tests, Hunyuan3D 2.0 outperforms comparable tools in shape generation, texturing, and overall model quality. One striking example shows the system accurately reproducing readable text on a sign held by a penguin model.

Hunyuan3D-Studio: Bringing AI 3D creation to the web

To make the technology more accessible, Tencent launched Hunyuan3D-Studio, a web-based toolkit for 3D creation. Users can turn sketches into 3D models, simplify complex designs, and even animate characters, though access requires logging in through WeChat, QQ, or a Chinese phone number.

By releasing the system as open source, Tencent hopes to create a foundation for future 3D AI models and encourage further research. The move comes as other companies, including Nvidia, Stability AI, and Meta, push forward with their own advances in AI-powered 3D generation.
