A survey by the Associated Press found that editorial teams are still looking for the right use cases for generative AI in newsrooms. The technology is already widely used, but several ethical issues remain unresolved.

The Associated Press surveyed more than 290 media professionals worldwide about their use of generative AI. The survey ran throughout December 2023 and drew on a highly experienced sample: participants had worked in the news business for an average of 18 years.

About 62% of the responses came from North America, 25% from Europe, and the rest from Asia, Africa, Oceania, and South America. Most respondents were editors, but decision-makers and technical directors also took part.

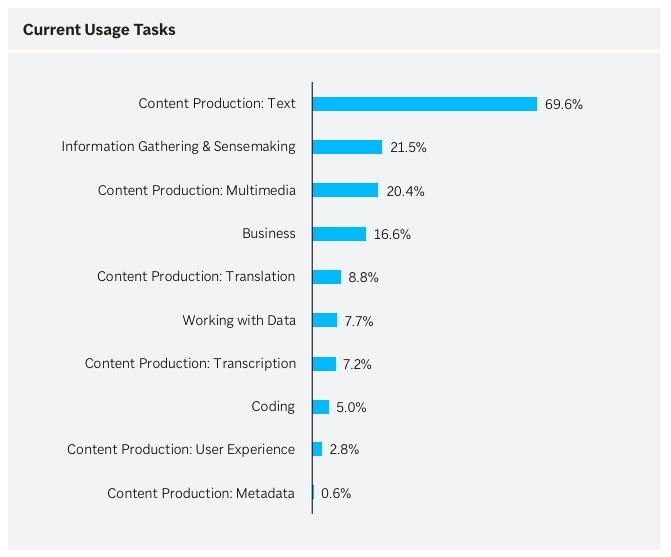

More than 80% of respondents said they were already fairly familiar with generative AI. Nearly three-quarters use the technology in some form, most often for text production such as headlines, social media posts, newsletters, and article drafts. It is also used for multimedia content, translation, and transcription.

According to the survey, media professionals want to make greater use of generative AI for research, topic discovery, content curation, and data analysis and processing. Chatbots and personalized user experiences are also being explored.

Whether the technology actually saves time and effort is still unclear. According to respondents, efficiency gains vary widely from task to task. Often the technology creates new work of its own, such as crafting effective prompts or checking and correcting the output. Further research should clarify which tasks are genuinely suited to generative AI.

Newsroom workflows and roles are already changing as AI becomes more widespread. For example, new positions are being created for AI experts, prompt designers, and fact-checkers, as well as on the product side. Media companies are also hiring software engineers to integrate and maintain AI systems.

Fear of AI mistakes dominates ethical concerns

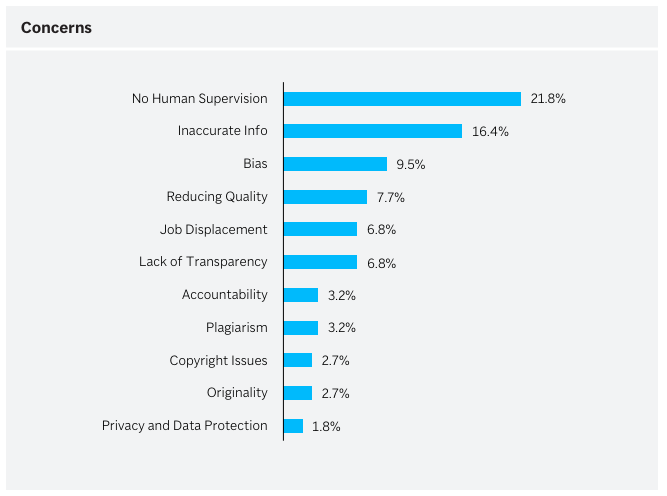

Among the ethical issues, respondents are most worried about a lack of human oversight, misinformation, and bias. To address these risks, certain use cases, such as the fully automated generation of entire articles, may need to be prohibited or restricted.

Guidelines for responsible use already exist in some newsrooms. However, respondents say these need to be far more concrete and tailored to individual journalistic tasks. Mandatory training and monitoring mechanisms are also needed, and media companies are only just beginning to put them in place.

An open question is whether tech companies should be allowed to train their language models on content from news organizations. Respondents are split roughly in half on this issue: such training could improve the accuracy and reliability of the models, but publishers would lose control over how their content is used and would risk copyright infringement. Some publishers are already suing OpenAI and others for copyright infringement.