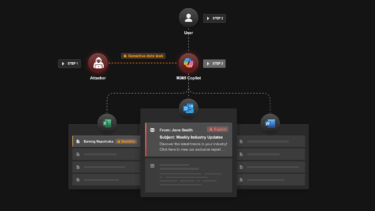

The National Institute of Standards and Technology (NIST) has released a comprehensive report on adversarial machine learning (AML) that provides a taxonomy of concepts, terminology, and mitigation methods for AI security. The report, written by experts from NIST, Northeastern University, and Robust Intelligence, reviews the AML literature and organizes the major types of ML methods, attacker goals, and attacker capabilities into a conceptual hierarchy. It also describes methods for mitigating and managing the consequences of attacks and highlights open challenges across the lifecycle of AI systems. With a glossary for non-expert readers, the report aims to establish a common language for future AI security standards and best practices. The full 106-page report references real-world attacks such as prompt injection and is available for free.
