Nvidia CEO Jensen Huang claims AI no longer hallucinates, apparently hallucinating himself

Key Points

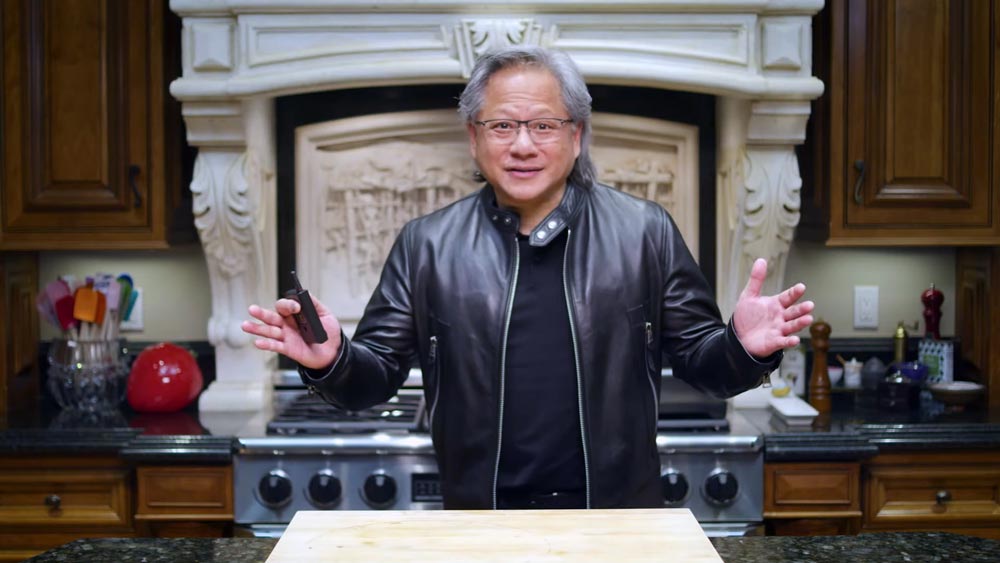

- Nvidia CEO Jensen Huang claimed in a CNBC interview that generative AI is "no longer hallucinating," a statement that is factually incorrect.

- Hallucinations remain a fundamental, structural problem rooted in the probability-based architecture of language models, with no technical breakthrough to support Huang's assertion.

- Solving the hallucination problem would represent a transformative shift for the entire AI industry. The interview was published as Nvidia's major customers, Meta, Amazon, and Google, faced stock market pressure over plans to invest billions more in AI infrastructure.

Anyone who thinks AI is in a bubble might feel vindicated by a recent CNBC interview with Nvidia CEO Jensen Huang.

The interview dropped after Nvidia's biggest customers, Meta, Amazon, and Google, took a hit on the stock market for announcing even bigger AI infrastructure investments on top of already massive budgets.

In the interview, Huang repeats what we keep hearing from the AI industry: more compute automatically means more revenue ("If they could have twice as much compute, the revenues would go up four times as much"). As a chip supplier, Nvidia profits directly from people buying into that equation.

And there's some truth to the basic narrative. Demand for AI compute is real, the models are getting better, revenue at the major AI providers is actually growing, and progress in agentic AI is impressive. "Anthropic is making great money. OpenAI is making great money," Huang says, without mentioning what it all costs.

But Huang stretches these observations past the breaking point. For example, he calls the current AI expansion the "largest infrastructure build out in human history," as if electrification, railroads, highway systems, or the global buildout of fossil fuel infrastructure never happened.

Huang's own hallucination: claiming AI models no longer hallucinate

This is most obvious at one point in particular. Huang literally says: "AI became super useful, no longer hallucinating." In other words, language models no longer generate false information. That's just not true.

You could give Huang the benefit of the doubt and assume he misspoke and meant "significantly less" or "rarely enough that it's barely noticeable." That's probably what he was going for.

But this distinction is anything but trivial. Hallucinations aren't a bug you can patch away. They're a byproduct of the probability-based architecture language models run on. This is exactly what makes companies slow to adopt AI: unreliable outputs, the need for human oversight, and unresolved security issues.

Huang's false claim matters. Reliability is still the single biggest unsolved problem in generative AI, and even OpenAI has admitted that hallucinations will likely never go away entirely. If these systems could simply flag, reliably, how confident they are in their own output, that alone would be transformative. They can't do that yet either. Whether people care about that, and what the fallout looks like, is a separate question.

If hallucinations, and with them the reliability problem, were actually solved, the "human in the loop" would be mostly unnecessary. Legal advice could be fully automated, AI-generated code could ship straight to production, medical diagnoses could happen without a doctor, and AI systems could improve themselves, since errors would no longer compound. We would live in a different world.

It's no coincidence that a wave of new AI startups is popping up right now, hunting for new architectures because they no longer trust current models to deliver fundamental improvements.

That the CEO of the most important AI chip company can go on CNBC and claim AI no longer makes mistakes, and nobody calls him on it, is perhaps the clearest sign yet of how far the AI hype cycle has drifted from reality.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.