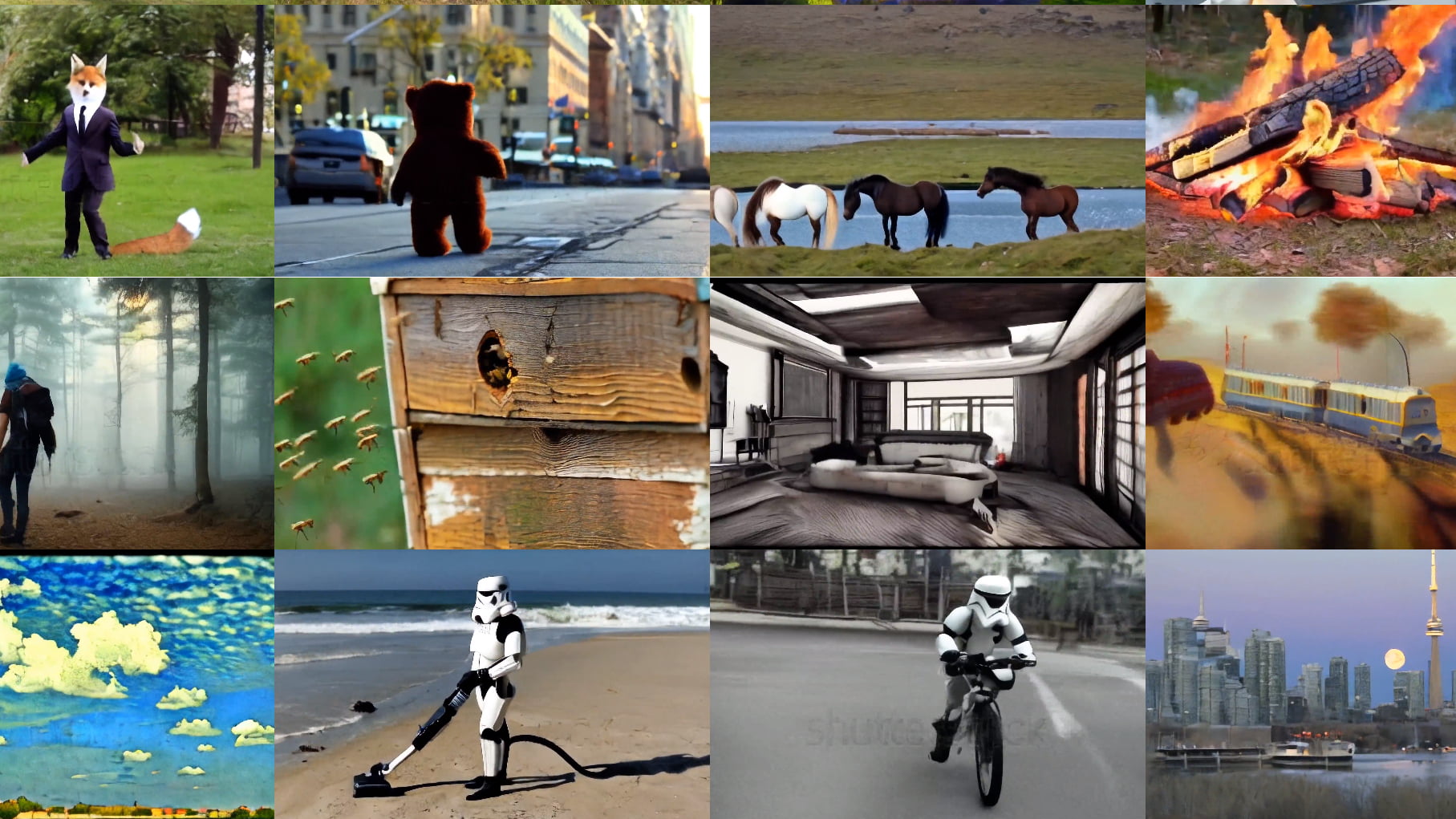

Nvidia turns Stable Diffusion into a text-to-video model, generates high-resolution video, and shows how the model can be personalized.

Nvidia's generative AI model is based on diffusion models and adds a temporal dimension that enables temporally aligned image synthesis across multiple frames. The team trains a video model to generate several minutes of video of car rides at a resolution of 512 x 1,024 pixels, achieving state-of-the-art results in most benchmarks.

In addition to this demonstration, which is particularly relevant to autonomous driving research, the researchers show how an existing Stable Diffusion model can be transformed into a video model.

Video: Nvidia

Nvidia team turns Stable Diffusion into a text-to-video model

To do this, the team first fine-tunes Stable Diffusion on video data for a short time. It then inserts additional temporal layers after each existing spatial layer in the network and trains them on the video data as well. In addition, the team trains temporally stable upscalers to generate videos at a resolution of 1,280 x 2,048 pixels from text prompts.
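The interleaving of spatial and temporal layers can be illustrated with a toy sketch in plain Python. It is not Nvidia's implementation: `spatial_layer`, `temporal_layer`, `video_block`, and the blend factor `alpha` are hypothetical names chosen for illustration, and the neighbor-averaging rule is a stand-in for whatever the real temporal layers learn. The point is only that a spatial layer sees each frame in isolation, while a temporal layer placed after it lets frames exchange information.

```python
from typing import List

Frame = List[float]   # one frame, flattened to a list of pixel values
Video = List[Frame]   # a clip is an ordered list of frames


def spatial_layer(frame: Frame) -> Frame:
    """Stand-in for a pretrained image layer: processes one frame at a time."""
    return [2.0 * p for p in frame]


def temporal_layer(video: Video, alpha: float = 0.5) -> Video:
    """Hypothetical temporal layer: mixes each pixel with its neighbors in time.

    alpha is an assumption of this sketch. With alpha = 1.0 the input passes
    through unchanged, so the block behaves like the per-frame image model.
    """
    out: Video = []
    last = len(video) - 1
    for t, frame in enumerate(video):
        prev_f = video[max(t - 1, 0)]      # clamp at the first frame
        next_f = video[min(t + 1, last)]   # clamp at the last frame
        mixed = [(a + b + c) / 3.0 for a, b, c in zip(prev_f, frame, next_f)]
        out.append([alpha * x + (1.0 - alpha) * m for x, m in zip(frame, mixed)])
    return out


def video_block(video: Video, alpha: float = 0.5) -> Video:
    """One spatial layer followed by one temporal layer."""
    per_frame = [spatial_layer(f) for f in video]   # frames handled independently
    return temporal_layer(per_frame, alpha)         # frames exchange information


# A clip with one bright frame between two dark ones, i.e. a flicker.
clip = [[0.0, 0.0], [3.0, 3.0], [0.0, 0.0]]
print(video_block(clip, alpha=1.0))   # spatial result only, flicker remains
print(video_block(clip, alpha=0.0))   # fully mixed over time, flicker smoothed
```

In this toy setup, the per-frame path alone keeps the flicker, while the temporal path spreads information across neighboring frames, which is the kind of frame-to-frame consistency the added layers are meant to provide.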

Video: Nvidia

With Stable Diffusion as the basis for the video model, the team does not need to train a new model from scratch and can benefit from existing capabilities and methods. For example, although the WebVid-10M dataset used for training contains only real-world videos, the model can also generate art videos thanks to the underlying Stable Diffusion model. All videos are between 3.8 and 4.7 seconds long, depending on the frame rate.
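The dependence of clip length on frame rate is simple arithmetic: a fixed number of frames played back faster yields a shorter clip. The sketch below assumes 113 frames per clip and playback at 24 or 30 frames per second. Neither figure is stated in this article, and `clip_seconds` is a hypothetical helper; the values are chosen because they reproduce the 3.8 to 4.7 second range.

```python
def clip_seconds(num_frames: int, fps: float) -> float:
    """Playback length in seconds of a clip with a fixed number of frames."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


NUM_FRAMES = 113  # assumption: frame count is not given in this article

for fps in (24, 30):  # assumption: common playback rates
    print(f"{fps} fps -> {clip_seconds(NUM_FRAMES, fps):.1f} s")
```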

Video Stable Diffusion can be customized with DreamBooth

The Nvidia team shows that DreamBooth also works with the video-specific Stable Diffusion model, generating videos with objects that were not part of the original training data. This opens up new possibilities for content creators, who could use DreamBooth to personalize their video content.

Video: Nvidia

The team inserted a cat into the model via DreamBooth. | Video: Nvidia

There are more examples on the Nvidia Video LDM project page. The model is not publicly available, but one of the paper's authors is Robin Rombach, one of the people behind Stable Diffusion, who works at Stability AI. So an open-source implementation may follow soon.