Nvidia unveiled its new H200 AI accelerator at the SC23 supercomputing conference. Nvidia says its faster memory almost doubles the inference speed of AI models.

Nvidia's H200 is the first GPU to use fast HBM3e memory. Its predecessor, the H100, has 80 gigabytes of HBM3 and 3.35 terabytes per second of bandwidth. The H200 offers 141 gigabytes of HBM3e and 4.8 terabytes per second. Nvidia says that compared with the A100, this is nearly double the capacity and 2.4 times the bandwidth.
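To make the generational comparison concrete, the short sketch below computes the H200's capacity and bandwidth ratios against the H100 and A100. It assumes the SXM figures Nvidia publishes: 80 GB and 3.35 TB/s for the H100, and 80 GB and roughly 2.0 TB/s for the A100. The A100 numbers do not appear in this article and are added for illustration only.

```python
# Memory specs used for the comparison (SXM variants).
# H200 and H100 figures follow Nvidia's published specs; the A100 values
# (80 GB, ~2.0 TB/s) are an assumption added for this sketch.
SPECS = {
    "A100": {"capacity_gb": 80, "bandwidth_tbs": 2.0},
    "H100": {"capacity_gb": 80, "bandwidth_tbs": 3.35},
    "H200": {"capacity_gb": 141, "bandwidth_tbs": 4.8},
}


def ratio(new: str, old: str, key: str) -> float:
    """Return how many times larger GPU `new` is than GPU `old` for one spec."""
    return SPECS[new][key] / SPECS[old][key]


if __name__ == "__main__":
    for baseline in ("H100", "A100"):
        cap = ratio("H200", baseline, "capacity_gb")
        bw = ratio("H200", baseline, "bandwidth_tbs")
        print(f"H200 vs {baseline}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

Under these assumptions, the H200 offers about 1.76x the capacity of either predecessor. It has about 1.43x the H100's bandwidth and about 2.4x the A100's. Memory bandwidth alone therefore does not account for the near-doubling in inference speed, which is consistent with Nvidia crediting "other optimizations" as well.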

According to Nvidia, the faster memory and other optimizations show up most clearly in inference. For example, the 70-billion-parameter version of Meta's Llama 2 runs almost twice as fast on the H200 as on the H100. Nvidia also says the GPU is better suited to scientific HPC applications and expects further gains from software optimizations.

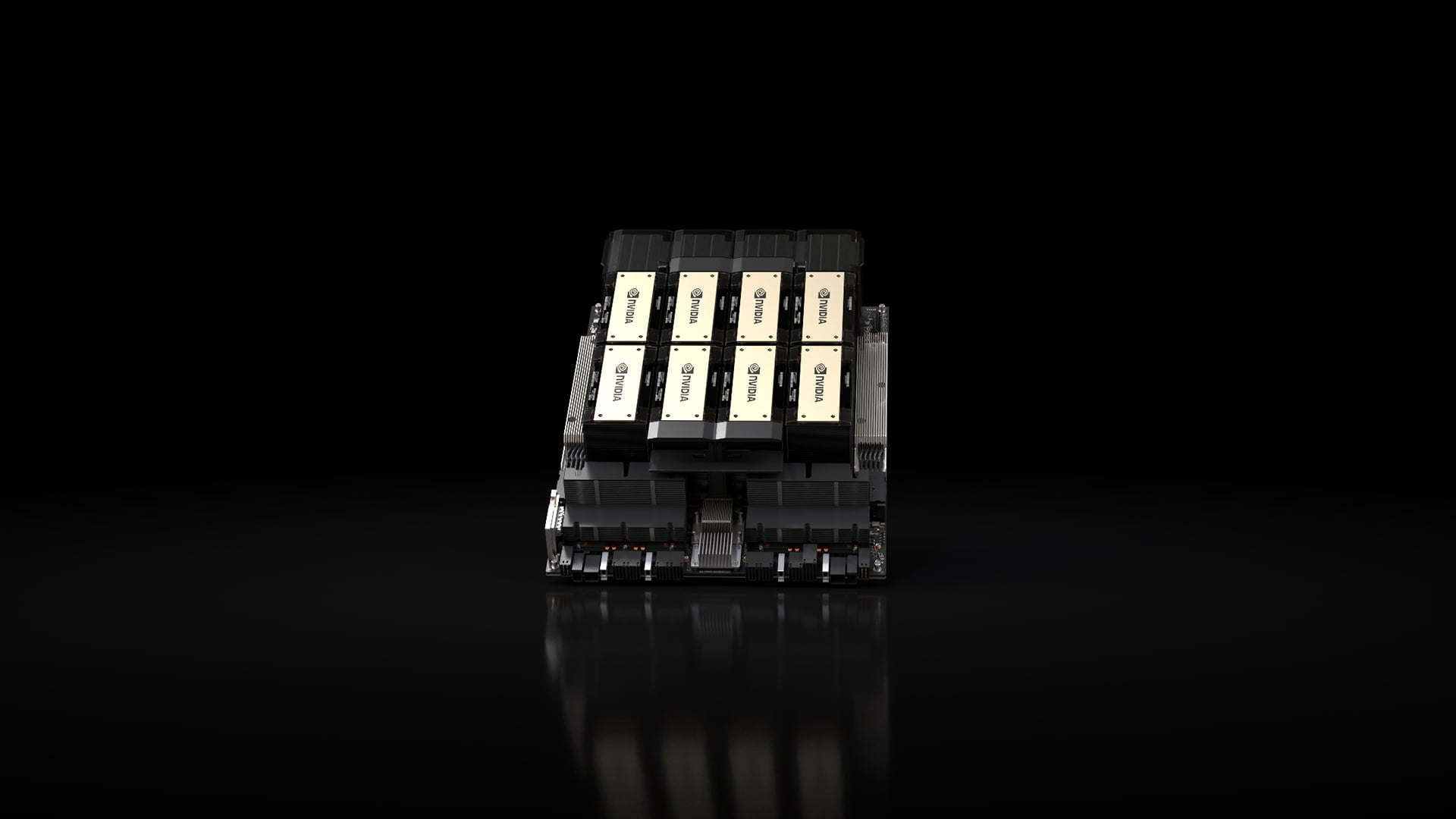

Systems and cloud instances with H200 are expected to be available from the second quarter of 2024, including HGX H200 systems. The H200 can be deployed in multiple data center environments, including on-premises, cloud, hybrid cloud, and edge.

Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to offer H200-based instances starting next year.

Nvidia's H200 is the GPU in the GH200 with 144 gigabytes of HBM3e

According to Nvidia, the H200 GPU will also be available from 2024 in a 144-gigabyte version of the GH200 Grace Hopper Superchip. The GH200 connects Nvidia's GPUs directly to its Grace CPUs. In the recently released MLPerf Inference v3.1 benchmark, the current GH200 variant, with lower bandwidth and 96 gigabytes of HBM3 memory, was up to 17 percent faster than the H100.

This version will be replaced by the HBM3e version in 2024. The company said GH200 chips will be used in more than 40 supercomputers worldwide. These include systems at the Jülich Supercomputing Centre (JSC) in Germany and the Joint Center for Advanced High Performance Computing in Japan.

JUPITER supercomputer uses nearly 24,000 GH200 chips

JSC will also operate the JUPITER supercomputer, which is based on the GH200 architecture and is designed to accelerate AI models in areas such as climate and weather research, materials science, pharmaceutical research, industrial engineering, and quantum computing.

JUPITER is the first system to use nodes that each contain four Nvidia GH200 Grace Hopper Superchips.

In total, nearly 24,000 GH200 chips will be installed. Nvidia says this will make JUPITER the fastest AI supercomputer in the world. JUPITER is due to be installed in 2024 and is one of the supercomputers being built under the EuroHPC Joint Undertaking.

There was also news at SC23 about Nvidia's recently unveiled Eos supercomputer. German chemical company BASF plans to use Eos to run 50-qubit simulations on Nvidia's CUDA Quantum platform.

The aim is to study the properties of nitrilotriacetic acid (NTA), a compound used to remove toxic metals from municipal wastewater.