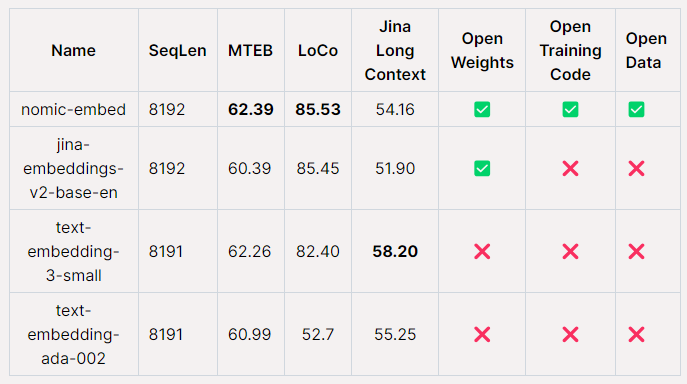

Nomic AI has released Nomic Embed, an open-source embedding model that outperforms OpenAI's text-embedding-ada-002 and text-embedding-3-small models on both short- and long-context tasks. The model is fully reproducible and auditable, and it supports a context length of 8192 tokens. Nomic Embed beat both OpenAI models on the Massive Text Embedding Benchmark (MTEB) and the LoCo Benchmark, but fell short on the Jina Long Context Benchmark. The model weights and full training data are published for "complete model auditability". Nomic Embed is also available through the Nomic Atlas Embedding API, which includes one million free tokens for production workloads, and through the Nomic Atlas Enterprise offering.
