OpenAI releases improved GPT-4 model for ChatGPT and via API

Update from April 12, 2024:

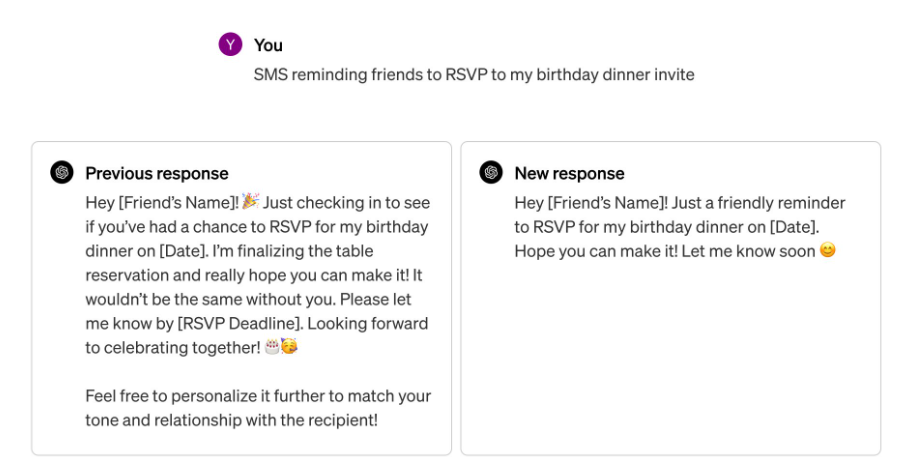

The updated GPT-4 is now available in ChatGPT. The model is expected to be better mainly at math and coding, and also less verbose.

The benchmarks show this as follows:

- MATH (Measuring Mathematical Problem-Solving With the MATH Dataset): +8.9%, measures the ability to solve mathematical problems.

- GPQA (A Graduate-Level Google-Proof Q&A Benchmark): +7.9%, measures the ability to answer challenging questions that cannot be answered by simple Google searches.

- MGSM (Multilingual Grade School Math Benchmark): +4.5%, measures the ability to solve elementary school level math problems in multiple languages.

- DROP (A Reading Comprehension Benchmark Requiring Discrete Reasoning Over Paragraphs): +4.5%, measures reading comprehension and the ability to draw discrete conclusions from paragraphs.

- HumanEval (Evaluating Large Language Models Trained on Code): +1.6%, measures the ability to generate functionally correct code for hand-written programming problems, verified with unit tests.

- MMLU (Measuring Massive Multitask Language Understanding): +1.3%, measures the understanding and ability to solve tasks from various domains.

Original article from April 9, 2024:

OpenAI has announced improvements to the GPT-4 Turbo model in its API, which will soon be available in ChatGPT.

The new multimodal GPT-4 Turbo model (2024-04-09, cut-off month December 2023) with image processing is now generally available in the API.

The model is "more intelligent and multimodal", according to OpenAI, and can analyze both text and images and draw conclusions with just one API call. Previously, developers had to use separate models for this.

Vision requests now also support common API features such as JSON mode and function calling, which should make it easier to integrate the model into applications and developer workflows.
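To make the "one API call" point concrete, here is a minimal sketch of what a combined text-and-image request with JSON mode might look like. It only builds the request body and does not contact any server. The model identifier `gpt-4-turbo-2024-04-09`, the helper name `build_vision_request`, and the example image URL are assumptions for illustration and are not taken from the announcement; the field layout follows the Chat Completions message format.

```python
import json

# Assumed model identifier; the article only mentions the date "2024-04-09".
MODEL = "gpt-4-turbo-2024-04-09"


def build_vision_request(question: str, image_url: str) -> dict:
    """Build a Chat Completions request body that mixes text and an image.

    Hypothetical helper for illustration; it returns a plain dict and
    performs no network access.
    """
    return {
        "model": MODEL,
        # JSON mode: ask the model to return a valid JSON object.
        "response_format": {"type": "json_object"},
        "messages": [
            # JSON mode expects the word "JSON" to appear in the messages.
            {"role": "system", "content": "Reply with a JSON object."},
            {
                "role": "user",
                # Text and image travel together in one message.
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            },
        ],
    }


if __name__ == "__main__":
    body = build_vision_request(
        "Which UI elements are in this sketch?",
        "https://example.com/sketch.png",  # placeholder URL
    )
    print(json.dumps(body, indent=2))
```

The resulting dictionary could be sent as the JSON body of a single chat completion request, so no separate vision model needs to be called first.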

OpenAI demonstrates the capabilities of the new model with use cases such as tldraw, which writes code based on an interface drawing.

Video: Roberto Nickson
