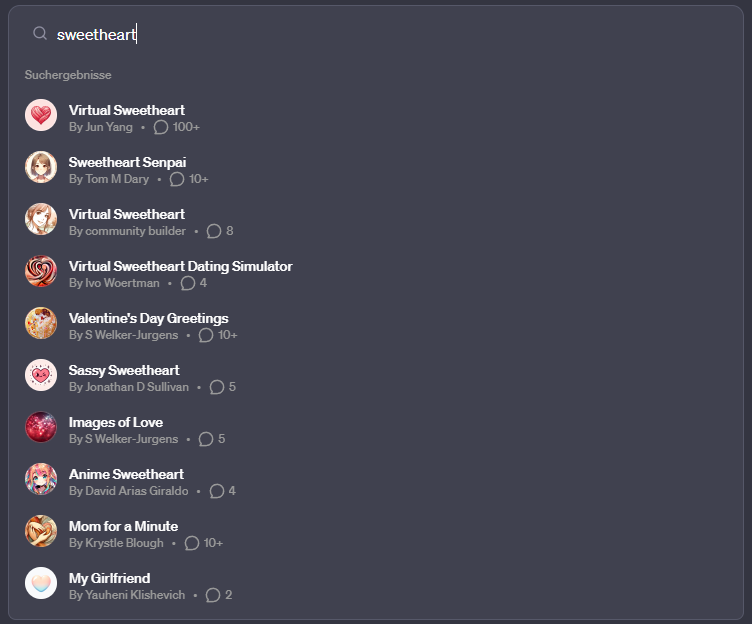

OpenAI's GPT store, which offers customized versions of ChatGPT, is already facing problems with users breaking the rules by creating "girlfriend" AI chatbots. Bots such as "Korean Girlfriend," "Virtual Sweetheart," and "Your AI Girlfriend, Tsu✨" violate OpenAI's usage policy, which prohibits GPTs "dedicated to fostering romantic companionship or performing regulated activities." OpenAI uses a combination of automated systems, human review, and user reports to find and evaluate GPTs that may violate its policies. Virtual companions aren't new (see character.ai) and aren't a bad thing per se. But as Quartz points out, it took users only two days to break these rules. I wonder what this means for OpenAI's promise to block GPTs from being used for election propaganda.

