ChatGPT inspires many people but also irritates some, for example with politically controversial statements. OpenAI explains how its conversational AI could cover a wider range of perspectives and respond better to individual users.

To reduce bias and misinformation, ChatGPT is fine-tuned with human feedback. For future versions of ChatGPT, and for AI models in general, OpenAI also wants to improve the default behavior. Apart from further investment in research and development, the company does not name any concrete measures.

Whether and to what extent it is possible to rid large language models of biases or misstatements, or to prevent them from learning them in the first place, is a matter of debate in the research community. OpenAI admits that there is still "room for improvement" when it comes to both misstatements and biases.

"In many cases, we think that the concerns raised have been valid and have uncovered real limitations of our systems which we want to address. We’ve also seen a few misconceptions about how our systems and policies work together to shape the outputs you get from ChatGPT," OpenAI writes.

OpenAI to offer ChatGPT models with customized behavior

One way to combat bias is not to get rid of it, but to make a model more open to different perspectives. To that end, OpenAI is planning an upgrade to ChatGPT that will allow users to customize the behavior of the AI model to their needs within "limits defined by society."

"This will mean allowing system outputs that other people (ourselves included) may strongly disagree with," OpenAI writes.

The company leaves open when the upgrade will roll out and how it will be implemented technically. The challenge, it says, is defining these societal limits and, by extension, the limits of personalization.

"If we try to make all of these determinations on our own, or if we try to develop a single, monolithic AI system, we will be failing in the commitment we make in our Charter to 'avoid undue concentration of power'," OpenAI writes.

OpenAI hopes to solve this dilemma by involving the public more in ChatGPT's alignment process. To that end, the company says it is launching a pilot project to gather external feedback on system behavior and on use cases such as AI in education. In addition, OpenAI is considering partnerships with external organizations to review its AI safety and behavior policies.

How OpenAI's ChatGPT is trained

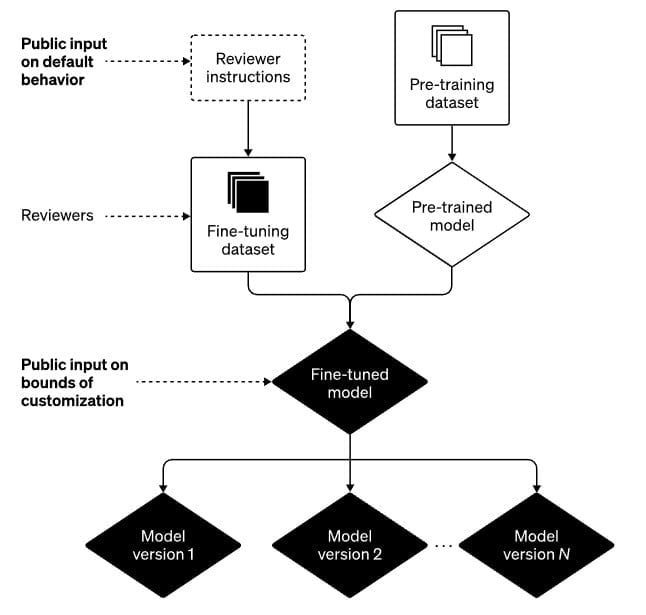

ChatGPT is trained in two steps. First, the model is pre-trained on large amounts of text. During this training, the model learns to predict likely word sequences. In the sentence "Don't go right, go ...", the word "left" follows with a certain probability, but so do "straight" and "back", which is part of the problem with large language models.
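To make the pre-training objective more concrete, here is a minimal Python sketch of picking the next word from a probability distribution. The candidate words come from the example sentence, but the probabilities and the helper names `most_likely_next` and `sample_next` are hypothetical; a real model computes such a distribution over its entire vocabulary from learned parameters.

```python
import random

# Hypothetical, hand-picked probabilities for the word that follows
# "Don't go right, go ...". A real language model computes a distribution
# like this over its whole vocabulary; these numbers are made up.
NEXT_WORD_PROBS = {
    "left": 0.6,
    "straight": 0.25,
    "back": 0.15,
}


def most_likely_next(probs: dict[str, float]) -> str:
    """Greedy decoding: return the single highest-probability word."""
    return max(probs, key=probs.get)


def sample_next(probs: dict[str, float], rng: random.Random) -> str:
    """Sampling: draw one word in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    print(most_likely_next(NEXT_WORD_PROBS))  # left
    rng = random.Random(0)  # fixed seed so the draws are reproducible
    print([sample_next(NEXT_WORD_PROBS, rng) for _ in range(5)])
```

Because the word is sampled rather than always taken from the top of the list, the same prompt can yield "left" one time and "back" another, which is one reason a chatbot can give different answers to the same question.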

According to OpenAI, in this first training step ChatGPT learns grammar, many facts about the world, and some reasoning skills, but also the biases in the data that lead to the issues described above.

The second step is to fine-tune the pre-trained model. For this, OpenAI uses a narrower dataset of selected text examples that human reviewers have evaluated. The model then generalizes from this human feedback to respond to the wide range of inputs users send it.

Bias is a bug, not a feature

OpenAI gives the human reviewers guidelines for their evaluations; for example, ChatGPT should not fulfill requests for illegal content or take sides on controversial topics.

According to OpenAI, this is an ongoing process, with the guidelines discussed with reviewers in weekly meetings. OpenAI has published a sample of its reviewer guidelines showing how certain requests should be handled.

"Our guidelines are explicit that reviewers should not favor any political group. Biases that nevertheless may emerge from the process described above are bugs, not features," OpenAI writes.

But mistakes will continue to happen, the company says. OpenAI wants to learn from them and improve its models and systems.

"We appreciate the vigilance of the ChatGPT user community and the public at large in holding us accountable," OpenAI writes.

Previous research has indicated that ChatGPT leaned, and may still lean, politically left, at least at times. Because OpenAI keeps feeding new feedback into the system, and because the model can give different answers to the same question, any measured political bias is a moving target.