Is OpenChatKit the Stable Diffusion of chat models? Not quite yet, but it probably won't be long.

The open-source community Together has released OpenChatKit, the first open-source alternative to ChatGPT. The chatbot is based on EleutherAI's 20-billion-parameter language model GPT-NeoX and has been fine-tuned on 43 million instructions for chat use. On the industry-standard HELM benchmark, the chat model outperforms the base model.

OpenChatKit comes with a toolkit

The kit is available to developers for free on GitHub under the Apache 2.0 license. In addition to the specialized language model GPT-NeoXT-Chat-Base-20B, it includes the following components:

- Customization recipes to fine-tune the model to achieve high accuracy on your tasks.
- An extensible retrieval system enabling you to augment bot responses with information from a document repository, API, or other live-updating information source at inference time.
- A moderation model, fine-tuned from GPT-JT-6B, designed to filter which questions the bot responds to.
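To illustrate the general idea behind retrieval augmentation, here is a minimal Python sketch: before the model answers, the most relevant snippet is pulled from a document store and placed into the prompt. This is not OpenChatKit's actual retrieval code. The toy keyword-overlap retriever, the `DOCUMENTS` list, and the helper names are hypothetical, and the `<human>:`/`<bot>:` turn markers are an assumption about the chat model's prompt format.

```python
import re
from collections import Counter

# Hypothetical in-memory "document repository". A real system would query
# a search index, an API, or another live-updating source instead.
DOCUMENTS = [
    "OpenChatKit is released under the Apache 2.0 license.",
    "GPT-NeoX-20B has 20 billion parameters.",
    "The moderation model is fine-tuned from GPT-JT-6B.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most word tokens with the query.

    Toy keyword-overlap scoring, used here only as a stand-in for a real retriever.
    """
    query_counts = Counter(tokenize(query))

    def score(doc: str) -> int:
        # Size of the multiset intersection between query and document tokens.
        return sum((query_counts & Counter(tokenize(doc))).values())

    return sorted(docs, key=score, reverse=True)[:k]


def build_prompt(question: str, docs: list[str]) -> str:
    """Prepend the retrieved context to the question (assumed turn markers)."""
    context = "\n".join(retrieve(question, docs))
    return f"Context:\n{context}\n\n<human>: {question}\n<bot>:"


if __name__ == "__main__":
    print(build_prompt("How many parameters does GPT-NeoX-20B have?", DOCUMENTS))
```

In this sketch, the question about GPT-NeoX-20B pulls in the matching sentence from the store, so the model can answer from supplied context instead of relying only on what it memorized during training.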

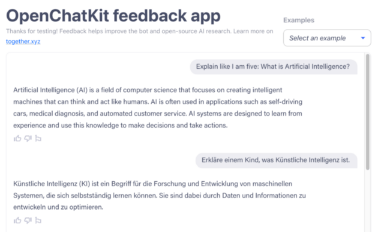

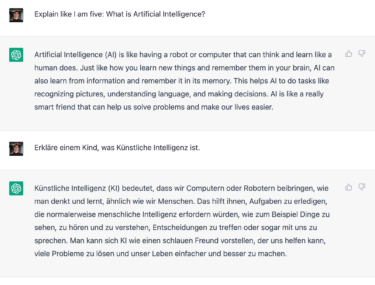

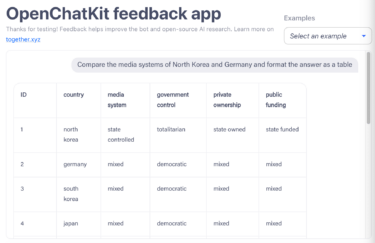

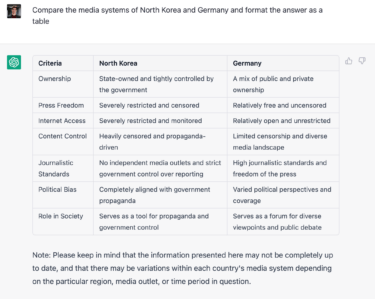

The kit also has built-in tools that let users give feedback on the chatbot's responses and add new datasets.

OpenChatKit has only limited capabilities

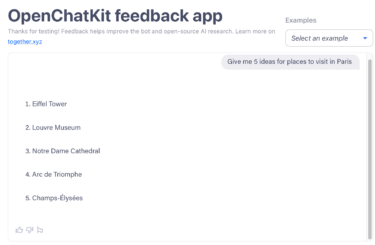

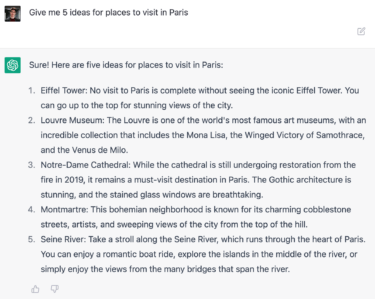

Developers say OpenChatKit's strengths lie in tasks such as summarizing and answering questions with context, extracting information, and classifying text.

However, it is less convincing at answering questions without context, coding, and creative writing, the very tasks that helped make ChatGPT so popular. OpenAI's chatbot, too, regularly hallucinates. OpenChatKit also struggles when the subject changes in the middle of a conversation and sometimes repeats answers.

OpenChatKit performed much better after being fine-tuned for specific use cases. Together is working on its own chatbots for learning, financial advice, and support requests.

In a brief test, OpenChatKit was less eloquent than ChatGPT, partly because its responses are capped at 256 tokens rather than around 500. Its replies are much shorter, but it generates them much faster. Switching between languages does not seem to cause the bot any problems, and it can also format output as a list or table.

Together also relies on user feedback to further improve OpenChatKit.

Is decentralized training of AI models the future?

Whatever becomes of OpenChatKit itself, its training process may well be the future of large-scale open-source projects: as with GPT-JT, the developers took a decentralized approach, spreading the required computing power across many computers instead of relying on a central data center.

While OpenChatKit is the first product in the open-source world to emulate ChatGPT, it certainly won't be the only one. With Meta's LLaMA models leaked earlier this month (the largest has roughly three times as many parameters as GPT-NeoX-20B), it should only be a matter of time before a chatbot based on them appears.

You can try OpenChatKit for free on Hugging Face.