A study underscores what observers of deepfake technology probably thought anyway: AI-generated photos are almost indistinguishable from real photos. But there's more to it than that.

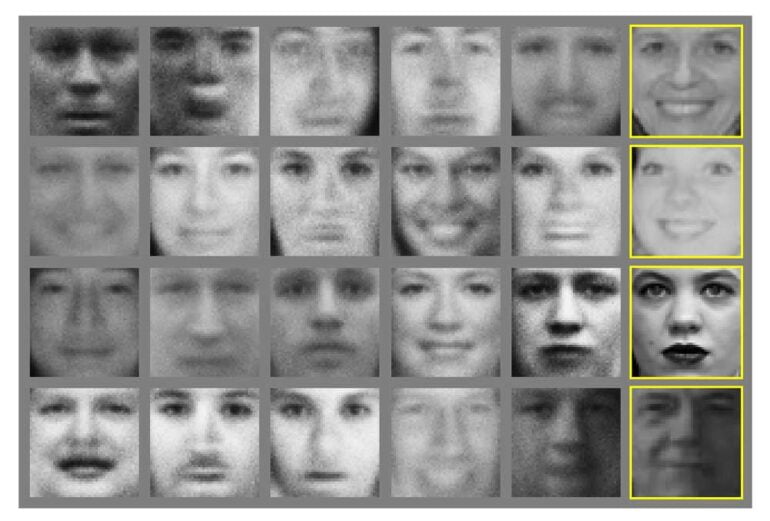

When Ian Goodfellow and his colleagues first showed fake human photographs created with GAN AI technology in 2014, they were still blurry black-and-white images (History of Deepfakes).

Even then, these photos hinted at the potential of generative AI, a potential that, at least for portrait photos, may have been largely fulfilled since last year. Generative systems are now so refined that they hardly produce any of the typical image errors that once made fake photos easy to recognize. A new study supports this observation.

Human vs. Deepfake: barely better than chance

Professor Hany Farid of the University of California, Berkeley, and Dr. Sophie Nightingale of Lancaster University have now examined whether humans can still distinguish real photos of people from fakes generated by AI.

To do this, they used Nvidia's StyleGAN2, a recent but not the latest deepfake technology: StyleGAN3 is even more powerful, as it eliminates more of the flaws typical of AI-generated images.

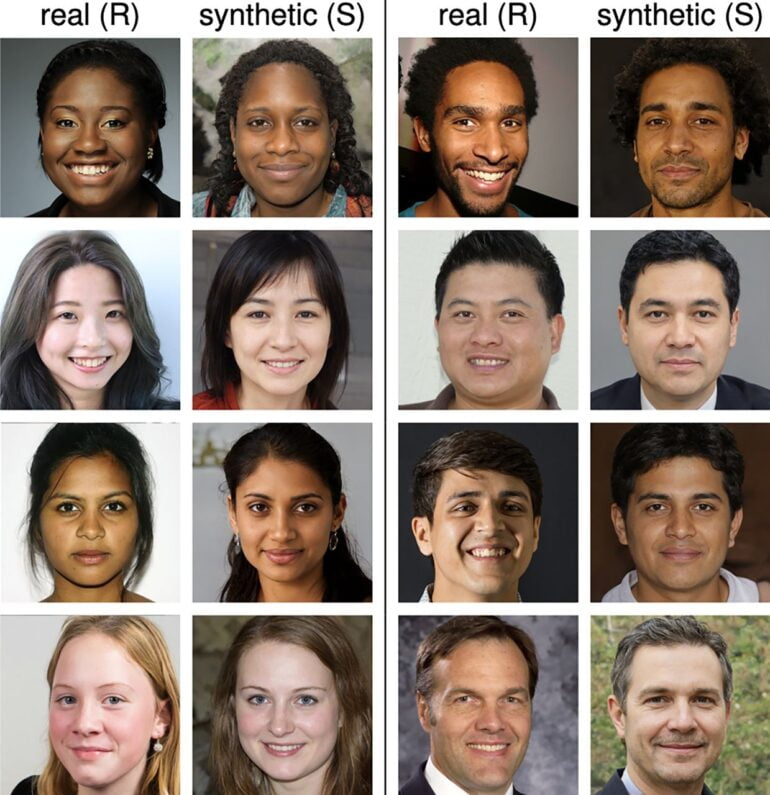

The researchers selected 400 faces generated by artificial intelligence, paying attention to diversity in origin, gender and age. They also favored images with a largely uniform background to further reduce extraneous influences. For each AI-generated face, they then selected a matching real counterpart from the dataset on which StyleGAN2 was trained.

With this selection of 800 images in total, they ran three experiments. In the first, 315 participants classified 128 photos, each either real or generated, as real or fake. Their accuracy was about 48 percent, which corresponds to chance.

In a second experiment, participants were first told which features they could use to identify AI-generated images. 219 participants again examined 128 images, but despite this training, they achieved only 59 percent accuracy in deepfake detection. That is only slightly better than chance.
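To make "chance" concrete for a task with 128 two-way decisions, here is a minimal Python sketch. It is not the study's analysis: it assumes a single hypothetical participant whose score equals the reported group accuracy rounded to whole images, and it asks how likely such a score would be from pure guessing. The function names are illustrative.

```python
from math import comb


def binomial_pmf(n: int, k: int, p: float = 0.5) -> float:
    """Probability of exactly k correct answers out of n, each right with probability p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def chance_tail(n: int, k: int, p: float = 0.5) -> float:
    """Probability of k or more correct answers out of n under pure guessing."""
    return sum(binomial_pmf(n, i, p) for i in range(k, n + 1))


N_IMAGES = 128  # images judged per participant, as reported in the article

# Assumption for illustration only: one hypothetical participant scoring
# exactly the reported group accuracy (48 or 59 percent), rounded to images.
for label, accuracy in [("untrained", 0.48), ("trained", 0.59)]:
    correct = round(accuracy * N_IMAGES)
    tail = chance_tail(N_IMAGES, correct)
    print(f"{label}: {correct}/{N_IMAGES} correct, "
          f"P(at least this many by guessing) = {tail:.3f}")
```

Under these assumptions, a score of 61 out of 128 is entirely consistent with guessing. A score of 76 out of 128 would be unlikely from guessing alone, yet it still means that roughly four in ten images are misjudged.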

Deepfake faces are more trustworthy

The researchers had another question, which they tested in a third experiment. According to them, people draw implicit conclusions about individual traits such as trustworthiness from faces within milliseconds. The researchers wanted to know whether people also draw these inferences from AI-generated human faces.

In the third experiment, participants were therefore asked to rate the trustworthiness of 128 real and synthetic faces. They rated the deepfake portraits as 7.7 percent more trustworthy than the real faces.

The researchers do not assume that this more positive attribution has anything to do with the selection of the photos themselves. Although smiling people or women, for example, are generally rated as more trustworthy, the various facial expressions and faces were evenly distributed across the real and synthetic photos.

The real reason for the higher trust in deepfake faces could be their averageness, according to the researchers. The AI creates the fake faces by combining features learned from the faces of a great many people during training into a new synthetic face.

The researchers call on the graphics and imaging community to create guidelines for the creation and dissemination of deepfakes that can guide academia, publishers, and media companies.