Pixel Transformers: Researchers show that AI models learn more from raw pixels

Researchers have shown that transformer models do not need to divide images into blocks but can be trained directly on individual pixels, a finding that challenges common practice in computer vision.

A team of researchers from the University of Amsterdam and Meta AI has introduced a new approach that questions the necessity of local relationships in AI models for computer vision. The researchers trained transformer models directly on individual image pixels instead of pixel blocks and achieved surprisingly good results.

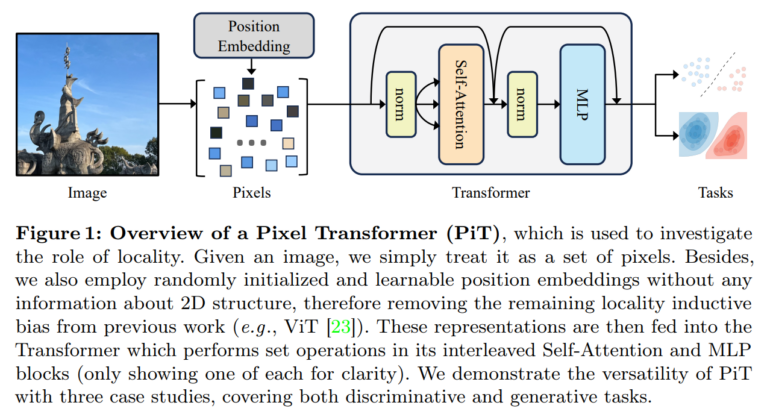

Typically, transformer models like the Vision Transformer (ViT) are trained on blocks (patches) of, for example, 16 by 16 pixels. This builds in the assumption that neighboring pixels are more strongly related than distant ones. This principle of locality is widely considered a fundamental prerequisite for image processing by AI and is also built into ConvNets.

The researchers wanted to find out whether this locality assumption could be removed entirely. To do this, they developed the "Pixel Transformer" (PiT), which treats each pixel as an individual token and makes no assumptions about spatial relationships. Instead, the model has to learn these relationships from the data on its own.
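The difference between the two tokenizations can be illustrated with a minimal NumPy sketch. It is not the researchers' code: the function names `patch_tokens` and `pixel_tokens` and the 32 by 32 toy image are assumptions made for illustration, and the sketch covers only how an image is cut into tokens, not the transformer itself.

```python
import numpy as np


def patch_tokens(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patch tokens (ViT-style)."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must divide evenly into patches"
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C) -> (num_patches, p*p*C)
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape(-1, patch * patch * c)


def pixel_tokens(image: np.ndarray) -> np.ndarray:
    """Treat every pixel as its own token (PiT-style): one row per pixel."""
    h, w, c = image.shape
    return image.reshape(h * w, c)


# Hypothetical 32x32 RGB image, used only to compare token counts.
image = np.arange(32 * 32 * 3, dtype=np.float32).reshape(32, 32, 3)

vit_tokens = patch_tokens(image, patch=16)  # 4 tokens, each holding 16*16*3 values
pit_tokens = pixel_tokens(image)            # 1024 tokens, each holding 3 values

print(vit_tokens.shape, pit_tokens.shape)
```

In this sketch, pixel tokenization is simply the limiting case of patch size 1: the grouping of neighboring pixels into one token disappears, and the sequence becomes much longer.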

Pixel Transformer outperforms classic Vision Transformer

To test the performance of PiT, the scientists conducted experiments in three application areas:

1. Supervised learning for object classification on the CIFAR-100 and ImageNet datasets. Here, PiT was shown to outperform the conventional ViT.

2. Self-supervised learning using Masked Autoencoding (MAE). Here too, PiT performed better than ViT and scaled better with increasing model size.

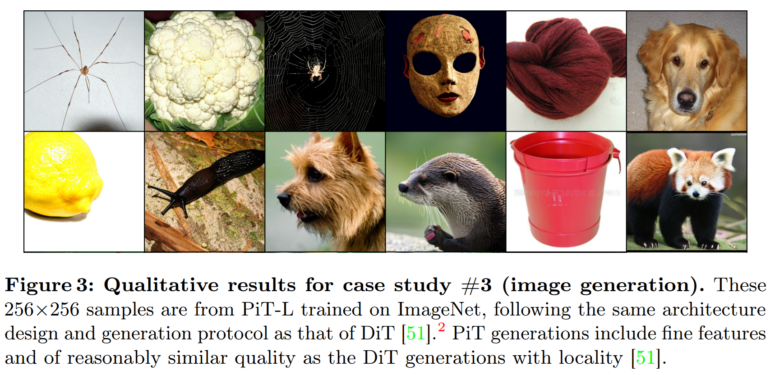

3. Image generation using diffusion models. PiT generated detailed images with a quality comparable to locality-based models.

Pixel Transformer is currently not practical, but could be the future

According to the team, the results suggest overall that transformers can capture more information when they treat images as sets of individual pixels than when they divide them into blocks, as ViT does.

"We believe this work has sent out a clear, unfiltered message that locality is not fundamental, and patchification is simply a useful heuristic that trades-off efficiency vs. accuracy."

From the paper

The researchers emphasize that PiT is currently not practical for real applications because of its higher computational cost, but they say it should support the development of future AI architectures for computer vision.
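A rough back-of-the-envelope calculation shows where the extra cost comes from. Standard self-attention compares every token with every other token, so the number of token pairs grows with the square of the sequence length. The 224 by 224 resolution below is an assumption chosen for illustration, not a figure from the article, and the helper names are hypothetical.

```python
def num_tokens(height: int, width: int, patch: int) -> int:
    """Sequence length when an image is cut into patch x patch tokens."""
    return (height // patch) * (width // patch)


def attention_pairs(n_tokens: int) -> int:
    """Token pairs scored by one full self-attention layer (quadratic in length)."""
    return n_tokens * n_tokens


side = 224  # assumed image resolution, for illustration only

vit_len = num_tokens(side, side, patch=16)  # 16x16 patches
pit_len = num_tokens(side, side, patch=1)   # one token per pixel

ratio = attention_pairs(pit_len) // attention_pairs(vit_len)
print(vit_len, pit_len, ratio)  # 196 50176 65536
```

Under these assumptions, going from 16 by 16 patches to single pixels makes the sequence 256 times longer and the attention computation roughly 65,000 times larger per layer.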
