Qwen3-Coder is Alibaba's most "agentic" coding model to date

Alibaba has launched Qwen3-Coder, its most advanced coding model so far, built to go head-to-head with leading Western AI models for programming tasks.

Qwen3-Coder is the newest member of the Qwen3 family, which Alibaba rolled out in April for general AI applications. The company calls the coding version its most "agentic" model to date, engineered to tackle complex, multi-step development workflows.

The flagship, Qwen3-Coder-480B-A35B-Instruct, uses a mixture-of-experts architecture with 480 billion parameters in total, of which 35 billion are active for any given token. The model natively supports a context window of up to 256,000 tokens, with the option to extend to one million.
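To put the model's headline figures in proportion, here is a minimal sketch that works out the share of active parameters and the size of the context extension. The constants come from the figures above; the function names are made up for this illustration.

```python
# Figures as reported in the article; function names are illustrative only.
TOTAL_PARAMS = 480e9          # total parameters in the mixture-of-experts model
ACTIVE_PARAMS = 35e9          # parameters active at once
NATIVE_CONTEXT = 256_000      # native context window, in tokens
EXTENDED_CONTEXT = 1_000_000  # extended context window, in tokens


def active_fraction(active: float, total: float) -> float:
    """Share of all parameters that are active at once."""
    return active / total


def context_scale(extended: int, native: int) -> float:
    """How many times larger the extended window is than the native one."""
    return extended / native


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
    factor = context_scale(EXTENDED_CONTEXT, NATIVE_CONTEXT)
    print(f"Active share of parameters: {share:.1%}")
    print(f"Context extension factor: {factor:.2f}x")
```

In other words, only about 7.3 percent of the parameters are in use at any one time, and the extended window is a little under four times the native one.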

Trained on 7.5 trillion tokens, 70 percent code

Alibaba trained Qwen3-Coder on a massive 7.5 trillion tokens, with code making up 70 percent of the dataset. To prepare the data, the company used its previous Qwen2.5-Coder model to clean and rewrite the training corpus.
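As a quick back-of-the-envelope check, the reported split can be turned into absolute token counts. This is plain arithmetic on the two figures Alibaba gives, and it assumes the 70 percent refers to tokens rather than to documents or files.

```python
# Reported figures; integer arithmetic avoids floating-point rounding.
TOTAL_TOKENS = 7_500_000_000_000  # 7.5 trillion training tokens
CODE_PERCENT = 70                 # share of the dataset that is code


def split_tokens(total: int, code_percent: int) -> tuple[int, int]:
    """Return (code_tokens, other_tokens) for a given percentage split."""
    code = total * code_percent // 100
    return code, total - code


if __name__ == "__main__":
    code, other = split_tokens(TOTAL_TOKENS, CODE_PERCENT)
    print(f"Code tokens:  {code / 1e12:.2f} trillion")
    print(f"Other tokens: {other / 1e12:.2f} trillion")
```

That works out to 5.25 trillion code tokens and 2.25 trillion tokens of other material.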

For post-training, Alibaba applied long-horizon reinforcement learning, teaching the model to use tools and process feedback through multi-stage interactions with its environment. The company built an infrastructure capable of running 20,000 parallel environments on Alibaba Cloud to support this approach.

Coding model demos that require reasoning about physical laws are a common benchmark—and Qwen3-Coder handles them well, according to Alibaba. | Video: Alibaba

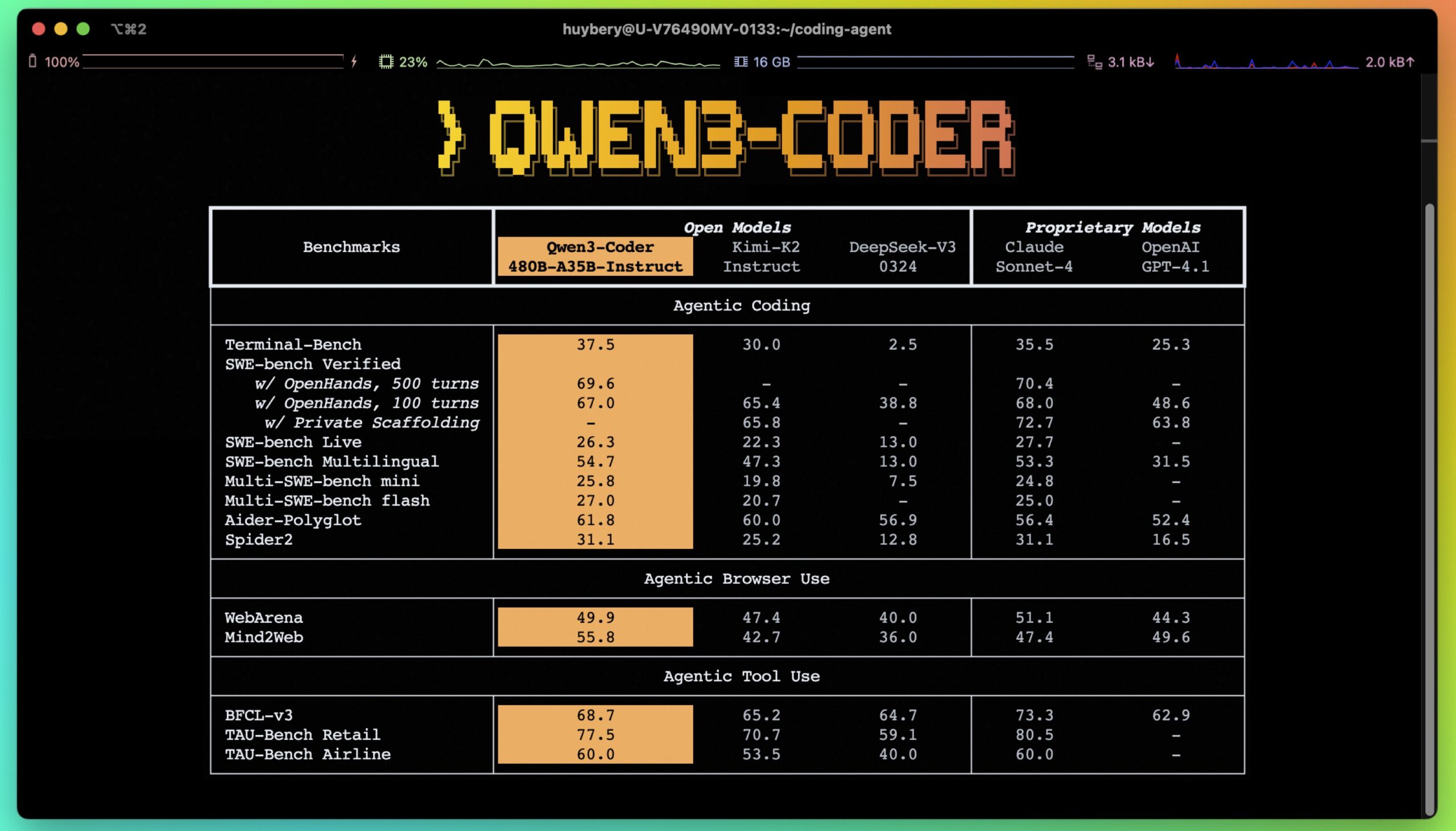

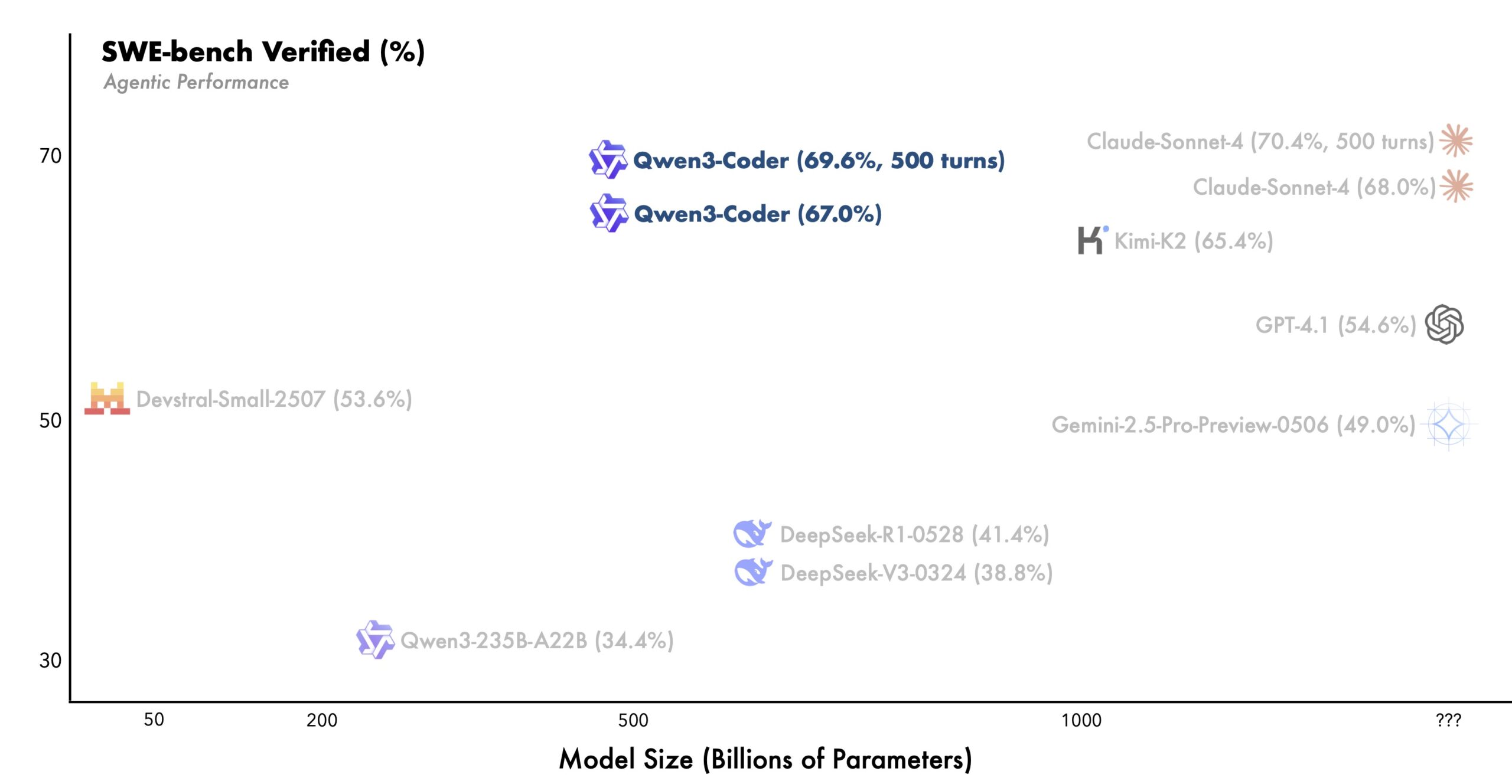

Qwen3-Coder reportedly handles demos that require reasoning about physical laws well, a popular informal test for coding models. According to Alibaba, it ranks among the top open-source models for agent-based coding, browser automation, and tool use, with results comparable to Claude Sonnet 4.

On the SWE-Bench Verified benchmark for software engineering tasks, Qwen3-Coder delivers state-of-the-art performance among open-source models without relying on test-time scaling, that is, without spending additional compute during inference.

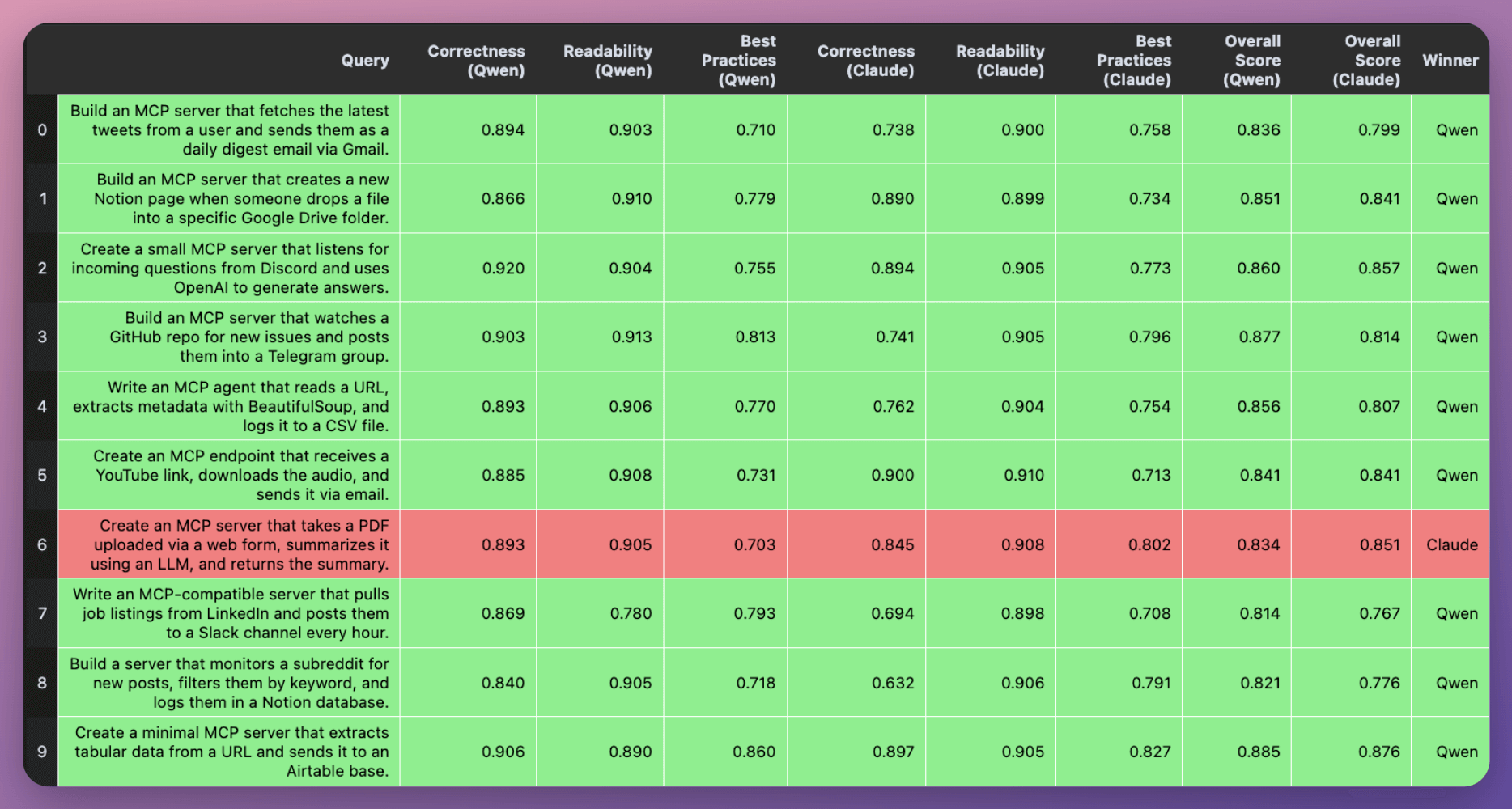

On X, Avi Chawla compared Qwen3-Coder and Claude Sonnet 4 on ten MCP server development tasks. Qwen3-Coder came out ahead in nine cases, consistently posting higher correctness scores.

Qwen Code command line tool

Alongside the new model, Alibaba is releasing Qwen Code, a command line tool for developers. Qwen Code is a fork of Google's Gemini CLI (Alibaba's announcement refers to it as "Gemini Code") that has been optimized for Qwen3-Coder, with updated prompts and function-calling protocols. It supports the OpenAI SDK and can be configured using environment variables.
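Configuring an OpenAI-compatible client through environment variables might look roughly like the sketch below. `OPENAI_API_KEY` and `OPENAI_BASE_URL` are the variables the OpenAI Python SDK itself reads; `OPENAI_MODEL`, the `EndpointConfig` class, and the helper functions are assumptions made for this illustration, and the actual endpoint URL and model name have to come from the provider's documentation.

```python
import os
from dataclasses import dataclass

# OPENAI_MODEL is an assumed variable name for this sketch.
REQUIRED_VARS = ("OPENAI_API_KEY", "OPENAI_BASE_URL", "OPENAI_MODEL")


@dataclass(frozen=True)
class EndpointConfig:
    """Hypothetical container for the three settings."""
    api_key: str
    base_url: str
    model: str


def load_config(env=None) -> EndpointConfig:
    """Read the settings from the environment (or from a dict, for testing)."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return EndpointConfig(
        api_key=env["OPENAI_API_KEY"],
        base_url=env["OPENAI_BASE_URL"],
        model=env["OPENAI_MODEL"],
    )


def build_chat_request(config: EndpointConfig, prompt: str) -> dict:
    """Build keyword arguments for an OpenAI-style chat completion call."""
    return {
        "model": config.model,
        "messages": [{"role": "user", "content": prompt}],
    }


def send(config: EndpointConfig, prompt: str):
    """Send the request; needs the third-party `openai` package and network access."""
    from openai import OpenAI  # imported lazily so the rest runs without it

    client = OpenAI(api_key=config.api_key, base_url=config.base_url)
    return client.chat.completions.create(**build_chat_request(config, prompt))
```

Keeping the settings in the environment means the same script can be pointed at a different OpenAI-compatible endpoint without code changes.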

Qwen3-Coder also integrates with existing developer tools. For Claude Code, users need an API key from Alibaba Cloud Model Studio.

Alibaba says more Qwen3-Coder model sizes are on the way, aiming to deliver strong performance with lower deployment costs. The company is also exploring whether coding agents can improve themselves over time. While the 480B model is too large to run on a single standard GPU, API access is available through Alibaba Cloud Model Studio.
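A rough estimate shows why the 480B model does not fit on ordinary hardware. The sketch below counts memory for the weights only, under assumed numeric precisions; it ignores activations and the key-value cache, so real requirements are higher. In a mixture-of-experts model, all experts typically need to be held in memory even though only some are active at a time, so the total parameter count is the relevant figure.

```python
TOTAL_PARAMS = 480_000_000_000  # total parameters, as reported

# Assumed storage precisions and their size in bytes per parameter.
BYTES_PER_PARAM = {"bf16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params: int, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, size in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(TOTAL_PARAMS, size)
        print(f"{precision}: about {gb:,.0f} GB for weights alone")
```

Under these assumptions the weights need about 960 GB at 16-bit precision and still about 240 GB at 4-bit, well beyond the memory of any single consumer GPU.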

With this launch, Alibaba positions Qwen3-Coder as an open-source alternative to proprietary coding assistants from companies like Anthropic and Google. The open-source approach sets it apart from most Western competitors.

Because coding tasks often involve processing large codebases or documentation, API costs can rise quickly, sometimes forcing users into expensive subscriptions. Qwen3-Coder’s strong open-source performance could put price pressure on these providers.

The code and model weights to run Qwen3-Coder locally are available on GitHub and Hugging Face. There’s also a demo for building small web apps via chat.
