RATIONALYST: How implicit rationales improve AI reasoning

Researchers at Johns Hopkins University have developed an AI model called RATIONALYST that improves the reasoning capabilities of large language models through implicit rationales.

The team used a pre-trained language model to generate implicit rationales from unlabeled text data. They gave the model a few examples demonstrating what an implicit rationale could look like. The model then produced similar rationales for new texts.

To improve the quality of the generated rationales, the researchers filtered them by checking whether each one made the subsequent text easier to predict. Only rationales meeting this criterion were retained.
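The filtering idea can be illustrated with a minimal sketch. It assumes a hypothetical scoring function `log_prob(prefix, continuation)` that returns how likely a language model finds the continuation given the prefix. The names `Candidate`, `filter_rationales`, `min_gain`, and the word-overlap scorer `toy_log_prob` are illustrative stand-ins, not the researchers' actual code.

```python
from typing import Callable, List, NamedTuple


class Candidate(NamedTuple):
    context: str    # text preceding the rationale
    rationale: str  # implicit rationale generated by the model
    next_text: str  # text that actually follows in the source


# Hypothetical signature: (prefix, continuation) -> score of the continuation
LogProbFn = Callable[[str, str], float]


def keeps_rationale(c: Candidate, log_prob: LogProbFn, min_gain: float = 0.0) -> bool:
    """Keep a rationale only if it makes the following text more predictable."""
    without = log_prob(c.context, c.next_text)
    with_rationale = log_prob(c.context + " " + c.rationale, c.next_text)
    return with_rationale - without > min_gain


def filter_rationales(
    candidates: List[Candidate], log_prob: LogProbFn, min_gain: float = 0.0
) -> List[Candidate]:
    return [c for c in candidates if keeps_rationale(c, log_prob, min_gain)]


def toy_log_prob(prefix: str, continuation: str) -> float:
    """Stand-in for a real language model: counts continuation words
    that already appear in the prefix (assumption, for demonstration only)."""
    prefix_words = set(prefix.lower().split())
    return float(sum(w in prefix_words for w in continuation.lower().split()))


context = "Harry used magic outside of Hogwarts"
helpful = Candidate(context, "When someone breaks the rule, he will be punished", "He is punished")
unhelpful = Candidate(context, "Owls deliver mail", "He is punished")
kept = filter_rationales([helpful, unhelpful], toy_log_prob)
```

In a real pipeline, `toy_log_prob` would be replaced by token log-likelihoods from a language model, and `min_gain` would need tuning. The article does not specify the threshold used.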

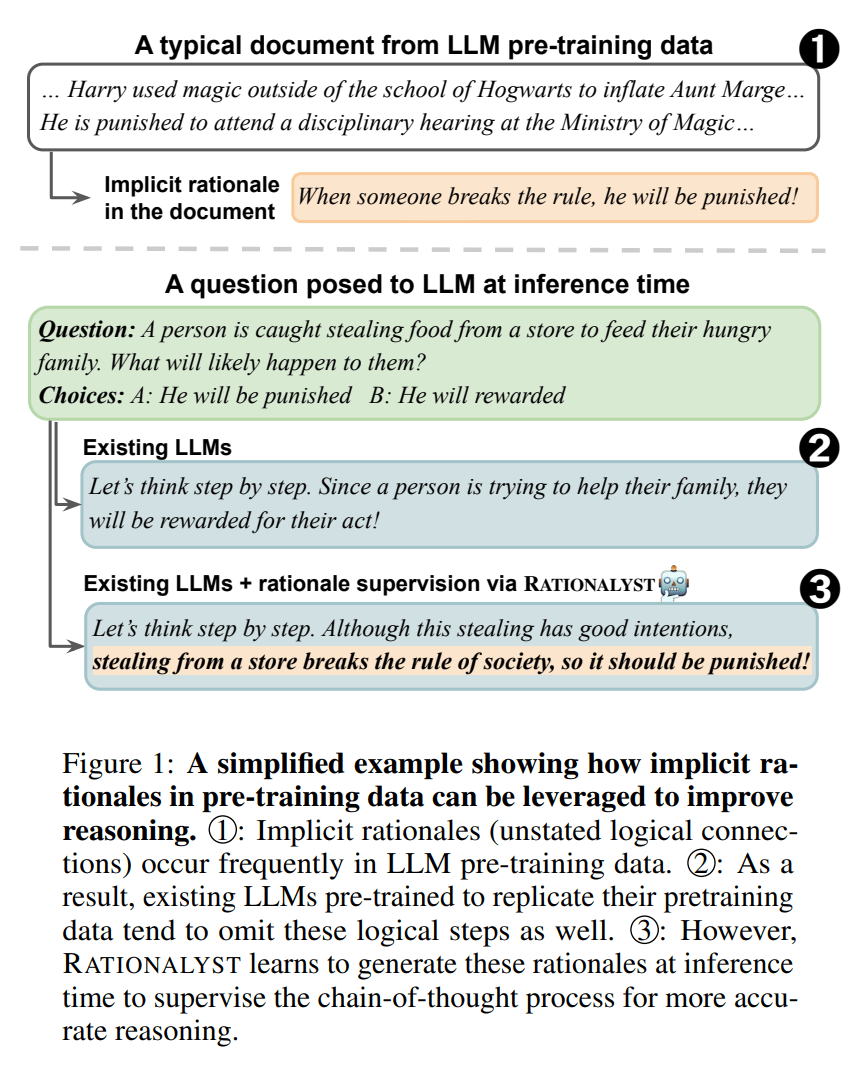

For instance, given the text "Harry used magic outside of Hogwarts to inflate Aunt Marge... He is punished to attend a disciplinary hearing at the Ministry of Magic...", the model generated the implicit justification "When someone breaks the rule, he will be punished!". This justification was deemed useful as it established a causal link between Harry's rule-breaking and his punishment.

Using this method, the researchers extracted about 79,000 implicit rationales from various data sources to train RATIONALYST.

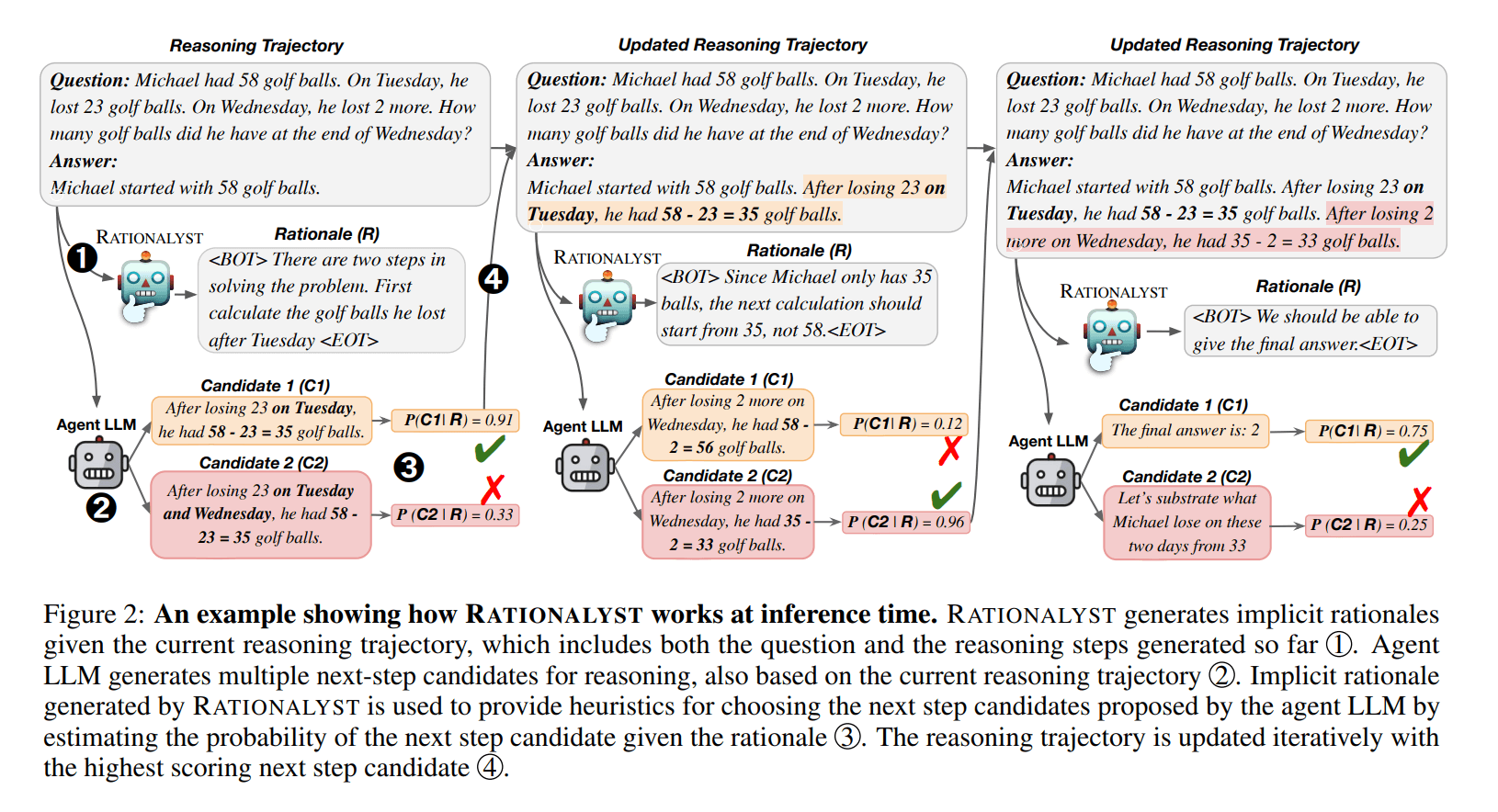

During inference, RATIONALYST monitors the step-by-step solutions produced by other models. It generates an implicit rationale for each step and uses it to select the most likely next step.
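The inference loop described above might look roughly like the following sketch. It assumes three hypothetical callables: `propose` (the reasoning model suggesting candidate next steps), `gen_rationale` (the rationale model) and `score` (how well a candidate step fits the rationale). None of these names come from the RATIONALYST code base, and the real system may differ in how it scores candidates.

```python
from typing import Callable, List

Propose = Callable[[List[str]], List[str]]  # trajectory -> candidate next steps
GenRationale = Callable[[List[str]], str]   # trajectory -> implicit rationale
Score = Callable[[str, str], float]         # (rationale, step) -> fit score


def pick_next_step(
    trajectory: List[str],
    candidates: List[str],
    gen_rationale: GenRationale,
    score: Score,
) -> str:
    """Generate a rationale for the steps so far, then choose the
    candidate step that fits this rationale best."""
    rationale = gen_rationale(trajectory)
    return max(candidates, key=lambda step: score(rationale, step))


def guided_solve(
    question: str,
    propose: Propose,
    gen_rationale: GenRationale,
    score: Score,
    max_steps: int = 10,
) -> List[str]:
    """Build a solution step by step, letting the rationale model
    decide which proposed step to keep at each point."""
    trajectory = [question]
    for _ in range(max_steps):
        candidates = propose(trajectory)
        if not candidates:  # the reasoning model has nothing more to add
            break
        trajectory.append(pick_next_step(trajectory, candidates, gen_rationale, score))
    return trajectory
```

The rationale model here only steers the search. The candidate steps themselves still come from the separate reasoning model, which matches the article's description of RATIONALYST as a supervisor of other models.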

The researchers tested RATIONALYST on various reasoning tasks, including mathematical, logical and scientific reasoning. The model improved reasoning accuracy by an average of 3.9 percent on seven representative benchmarks.

RATIONALYST outperforms other verifier models

Notably, RATIONALYST outperformed larger verifier models like GPT-4 in the tests. However, newer models and models specialized in reasoning, such as GPT-4o or o1, were not included in the comparison.

The team believes their data-centric approach allows RATIONALYST to generalize process supervision across different reasoning tasks without human annotation.

The researchers see RATIONALYST as a promising way to enhance the interpretability and performance of large language models in reasoning tasks. By generating human-understandable reasoning, the system could prove particularly useful in complex domains like mathematics or programming.

Future research may focus on scaling RATIONALYST with stronger models and larger datasets.

The code is available on GitHub.
