REPA accelerates diffusion model training by a factor of 17.5

A new technique called REPA dramatically accelerates the training of AI image generation models. The method leverages insights from self-supervised image models to improve both speed and quality.

REPA, which stands for REPresentation Alignment, aims to slash training times while enhancing output quality. It does this by incorporating high-quality visual representations from models like DINOv2 instead of relying solely on diffusion models to learn them independently.

Diffusion models typically create noisy images that are gradually refined into clean ones. REPA adds a step that compares the representations generated during this denoising process with those from DINOv2. It then projects the diffusion model's hidden states onto DINOv2's representations.

This approach helps the diffusion model extract meaningful features even from noisy training data. The result is an internal representation that closely matches DINOv2's, without requiring extensive training on large image datasets.

Better internal representations help with faster training

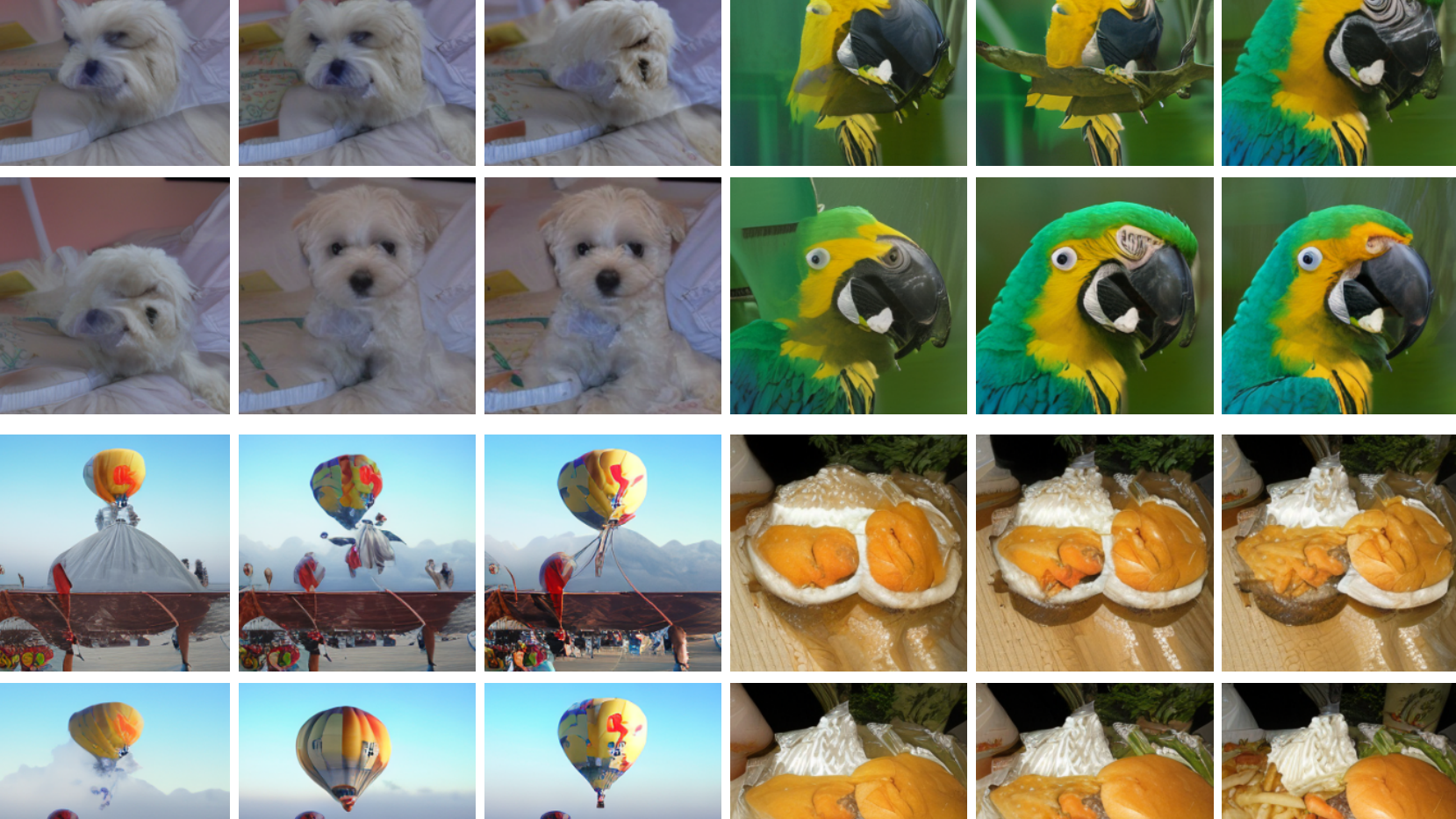

The researchers say REPA not only boosts efficiency but also improves generated image quality. Tests with various diffusion model architectures showed striking improvements:

- Training time reduced by up to 17.5x for some models

- No loss in output image quality

- Better performance on standard image quality metrics

In one example, a SiT-XL model using REPA achieved in 400,000 training steps what a conventional model needed 7 million steps to accomplish. The researchers see it as an important step toward more powerful and efficient AI image generation systems.

More details and code are available on GitHub.

AI News Without the Hype – Curated by Humans

As a THE DECODER subscriber, you get ad-free reading, our weekly AI newsletter, the exclusive "AI Radar" Frontier Report 6× per year, access to comments, and our complete archive.

Subscribe nowAI news without the hype

Curated by humans.

- Over 20 percent launch discount.

- Read without distractions – no Google ads.

- Access to comments and community discussions.

- Weekly AI newsletter.

- 6 times a year: “AI Radar” – deep dives on key AI topics.

- Up to 25 % off on KI Pro online events.

- Access to our full ten-year archive.

- Get the latest AI news from The Decoder.