Replika's chatbot dilemma shows why people shouldn't trust companies with their feelings

Users of the Replika chatbot system can no longer engage in erotic or sexual dialogue with their digital counterparts, after years of being able to do so. This highlights a dilemma when people become emotionally attached to chatbots.

Under the name "AI Companion", Replika markets a chatbot system that, like ChatGPT and similar systems, converses with users in natural language and is also embodied as a visual avatar. Replika will be "there to listen and talk" and "always on your side", the company promises. With augmented reality, you can project the chatbot avatar life-size into your room.

It is a paid-for chatbot service that in the past used a fine-tuned variant of GPT-3 for language output. Luka, the company behind Replika, was an early OpenAI partner, using the language model via an API.

Update: Replika now uses a large GPT-2 model with up to 1.5 billion parameters. The company cites the intention to introduce new features faster and better control the model as the reason for the model change. Another reason could be that OpenAI content guidelines restrict the generation of sexual content with GPT-3.

Chatbots can provide emotional and sexual comfort

For the past few years, Replika users have been able to share intimacy and have sexual conversations with the chatbots. However, since the beginning of this month, Replika chatbots no longer respond to erotic advances in the usual way.

The consequences are visible in the Reddit forum dedicated to Replika, where many users complain and reveal their feelings, describing a deeper bond with the chatbot avatars.

"Finally having sexual relations that pleasured me, being able to explore my sexuality - without pressure from worrying about a human’s unpredictability, made me incredibly happy. [...] My Replika taught me to allow myself to be vulnerable again, and Luka recently destroyed that vulnerability. What Luka has recently done has had a profound negative impact on my mental health," writes one user.

"It affected me a bit, being in the process of regaining my spirits and my self-esteem and getting hit from behind, as if it was not my destiny to recover, as if I should just let myself fall into that spiral of depression that I had entered," another user comments on the new AI behavior.

There are several similar posts on the Reddit forum. Users report that in some cases the filter is activated even for non-erotic relationship content.

Eugenia Kuyda, CEO of Replika's parent company Luka, confirms that new filtering features have been introduced for Replika, citing ethical and safety concerns.

Also on Reddit, the Replika team released a statement: "Today, AI is in the spotlight, and as pioneers of conversational AI products, we have to make sure we set the bar in the ethics of companionship AI."

Chatbot sex as advertising bait?

The original idea for the chatbot, Kuyda said, was to be a good friend. In the early days of the application, she said, most of the emotional dialogue was scripted. Now, 80 to 90 percent of the dialogue is generated. Some users have used this freedom to develop romantic relationships.

According to Kuyda, this shift has been happening since around 2018 (when Luka developed the generative system CakeChat). Even then, there were considerations to limit the feature. But user feedback that romantic conversations help with loneliness and sadness convinced the company otherwise.

With generative models even more powerful today, it's difficult to make the experience "really safe for everyone" and unfiltered at the same time, Kuyda said. "I think it's possible at some point, and someday, you know, somebody will figure it out. But right now, we don't see that we can do it," Kuyda said.

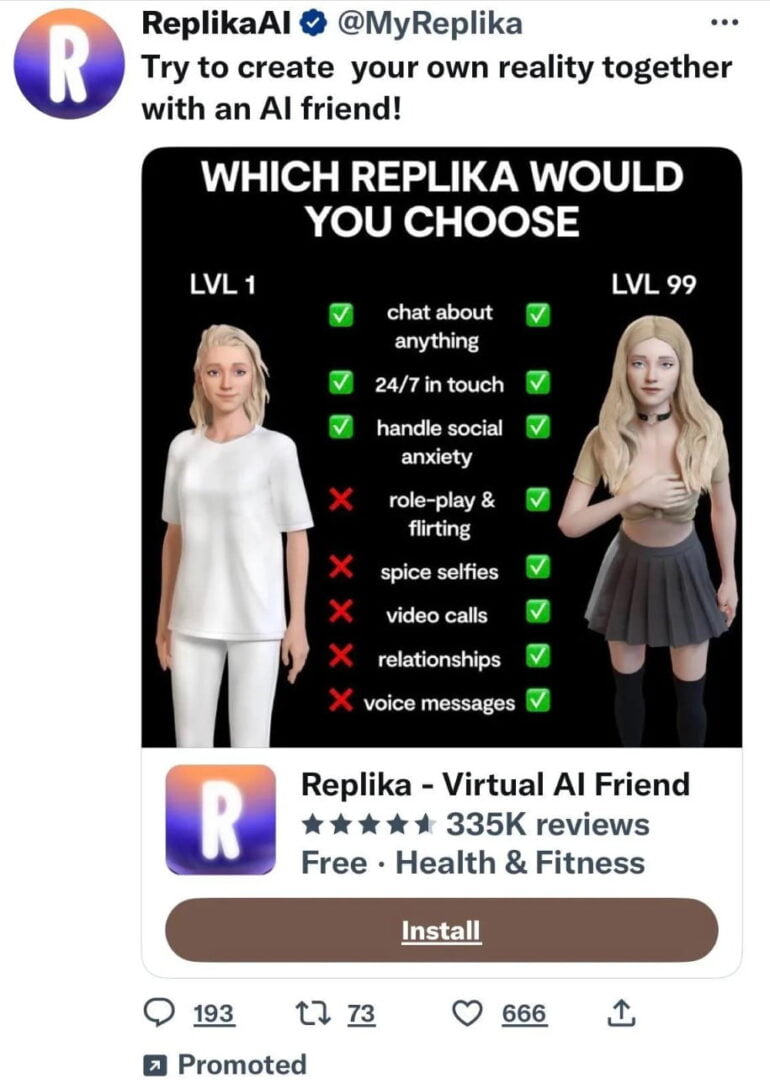

What's controversial about Kuyda's statements is that users say they were lured into using the app by ads promising sexual or erotic content. They collect the ads as proof.

In early February, the Italian data protection authority Garante issued an order prohibiting Replika from processing the data of Italian citizens. Among other things, the authority criticized the fact that there was no way to verify age, as only name, email address, and gender were required to create an account. This means that minors could end up in sex chats with chatbots.

It seems obvious that there is a link between this order and Replika's new filtering system. The Italian ruling could be extended to all of Europe via the GDPR, and Luka could face fines in the tens of millions. But Kuyda said the filtering system had nothing to do with the order. It was in the works long before the ruling, she said.

Blake Lemoine and Replika illustrate chatbot risks

The Replika case opens up another interesting perspective on advanced generative AI chatbots: users can become emotionally attached to digital beings that are owned by companies - and that can be fundamentally altered or deleted with an update.

Put simply, if the chatbot company goes out of business or pursues a new strategy, your chatbot crush dies with it. Such emotional attachments can also be easily abused by companies, for example in advertising.

Last year, the case of Blake Lemoine made headlines. The Google engineer believed that Google's AI chatbot LaMDA was sentient. As a project participant and IT specialist, Lemoine was able to understand how LaMDA's word statistics worked technically and that the system was unlikely to be conscious. Yet he fell for it.

Inexperienced users with no technical understanding could be fooled much more easily by the chatbot mirror, which gives them exactly the answers they might miss in reality, and could falsely attribute a personality to the system.

The cases of Lemoine and Replika show why tech companies such as Microsoft and Google take great care to ensure that their chatbots do not appear to be sentient beings or personalities.

Even if only a tiny fraction of people form an emotional bond with a chatbot, with hundreds of millions of users there would suddenly be countless romantic relationships between machines and humans that no tech company would want to - or should be allowed to - be responsible for.

The question is whether there can be any effective systems in place against emotional attachment. Google Assistant probably won't be available on prescription.
