- LAION aims to complete its safety review of LAION-5B in the second half of January 2024 and then bring the dataset back online.

An investigation by the Stanford Internet Observatory (SIO) has found at least 1,008 images of child sexual abuse material (CSAM) in a publicly available LAION dataset.

The dataset, called LAION-5B, contains links to billions of images, some of them from social media and pornographic video sites. It is widely used as training data for AI image generators, including the open-source Stable Diffusion as well as Google's Parti and Imagen.

The 1,008 confirmed cases may be only part of the problem: according to the report, the dataset may also contain thousands of additional suspected CSAM images.

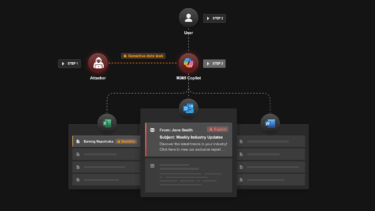

CSAM in the dataset could enable AI-generated CSAM

The presence of CSAM in the dataset could allow AI products trained on this data, such as Stable Diffusion, to create new and potentially realistic child abuse content.

According to the report, image generators based on Stable Diffusion 1.5 are particularly prone to producing such images, and their distribution should be stopped. Stable Diffusion 2.0 is considered safer because its LAION training data was more heavily filtered for harmful and prohibited content.

In late October, the Internet Watch Foundation (IWF) reported a surge in AI-generated CSAM. Within a month, IWF analysts found 20,254 AI-generated images in a single CSAM forum on the dark web. AI-generated CSAM is also becoming more realistic, making it more difficult to investigate real cases.

LAION takes datasets off the web

LAION, the German non-profit organization behind the dataset, has temporarily taken this and other datasets offline and plans to clean them up before publishing them again. According to Bloomberg, LAION has a "zero tolerance policy" for illegal content.

The Stanford report notes that the URLs of the images have also been reported to child protection agencies in the US and Canada. For future datasets, the SIO recommends cross-checking content against lists of known CSAM, either with detection tools such as Microsoft's PhotoDNA or in cooperation with child protection organizations.

LAION-5B has been criticized in the past for containing private medical images of patients. If you want to see what the dataset contains, you can search it on the "Have I Been Trained" website.