Researchers demonstrate VideoDex, a robot learning method that can learn from internet videos of human interactions.

Among the main goals of robotic research are robots that can autonomously perform various tasks in diverse environments. To achieve this goal, many development methods use successful robot interactions as training data.

However, such data is rare because using untrained robots in the real world often requires constant supervision.

For robot learning, this is a chicken-and-egg problem: To reliably gain experience, the robot must already have experience, Carnegie Mellon University (CMU) researchers write in a new paper.

One solution could be training in a simulation. However, there are numerous problems in transferring simulation experience to the real world.

VideoDex learns from human interactions

The CMU team proposes an alternative approach: Learning from Internet videos in which people interact in the real world.

"This data can potentially help bootstrap robot learning by side-stepping the data collection-training loop," the paper says.

Video examples from the Epic Kitchens dataset | Video: Shaw, Bahl et al., Epic Kitchens

The idea of training robots with videos is not new. But most video training is intended to teach robots visual representations, meaning they serve as a source of visual pretraining.

This, they argue, has advantages but neglects a key challenge in robot training: mastering the very large action spaces.

Although pretraining visual representations can aid in efficiency, we believe that a large part of the inefficiency stems from very large action spaces. For continuous control, learning this is exponential in the number of actions and timesteps, and even more difficult for high degree-of-freedom robots

From the paper

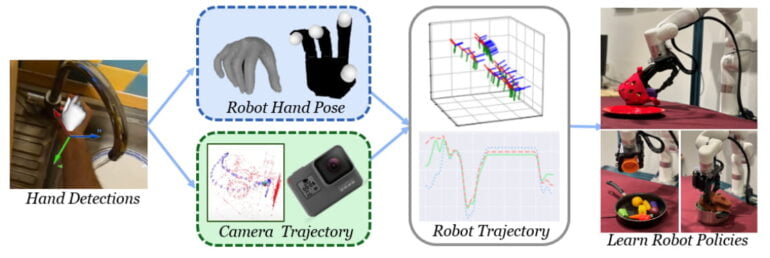

That is why, in addition to the visual aspects, the CMU team also uses training videos to convey information about human movement patterns. For training their "VideoDex" system, they use videos of kitchen interactions filmed from a first-person perspective.

VideoDex demonstrates dexterity

To enable VideoDex to learn from the videos, the team uses algorithms that track hand, wrist, and camera position in space. Hand movements are mapped to the 16-DOF robotic hand, and wrist movements and camera positions are mapped to the movements of the robotic arm.

In addition to this movement information, VideoDex uses learned visual representations and learned Neural Dynamic Policies that improve robot control. Equipped like this, the AI system learns from hundreds or thousands of videos of specific human interactions, such as picking up, opening, or covering items.

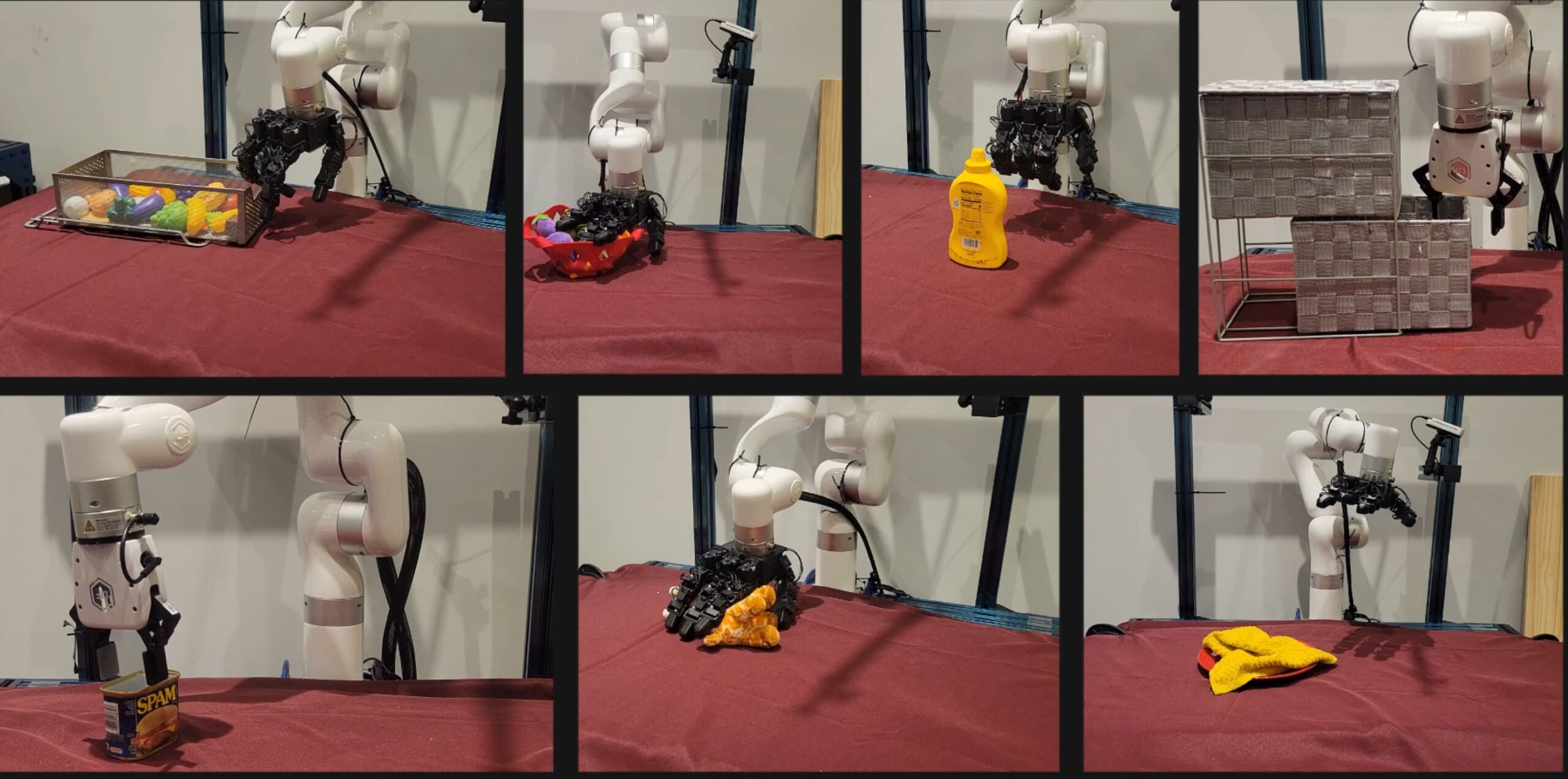

After training, VideoDex needs only a few real-world examples to outperform many of the state-of-the-art robot learning methods in seven different real-world interactions. These examples can be previously demonstrated to the robotic arm via human remote control.

Video: Shaw, Bahl et al.

More examples of their work are available on the VideoDex project page. The code will be published there soon.